Table of Contents

- 1. best links

- 2. most frequent math methods

- 3. common terms

- 4. rare terms

- 5. TODO problems classification

- 6. Data Analysis [ə'nælɪsɪs]

- 6.1. TODO open-source tools

- 6.2. dictionary

- 6.3. Steps

- 6.4. 2019 pro https://habr.com/ru/company/JetBrains-education/blog/438058/

- 6.5. EXAMPLES OF ANALYSIS

- 6.6. EDA Exploratory analysis

- 6.7. gradient boosting vs NN

- 6.8. theory

- 6.9. Feature Preparation

- 6.9.1. terms

- 6.9.2. Outliers

- 6.9.3. IDs encoding with embeddings

- 6.9.4. Categorical encoding

- 6.9.5. feature filtering (feature selection)

- 6.9.6. imbalanced classes and sampling

- 6.9.7. Skewed numerical feature

- 6.9.8. missing values: NaN, None

- 6.9.9. numerical data to bins

- 6.9.10. Sparse Classes

- 6.9.11. Feature engineering

- 6.9.12. Standardization, Rescale, Normalization

- 6.9.13. feature selection (correlation)

- 6.9.14. links

- 6.10. Finding relationships among variables, or data mining

- 6.11. Correlation analysis

- 6.12. Cluster analysis

- 6.13. Linear regression analysis - linear regression

- 6.13.1. types

- 6.13.2. parameter estimation methods

- 6.13.3. goals of regression analysis

- 6.13.4. requirements for regression analysis

- 6.13.5. Linear least squares (LLS) - the simplest

- 6.13.6. regularization methods

- 6.13.7. logistic regression (or logit regression)

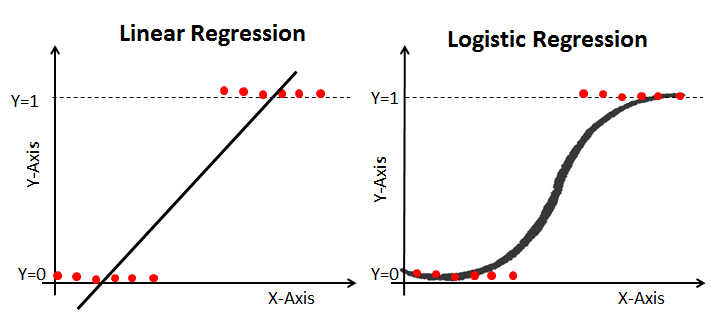

- 6.13.8. Linear Regression Vs. Logistic Regression

- 6.13.9. example1

- 6.13.10. example2

- 6.13.11. links

- 6.14. Factor analysis

- 6.15. Time Series Analysis

- 6.16. Feature Importance

- 6.17. Small amount of data

- 6.18. Probability Calibration

- 6.19. Ensembles

- 6.20. Hypothesis testing

- 6.21. Autocorrelation ACF

- 6.22. Optimization problems: Mathematical Optimization (mathematical programming)

- 6.23. Optimization algorithms

- 6.24. plot types

- 6.24.1. simple line plots with descriptions

- 6.24.2. axis formatting

- 6.24.3. histogram

- 6.24.4. box plot

- 6.24.5. bar plot, bar chart

- 6.24.6. Q–Q plot

- 6.24.7. Scatter plot

- 6.24.8. Scatter matrix

- 6.24.9. Correlation Matrix with heatmap

- 6.24.10. PDP

- 6.24.11. pie chart

- 6.24.12. sns.lmplot for 2 columns (scatter + regression)

- 6.25. plot types by purpose

- 6.26. plotting libraries

- 6.27. texts

- 6.28. typical value

- 6.29. similarity measure (similarity coefficient)

- 6.30. libs

- 6.31. decision tree

- 6.32. product analytics

- 6.33. links

- 7. Information retrieval

- 8. Recommender system

- 9. Machine learning

- 9.1. steps

- 9.2. ensembles theory

- 9.3. Heuristics

- 9.4. Entropy

- 9.5. Artificial general intelligence AGI or strong AI or full AI

- 9.6. Machine learning

- 9.6.1. ML techniques

- 9.6.2. terms

- 9.6.3. Bias and variance for analyzing overfitting

- 9.6.4. Regression vs. classification

- 9.6.5. Reducing Loss (loss function) or cost function or residual

- 9.6.6. Regularization: overfitting problem

- 9.6.7. Sampling

- 9.6.8. CRF Conditional random field

- 9.6.9. learning types

- 9.6.10. Training, validation, and test sets

- 9.6.11. supervised learning

- 9.6.12. unsupervised learning

- 9.6.13. Structured prediction

- 9.6.14. Vorontsov's ML course, ShAD (Yandex School of Data Analysis) http://www.machinelearning.ru

- 9.6.15. metrics

- 9.6.16. TODO problems

- 9.6.17. economic efficiency

- 9.6.18. Spike-timing-dependent plasticity STDP

- 9.6.19. non-linearity

- 9.6.20. math

- 9.6.21. optimal configuration

- 9.6.22. TODO merging

- 9.6.23. training, Inference mode, frozen state

- 9.6.24. MY NOTES

- 9.6.25. Spatial Transformer Network (STN)

- 9.6.26. Bayesian model averaging

- 9.6.27. residual connection (or skip connection)

- 9.6.28. vanishing gradient problem

- 9.6.29. Multi-task learning(MTL)

- 9.6.30. many classes

- 9.6.31. super-convergence Fast Training with Large Learning rate

- 9.6.32. One Shot Learning & Triplet loss & triplet network

- 9.6.33. Design Patterns

- 9.6.34. Evaluation Metrics

- 9.6.35. forecast

- 9.6.36. Machine Learning Crash Course Google https://developers.google.com/machine-learning/crash-course/ml-intro

- 9.6.37. Bias–variance tradeoff (dilemma) or Approximation-generalization tradeoff

- 9.6.38. Explainable AI (XAI) and Interpretable Machine Learning (IML) models

- 9.7. Sampling

- 9.8. likelihood, the log-likelihood, and the maximum likelihood estimate

- 9.9. Reinforcement learning (RL)

- 9.10. Distributed training

- 9.11. Federated learning (or collaborative learning)

- 9.12. Statistical classification

- 9.13. Topic modeling

- 9.14. Popular methods

- 9.15. forecasting

- 9.16. Current state

- 9.17. kafka

- 9.18. in credit institutions

- 9.19. TODO Sberbank projects

- 9.20. KDTree similarity search

- 9.21. Applications in banking

- 9.22. auxiliary mathematical methods

- 9.23. AutoML

- 9.24. Well-known datasets

- 9.25. toy datasets

- 9.26. TODO Genetic algorithms

- 9.27. TODO Uplift modelling

- 9.28. A/B test

- 9.29. Regression

- 9.30. Similarity /ˌsiməˈlerədē/

- 10. Artificial Neural Network and deep learning

- 10.1. TODO frameworks

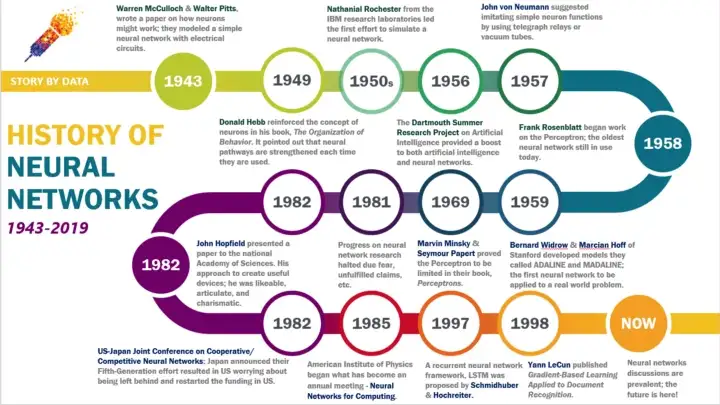

- 10.2. History

- 10.3. Evolution of Deep Learning

- 10.4. persons

- 10.5. Theory basis

- 10.6. STEPS

- 10.7. University lecture notes

- 10.8. Data Augmentation

- 10.9. Major network Architectures

- 10.10. Activation Functions φ(net)

- 10.11. types of networks and layers

- 10.12. Layer Normalization and Batch Normalization

- 10.13. hybrid networks

- 10.14. Dynamic Neural Networks

- 10.15. MLP, CNN, RNN, etc.

- 10.16. batch and batch normalization

- 10.17. patterns of design

- 10.18. TODO MultiModal Machine Learning (MMML)

- 10.19. challenges

- 10.20. GAN Generative adversarial network

- 10.21. interpretation

- 11. Natural Language Processing (NLP)

- 11.1. history

- 11.2. NLP pyramid

- 11.3. Tokenization

- 11.4. Sentiment analysis definition (Liu 2010)

- 11.5. Approaches:

- 11.6. Machine learning steps:

- 11.7. Mathematical methods of text analysis

- 11.8. Named-entity extraction, NER (Named-Entity Recognition)

- 11.9. extracting features

- 11.10. preprocessing

- 11.11. n-gram

- 11.12. Bleu Score and WER Metrics

- 11.13. Levels of analysis:

- 11.14. Universal grammar

- 11.15. Language corpus

- 11.16. seq2seq model

- 11.17. Handwritten digit analysis

- 11.18. Fully-parallel text generation for neural machine translation

- 11.19. speaker diarization task

- 11.20. keyword extraction

- 11.21. Approximate string matching or fuzzy string searching

- 11.22. pre-training objective

- 11.23. Principle of compositionality or Frege's principle

- 11.24. 2023 major development

- 11.25. IntellectDialog - automating customer interaction in messengers

- 11.26. Transformers applications for NLP

- 11.27. metrics

- 11.28. RLHF (Reinforcement Learning from Human Feedback)

- 11.29. Language Server

- 11.30. GPT

- 12. LLM, chat bots, conversational AI, intelligent virtual agents (IVAs)

- 12.1. terms

- 12.2. history

- 12.3. free chatgpt api

- 12.4. instruction-following LLMs

- 12.5. DISADVANTAGES AND PROBLEMS

- 12.6. ability to use context from previous interactions to inform their responses to subsequent questions

- 12.7. GigaChat Sber

- 12.8. GPT - Generative Pre-trained Transformer

- 12.9. llama2

- 12.10. frameworks to control LLM

- 12.11. size optimization

- 12.12. distributed training - choose framework

- 12.13. TODO bots

- 12.14. Fine-tuning

- 12.15. pipeline

- 12.16. tools

- 12.17. LangChain

- 12.18. Most Used Vectorstores

- 12.19. LLM Providers

- 12.20. Prompt Engineering vs Train Foundation Models vs Adapters

- 12.21. TODO Named tensor notation.

- 12.22. links

- 13. Adversarial machine learning

- 14. huggingface.co

- 14.1. pip packages

- 14.2. main projects

- 14.3. reduce inference

- 14.4. transformers

- 14.5. accelerate - DISTRIBUTED

- 14.6. PEFT - DISTRIBUTED

- 14.7. TRL

- 14.8. Spaces

- 14.9. cache and offline mode

- 14.10. Main concepts

- 14.11. problems:

- 14.12. pip install gradio_client

- 14.13. sci-libs/huggingface_hub

- 14.14. autotrain

- 14.15. links

- 15. OLD deploy tf keras

- 16. deeppavlov lectures

- 17. passport

- 18. captcha

- 19. kaggle

- 20. AI in banks

- 21. MLOps and ModelOps (Machine Learning Operations)

- 21.1. terms

- 21.2. DevOps strategies

- 21.3. CRISP-ML. The ML Lifecycle Process.

- 21.4. Challenges with the ML Process:

- 21.5. implementation steps:

- 21.6. pipeline services or workflow management software (WMS)

- 21.7. tasks and tools

- 21.8. principles

- 21.9. standard

- 21.10. TFX - Tensorflow Extended

- 21.11. TODO Kubeflow

- 21.12. TODO MLFlow

- 21.13. TODO Airflow

- 21.14. TODO - mlmodel service

- 21.15. TODO continuous training

- 21.16. TODO Feature attribution or feature importance

- 21.17. links

- 22. Automated machine learning (AutoML)

- 23. Big Data

- 24. hard questions

- 25. cloud, clusters

- 26. Data Roles - Data team

- 27. ML Scientists

- 28. pyannote - audio

- 29. AI Coding Assistants

- 30. Generative AI articles

- 31. Miracle webinars

- 32. semi-supervised learning or weak supervision

- 33. Mojo - language

- 34. interesting AI projects

- 35. nuancesprog.ru

- 36. NEXT LEVEL

- 37. sobes, job interview

- 38. articles

- 39. hardware

- 40. TODO Model compression - smaller

- 41. TODO fusion operator optimization


Overwhelming topics https://en.wikipedia.org/wiki/List_of_numerical_analysis_topics

Similar text categorization problems (word vectors, sentence vectors) https://stackoverflow.com/questions/64739194/similar-text-categorization-problems-word-vectors-sentence-vectors

A personal blog (senarvi) https://github.com/senarvi/senarvi.github.io/tree/master/_posts

1. best links

- Sachin Date, Master of Science, research director, India https://timeseriesreasoning.com

- https://paperswithcode.com/methods/category/autoregressive-transformers

news:

hackathons, news:

97 Things Every Data Engineer Should Know https://books.google.ru/books?id=ZTQzEAAAQBAJ&pg=PT19&hl=ru&source=gbs_selected_pages&cad=2#v=onepage&q&f=false

best statistics blog https://www.youtube.com/@statisticsninja

Papers without pay https://sci-hub.st/

CV Neural networks in sports https://www.youtube.com/channel/UCHuEgvSdCWXBLAUvR516P1w

1.1. papers

1.2. youtube

2021 Deep Learning https://www.youtube.com/playlist?list=PL_iWQOsE6TfVmKkQHucjPAoRtIJYt8a5A

2. most frequent math methods

- 3/2 = math.exp(-math.log(2/3))

- to log: log(value+1)

- from log: exp(value) - 1

- old range 0-240, new range 0-100 => MinMaxScaling = (((OldValue - OldMin) * NewRange) / OldRange) + NewMin => x*100/240 (x*100 // 240 only if an integer result is wanted)

- Percentage = (Part / Total) * 100
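A minimal Python sketch of the formulas above; `rescale` and `percentage` are illustrative helper names, not library functions, and `np.log1p`/`np.expm1` are NumPy's numerically stable forms of log(value+1) and exp(value) - 1.

```python
import math

import numpy as np


def rescale(value, old_min, old_max, new_min, new_max):
    """Min-max rescaling of value from [old_min, old_max] to [new_min, new_max]."""
    old_range = old_max - old_min
    new_range = new_max - new_min
    return (value - old_min) * new_range / old_range + new_min


def percentage(part, total):
    """Share of part in total, in percent."""
    return part / total * 100


print(rescale(120, 0, 240, 0, 100))  # middle of 0-240 maps to the middle of 0-100
print(percentage(30, 120))

# log transform and its inverse: log1p(v) = log(v + 1), expm1(v) = exp(v) - 1
values = np.array([0.0, 9.0, 99.0])
print(np.expm1(np.log1p(values)))  # the round trip returns the original values

# 3/2 expressed through logarithms
print(math.exp(-math.log(2 / 3)))
```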

2.1. layout resolution

- x/y = 2

- x*y = 440

- y = sqrt(440 / 2)

- x = 440 / y (or x = 2*y)
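The same system solved in Python, assuming the ratio 2 and the product 440 from the list; `layout_size` is a hypothetical helper.

```python
import math


def layout_size(ratio, area):
    """Solve x / y = ratio and x * y = area for positive x and y."""
    y = math.sqrt(area / ratio)
    x = area / y  # equivalently x = ratio * y
    return x, y


x, y = layout_size(2, 440)
print(round(x, 2), round(y, 2))
```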

2.2. model size in memory

In bf16 every parameter uses 2 bytes (4 bytes in fp32), and an optimizer adds its own state on top, e.g. 8 more bytes per parameter for Adam https://huggingface.co/docs/transformers/perf_train_gpu_one#optimizer

- a 7B-parameter model would use (2+8)*7B = 70 GB

- in GiB: (2+8)*7*10**9/1024/1024/1024 ≈ 65.2 GiB
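The same arithmetic as a function, a sketch that, like the note, counts only the weights and the optimizer state (gradients and activations would add more); `training_memory_gb` is an illustrative name.

```python
def training_memory_gb(n_params, bytes_per_param=2, optimizer_bytes=8, gib=False):
    """Memory for weights plus optimizer state only (no gradients, no activations).

    bytes_per_param: 2 for bf16/fp16, 4 for fp32.
    optimizer_bytes: 8 per parameter for Adam's two fp32 moment estimates.
    """
    total_bytes = (bytes_per_param + optimizer_bytes) * n_params
    return total_bytes / 1024**3 if gib else total_bytes / 10**9


print(training_memory_gb(7 * 10**9))                      # decimal gigabytes
print(training_memory_gb(7 * 10**9, gib=True))            # binary gibibytes, as in /1024/1024/1024
print(training_memory_gb(7 * 10**9, bytes_per_param=4))   # fp32 weights
```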

2.3. compare two objects by features

We cannot compare them if we don't know the max and min values of the features. But if we know that the min value of every feature is 0, we can divide each feature by its max, which puts all features on the same 0-1 scale:

    import numpy as np

    row1 = {'SPEAKER_00': 21.667442, 'SPEAKER_00_fuzz': 100}
    row2 = {'SPEAKER_01': 7.7048755, 'SPEAKER_01_fuzz': 741}
    a = np.array([[row1['SPEAKER_00'], row1['SPEAKER_00_fuzz']],
                  [row2['SPEAKER_01'], row2['SPEAKER_01_fuzz']]])
    print(a.max(axis=0) - 0)
    # divide each feature (column) by its range; the minimum is assumed to be 0
    a = a / (a.max(axis=0) - 0)
    print(a)
    if np.sum(a[0] - a[1]) > 0:
        print('SPEAKER_00 has greater value')
    else:
        print('SPEAKER_01 has greater value')

2.4. distance matrix

2.4.1. calc

two forms:

- distance array (condensed form): distvec = pdist(x)

- square form: squareform(distvec)

    import numpy as np
    from scipy.spatial.distance import pdist, squareform

    print(" --------- distance array:")

    # custom "metric": signed difference of the first coordinates (not a true distance)
    def cal(x, y):
        print((x - y)[0])
        return (x - y)[0]

    ar = np.array([[2, 0, 2], [2, 2, 3], [-2, 4, 5], [0, 1, 9], [2, 2, 4]])
    distvec = pdist(ar, metric=cal)  # one value per pair (i, j) with i < j
    print()
    print(distvec)
    print()
    print(" --------- square form:")
    sqf = squareform(distvec)  # symmetric n x n matrix with a zero diagonal
    print(sqf)
    print()

     --------- distance array:
    0
    4
    2
    0
    4
    2
    0
    -2
    -4
    -2

    [ 0.  4.  2.  0.  4.  2.  0. -2. -4. -2.]

     --------- square form:
    [[ 0.  0.  4.  2.  0.]
     [ 0.  0.  4.  2.  0.]
     [ 4.  4.  0. -2. -4.]
     [ 2.  2. -2.  0. -2.]
     [ 0.  0. -4. -2.  0.]]

Output of a variant in which `cal` prints both rows and returns 1 for every pair:

     --------- distance array:
    [2 0 2] [2 2 3]
    [2 0 2] [-2 4 5]
    [2 0 2] [0 1 9]
    [2 0 2] [2 2 4]
    [2 2 3] [-2 4 5]
    [2 2 3] [0 1 9]
    [2 2 3] [2 2 4]
    [-2 4 5] [0 1 9]
    [-2 4 5] [2 2 4]
    [0 1 9] [2 2 4]
    [1. 1. 1. 1. 1. 1. 1. 1. 1. 1.]
     --------- square form:
    [[0. 1. 1. 1. 1.]
     [1. 0. 1. 1. 1.]
     [1. 1. 0. 1. 1.]
     [1. 1. 1. 0. 1.]
     [1. 1. 1. 1. 0.]]

2.4.2. find lowest/max

    import numpy as np

    # continues the previous block: uses sqf and ar
    np.fill_diagonal(sqf, np.inf)  # ignore the distance of each point to itself
    print("sqf\n", sqf)
    # alternative: i, j = np.unravel_index(sqf.argmin(), sqf.shape)
    i, j = np.where(sqf == sqf.min())
    i, j = i[0], j[0]  # first pair that has the minimal value
    print("result indexes:", i, j)
    print("result:\n\t", ar[i], "\n\t", ar[j])

    sqf
     [[inf 0. 4. 2. 0.]
     [ 0. inf 4. 2. 0.]
     [ 4. 4. inf -2. -4.]
     [ 2. 2. -2. inf -2.]
     [ 0. 0. -4. -2. inf]]
    result indexes: 2 4
    result:
         [-2 4 5]
         [2 2 4]

2.4.3. faster

    import numpy as np

    def matrix_rand_score(a, b):
        correl = np.zeros((len(a), len(b)), dtype=float)
        for i, ac in enumerate(a):
            for j, bc in enumerate(b):
                if i > j:  # fill only the upper triangle (i <= j), skipping half of the pairs
                    continue
                c = ac + bc
                print(i, j, c)
                correl[i, j] = c
        return correl

    v = matrix_rand_score([1, 2, 3, 4], [6, 7, 8, 9])
    print(v)

    0 0 7
    0 1 8
    0 2 9
    0 3 10
    1 1 9
    1 2 10
    1 3 11
    2 2 11
    2 3 12
    3 3 13
    [[ 7.  8.  9. 10.]
     [ 0.  9. 10. 11.]
     [ 0.  0. 11. 12.]
     [ 0.  0.  0. 13.]]
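The loop above visits only half of the pairs, but every step still runs in Python. A vectorized sketch of the same result, assuming the score is simply `a[i] + b[j]` as in `matrix_rand_score`: `np.add.outer` builds all sums at once and `np.triu` zeroes the lower triangle (the name `matrix_sum_score_vectorized` is hypothetical).

```python
import numpy as np


def matrix_sum_score_vectorized(a, b):
    """Matrix of a[i] + b[j] for i <= j, zeros below the diagonal, without Python loops."""
    full = np.add.outer(np.asarray(a, dtype=float), np.asarray(b, dtype=float))
    return np.triu(full)  # keep the upper triangle (j >= i), zero the rest


print(matrix_sum_score_vectorized([1, 2, 3, 4], [6, 7, 8, 9]))
```

For a real score function that cannot be broadcast, the loop version (or `pdist` with a callable metric) is still needed.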

2.5. interpolation

PolynomialFeatures - polynomial regression

- creates a Vandermonde matrix, one row per sample $x_i$:

$[1, x_i, x_i^2, x_i^3, \dots, x_i^{degree}]$

- in $y = \beta_0 + \beta_1 x + \beta_2 x^2 + \dots + \beta_n x^n$ we try to find $\beta_0, \beta_1, \beta_2, \dots, \beta_n$ with linear regression
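A small sketch of the idea with plain NumPy, on hypothetical noise-free data generated from y = 1 + 2x - 0.5x^2: `np.vander(..., increasing=True)` builds the Vandermonde columns 1, x, x^2 (the same columns `PolynomialFeatures(degree=2)` produces with `include_bias=True`), and ordinary least squares recovers the β coefficients.

```python
import numpy as np

x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 1 + 2 * x - 0.5 * x**2  # hypothetical data from a known quadratic, no noise

V = np.vander(x, N=3, increasing=True)        # columns: 1, x, x**2
beta, *_ = np.linalg.lstsq(V, y, rcond=None)  # least squares for beta_0, beta_1, beta_2
print(beta)  # close to [1, 2, -0.5]
```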

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn.linear_model import Ridge
    from sklearn.preprocessing import PolynomialFeatures

    def interpol(x, y, xn):
        poly = PolynomialFeatures(degree=4, include_bias=False)
        ridge = Ridge(alpha=0.006)
        x_appr = np.linspace(x[0], xn, num=15)
        x = np.array(x).reshape(-1, 1)
        # -- train
        x_poly = poly.fit_transform(x)
        ridge.fit(x_poly, y)
        # -- test: reuse the already fitted transformer
        x_appr_poly = poly.transform(x_appr.reshape(-1, 1))
        y_pred = ridge.predict(x_appr_poly)
        # -- plot train points
        plt.scatter(x, y)
        # -- plot the fitted curve and the predicted point at xn
        plt.plot(x_appr, y_pred)
        plt.scatter(x_appr[-1], y_pred[-1])
        plt.ylabel("time in minutes")
        plt.title("interpolation of result for " + str(xn) + " max: " + str(round(y_pred[-1], 2)))
        # plt.savefig('./autoimgs/result_appr.png')
        plt.show()
        plt.close()
        return y_pred[-1]

    x = [5, 15, 20]
    y = [10, 1260, 12175]
    xn = 25  # point to predict; it lies beyond the training range, so this extrapolates
    yn = interpol(x, y, xn)  # result
    print(yn)

42166.34032715159

https://scikit-learn.org/stable/auto_examples/linear_model/plot_polynomial_interpolation.html

3. common terms

- feature [ˈfiːʧə]

- explanatory variable in statistics, a property of an observation, or just a column

- observation

- sample

- selected observations

- sampling

- a selection of a subset of observations used to estimate characteristics of the whole population

- variance [ˈve(ə)rɪəns]

- dispersion, spread; high variance is a symptom of overfitting

- bias [ˈbaɪəs]

- offset; high bias is a symptom of underfitting

- pipeline [ˈpaɪplaɪn]

- a step-by-step ML process; used to parameterize the whole process

- layer [ˈleɪə]

- a structure with an input and an output; a building block of a neural network (NN)

- weight [weɪt]

- end-to-end Deep Learning process

- State-of-the-Art (SOTA) models

- data ingestion

- [ɪnˈdʒɛstʃən] - a broader term than ETL: the process of moving data from a wide variety of sources and structures to where it is needed, in the required format and quality, i.e. getting data into any system (storage and/or applications) that needs it in a particular structure or format for downstream operational use.

- Stochastic

- the property of being well described by a random probability distribution

- latent space or latent feature space or embedding space

- an abstract multi-dimensional space containing feature values that we cannot interpret directly, but which encodes a meaningful internal representation of externally observed events.

- in math: an embedding of a set of items within a manifold in which items resembling each other are positioned closer to one another in the latent space

- model selection

- the task of choosing the best algorithm and the settings of its parameters

- stratification

- class proportions are preserved in both the training and the validation sets

- Degrees of freedom (df)

- the number of values in the final calculation of a statistic that are free to vary; the number of "free" quantities needed to fully determine a vector. It can be not only a natural number but any real number.

- Standard deviation

- square root of the variance

- :: $\sigma = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})^2}$, the square root of the mean squared deviation of each data point from the mean

- Statistical inference

- is a collection of methods that deal with drawing conclusions from data that are prone to random variation.

- derivative test

- if a function is differentiable, a way of finding its maxima (and minima) among the points where the derivative is zero.

- Probability distribution

- a function that gives the probabilities of occurrence of the possible outcomes

- independent and identically distributed i.i.d., iid, or IID

- every observation is independent of the others (it tells something new) and all observations are drawn from the same distribution (they describe the same object y).
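Two of the terms above in code, a sketch on made-up data: the standard deviation computed by the formula and checked against `np.std` (which divides by n by default, `ddof=0`), and a stratified split with scikit-learn's `train_test_split(..., stratify=y)`, which keeps the class share the same in both parts.

```python
import numpy as np
from sklearn.model_selection import train_test_split

data = np.array([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
# population standard deviation: sqrt of the mean squared deviation from the mean
std_manual = np.sqrt(np.sum((data - data.mean()) ** 2) / len(data))
print(std_manual, np.std(data))

# stratification: the share of class 1 stays the same in train and validation
X = np.arange(20).reshape(-1, 1)
y = np.array([0] * 15 + [1] * 5)  # hypothetical labels, 25% positives
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.2, stratify=y, random_state=0)
print(y_tr.mean(), y_val.mean())
```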

4. rare terms

- residual [rɪˈzɪdjʊəl]

- differences between observed and predicted values of data

- error term

- statistical error or disturbance [dɪsˈtɜːbəns]: the "+ e" (often written ε) term in a model such as y = Xβ + e

- Type I error

- (false positive); usually considered more critical than a Type II error

- Type II error

- (false negative); both are concepts from statistical hypothesis testing

- fold

- equal-sized subsamples in cross-validation

- terms of reference

- a technical specification document for a project

- neuron's receptive field

- each neuron receives input from only a restricted area of the previous layer

- Adversarial machine learning

- a setting where an attacker feeds data into a machine learning model with the aim of causing mistakes.

- Coefficient of determination R^2

- regarded as a universal measure of the dependence of one random variable on a set of others: the proportion of the variation in the dependent variable that is predictable from (explained by) the independent variable(s) of the model. Con: it never decreases as more independent variables are added, whether or not the additional "explanatory variables" actually contribute explanatory power.

- Adjusted coefficient of determination

- fixes this con by penalizing the number of explanatory variables.

- shrinkage [ˈSHriNGkij]

- a method of reducing the effects of sampling variation.

- skewness [ˈskjuːnɪs]

- a measure of the asymmetry of the probability distribution of a real-valued random variable about its mean. Positive: longer right tail (mass concentrated on the left); negative: longer left tail (mass concentrated on the right); 0: no skew

- Kurtosis [kəˈtəʊsɪs]

- a measure of the "tailedness" of the probability distribution (a shape measure like skewness, but describing the tails rather than the asymmetry). Excess kurtosis 0: normal distribution

- Information content, self-information, surprisal, Shannon information

- an alternative way of expressing probability (like odds or log-odds), quantifying the level of "surprise" of a particular outcome: I(x) = -log p(x)
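Several of the rare terms computed in Python, a sketch on made-up numbers: R² as 1 - SS_res/SS_tot, the usual adjusted R² formula 1 - (1 - R²)(n - 1)/(n - p - 1) with n observations and p explanatory variables, skewness and excess kurtosis via `scipy.stats.skew` and `scipy.stats.kurtosis` (the latter returns excess kurtosis by default), and self-information in bits. All helper names are illustrative.

```python
import numpy as np
from scipy import stats


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 for n observations and p explanatory variables."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


def self_information(p, base=2):
    """Surprisal -log(p); in bits when base=2."""
    return -np.log(p) / np.log(base)


y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.5, 7.0, 9.0]
r2 = r_squared(y_true, y_pred)
print(r2, adjusted_r_squared(r2, n=4, p=1))

rng = np.random.default_rng(0)
right_tailed = rng.exponential(size=10_000)
normal_sample = rng.normal(size=10_000)
print(stats.skew(right_tailed))       # positive: long right tail
print(stats.kurtosis(normal_sample))  # excess kurtosis, near 0 for normal data
print(self_information(0.5))          # a fair coin flip carries 1 bit
```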

5. TODO problems classification

- ranking - Information retrieval (IR)

- relevance score s = f(x), x=(q,d), q is a query, d is a document

Metric learning

- clustering

- Dimensionality reduction

NLP:

- Text classification

- Word representation learning

- Machine translation

- NER (Named-Entity Recognizing) - classify named entities (also seeks to locate)

- Information extraction

- Natural Language generation

- Dialogue system

- Relation Learning & Knowledge Graphs

- Sentiment and Emotion Analysis (sarcasm, thwarting) - classification of emotions (positive, negative and neutral)

- speech emotion recognition (SER)

- speech recognition, automatic speech recognition (ASR)

- Named entity recognition

- Topic modelling - discover the abstract "topics"

- topic segmentation

- speaker diarization - structuring an audio stream into speaker turns

- speaker segmentation - finding speaker change points in an audio stream

- speaker clustering - grouping together speech segments on the basis of speaker characteristics

- Voice activity detection (VAD) is the task of detecting speech regions in a given audio stream or recording.

- Semantic Role Labeling (automatically identify actors and actions)

- Word Sense Disambiguation - Identifies which sense of a word is used in a sentence

- Keyword spotting (or word spotting) or Keyword Extraction - find instances of given words in large data without full recognition.

- Speech-to-text

- Text-to-speech

- relationship extraction

- Question answering

- Summarisation

Audio & Speech

- ASR automatic speech recognition or Audio recognition

- Keyword Spotting

- Sound Event Detection

- Speech Generation

- Text-to-text

- Human-fall detection

Computer Vision:

- Image classification

- Object detection - detecting instances of semantic objects of a certain class (such as humans, buildings, or cars)

- Image segmentation or Semantic Segmentation - partitioning an image into regions to get something more meaningful and easier to analyze

- Image generation

- Image retrieval

- Video classification

- Scene graph prediction

- localization

- Gaze/Depth Estimation

- Fine-grained recognition

- person re-identification

- Semantic indexing

- Object Tracking

- video generation

- video prediction

- video object segmentation

- video detection

- with NLP: Image captioning, Visual Question Answering

Data Analysis

- Data Regression

- Anomaly/Error Detection…

Reinforcement Learning & Robotic

- imitation learning

- Robot manipulation

- Locomotion

- Policy Learning

- Tabular MDPs

- Visual Navigation

Other Fields

- Drug discovery

- Disease Prediction

- Biometrical recognition

- Precision Agriculture

- Internet Security

5.1. Classification problem and types

- binary classification (two target classes)

- multi-class classification

  - definition: more than two exclusive targets; each sample can belong to only one class

  - one softmax loss for all possible classes.

- multi-label classification

  - definition: more than two non-exclusive targets; maps inputs x to binary vectors y (assigning a value of 0 or 1 to each element (label) in y)

  - i.e. multi-class multi-label classification, in which multiple target classes can be on at the same time

  - one logistic regression loss for each possible class

- binary: [0], [1] … n -> binary cross entropy

- multi-class: [0100], [0001] … n -> categorical cross entropy

- multi-label: [0101], [1110] … n -> binary cross entropy
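The loss choices above, sketched with NumPy (helper names are illustrative; frameworks ship their own versions): multi-class uses one softmax over all classes with categorical cross entropy, multi-label uses an independent sigmoid per class with binary cross entropy.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax; subtracting the max keeps exp() from overflowing."""
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


def categorical_cross_entropy(y_onehot, probs, eps=1e-12):
    """Mean over samples of -sum_k y_k * log(p_k); targets are one-hot rows."""
    probs = np.clip(probs, eps, 1.0)
    return -np.mean(np.sum(y_onehot * np.log(probs), axis=1))


def binary_cross_entropy(y_true, p, eps=1e-12):
    """Mean over all labels of -(y*log(p) + (1-y)*log(1-p)); one sigmoid per label."""
    p = np.clip(p, eps, 1 - eps)
    return -np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))


logits = np.array([[2.0, 0.5, -1.0, 0.1]])  # hypothetical model outputs for 4 classes

# multi-class [0100]: exactly one class is on -> softmax + categorical cross entropy
print(categorical_cross_entropy(np.array([[0, 1, 0, 0]]), softmax(logits)))

# multi-label [0101]: any subset can be on -> per-label sigmoid + binary cross entropy
print(binary_cross_entropy(np.array([[0, 1, 0, 1]]), sigmoid(logits)))
```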

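A multiclass problem can be broken down into a series of binary problems using either

- One-vs-One (OVO)

- One-vs-Rest (OVR, also called One-vs-All)

OVO trains one classifier per pair of classes, K(K-1)/2 classifiers for K classes versus K for OVR; because of this computational drawback, practitioners usually prefer the OVR approach.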

Averaging techniques for metrics:

- macro - compute the metric independently for each class and then take the average - treating all classes equally

- weighted - average of the per-class scores weighted by the number of true samples of each class (support): sum(score_c * n_c) / N

- micro - aggregate the contributions of all classes to compute the average metric - micro-average is preferable if you suspect there might be class imbalance
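A hand-written sketch of the three averaging modes for F1 on a small made-up example, using F1 = 2TP / (2TP + FP + FN) per class; scikit-learn's `f1_score(y_true, y_pred, average=...)` is expected to give the same numbers, and `f1_averaged` is a hypothetical helper.

```python
import numpy as np


def f1_averaged(y_true, y_pred):
    """Macro, weighted and micro F1 for single-label multi-class predictions."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    labels = np.unique(np.concatenate([y_true, y_pred]))
    tp = np.array([np.sum((y_pred == c) & (y_true == c)) for c in labels])
    fp = np.array([np.sum((y_pred == c) & (y_true != c)) for c in labels])
    fn = np.array([np.sum((y_pred != c) & (y_true == c)) for c in labels])
    per_class_f1 = 2 * tp / (2 * tp + fp + fn)
    support = tp + fn  # number of true samples of each class
    return {
        "macro": per_class_f1.mean(),                                  # every class counts equally
        "weighted": np.sum(per_class_f1 * support) / support.sum(),    # weighted by class size
        "micro": 2 * tp.sum() / (2 * tp.sum() + fp.sum() + fn.sum()),  # pooled counts
    }


y_true = [0, 0, 0, 0, 0, 0, 1, 1, 2, 2]  # hypothetical labels, class 0 dominates
y_pred = [0, 0, 0, 0, 0, 1, 1, 0, 2, 2]
print(f1_averaged(y_true, y_pred))
```

For single-label multi-class data, micro-F1 equals accuracy, because every misclassified sample is counted once as a false positive and once as a false negative.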

5.2. links

6. Data Analysis [ə'nælɪsɪs]

(spelling: analysis, plural analyses, not "analises")

- Open course (ODS) https://habr.com/en/company/ods/blog/327250/

- Revealing hidden dependencies https://habr.com/en/post/339250/

- example https://www.kaggle.com/startupsci/titanic-data-science-solutions

- USA National institute of standards and technology (old) https://www.itl.nist.gov/div898/handbook/index.htm

Data analysis specialists are usually given tasks that need a clearer formulation, the choice of a quality metric, and a testing protocol for the final model. They reduce the customer's problem to a formal machine learning problem statement and check the quality of the built model on historical data and in an online experiment.

- text analysis and information retrieval

- collaborative filtering and recommender systems

- business analytics

- time series forecasting

6.1. TODO open-source tools

- FreeViz Orange 3 - data exploration, used for teaching

- PSPP - free alternative to IBM SPSS Statistics, statistical analysis in social science

- Weka - data analysis and predictive modeling

- Massive Online Analysis (MOA) - large-scale mining of data streams

6.2. dictionary

- intrinsic dimension - for a data set - the number of variables needed in a minimal representation of the data

- density -

- variance - a measure of the spread of a random variable's values around its expected value

6.3. Steps

6.3.1. CRISP-DM standard (Cross-Industry Standard Process for Data Mining/Data Science)

CRISP-DM methodology https://en.wikipedia.org/wiki/Cross-industry_standard_process_for_data_mining

Polls from 2002, 2004, 2007, and 2014 show that it was the leading methodology used by industry data miners

steps:

- Business Understanding

- Data Understanding (EDA) - see the steps in section 6.6 (EDA Exploratory analysis)

- Data Preparation

  - select data

  - clean data: missing data, data errors, coding inconsistencies, bad metadata

  - construct data: derived attributes, imputed missing values

  - integrate data: merge data

  - format data

- Modeling

  - select modeling technique

  - Generate Test Design: how we will test, select performance metrics

  - Build Model

  - Assess Model

  - Reframe Setting

- Evaluation

- Deployment

6.3.2. ASUM-DM Analytics Solutions Unified Method for Data Mining/Predictive Analytics 2015

https://developer.ibm.com/articles/architectural-thinking-in-the-wild-west-of-data-science/#asum-dm

- 2019 Model development process https://arxiv.org/pdf/1907.04461.pdf

- IBM Data and Analytics Reference Architecture

6.3.3. Development process

A development methodology (a model of the development process) defines clear steps.

- Waterfall model

  - Clear deadlines are set for the end of each stage.

  - The finished product is delivered to the customer only once, at the end of the project.

  - where it fits:

    - there is no uncertainty in the customer's requirements

    - projects where failure is very costly: every stage is tracked carefully, which reduces the risk of making a mistake

  - cons: too rigid, you cannot go back

- Agile

  - cons:

    - it is unclear how to split the work into steps

    - cycles can drag on: models are tried for a long time or parameters are tuned endlessly

    - documentation is not regulated. In DS projects, documentation and the history of all models used are very important: they save time and make it easier to return to an earlier solution.

- CRISP-DM

  - the project consists of sprints

  - the order of stages is not strictly fixed, and some stages can be swapped. Stages can run in parallel (for example, data preparation and data exploration can be done at the same time). Returning to previous stages is allowed.

  - key points of the project are recorded: plots, discovered patterns, hypothesis test results, the models used, and the metrics obtained at each iteration of the development cycle.

6.3.4. Descriptive analytics

- Normality check: the histogram should look like a normal distribution (Student's t-test requires this)

    from pandas.plotting import scatter_matrix

    # df is assumed to be a pandas DataFrame with numeric columns
    print(df.describe())
    # find correlations: correlation matrix
    print(df.corr())
    # scatter matrix: pairwise scatter plots, with histograms on the diagonal
    scatter_matrix(df)
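The normality check can also be done with a formal test, a sketch using SciPy's Shapiro-Wilk test (`scipy.stats.shapiro`) on two simulated samples; this is one common choice, not the only one, and a Q-Q plot is its visual counterpart.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
samples = {
    "normal": rng.normal(loc=10, scale=2, size=500),
    "exponential": rng.exponential(scale=2, size=500),  # strongly right-skewed
}
for name, sample in samples.items():
    statistic, p_value = stats.shapiro(sample)
    # small p-value (e.g. < 0.05): reject the hypothesis that the sample is normal
    print(name, round(statistic, 4), p_value)
```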

6.3.5. Time series analysis

- https://habr.com/en/post/207160/

- https://machinelearningmastery.com/feature-selection-time-series-forecasting-python/

- https://towardsdatascience.com/time-series-in-python-part-2-dealing-with-seasonal-data-397a65b74051

- Number of records per month

df['birthdate'].groupby([df.birthdate.dt.year, df.birthdate.dt.month]).agg('count')

- lag plot: x axis y_t, y axis y_{t+1}

- adjacent months: if many points lie on the diagonal, sales in adjacent months are similar

- x axis y_t, y axis y_{t+2}

- x axis: y_t of a month (total), y axis: y_t of the same month in another year

Auto regressive (AR) process: $y_t = c + a_1 y_{t-1} + a_2 y_{t-2} + \dots + \varepsilon_t$
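A sketch of estimating the AR coefficients by ordinary least squares on lagged values, under stated assumptions: the series is simulated from a hypothetical AR(2) with c = 0.5, a1 = 0.6, a2 = -0.2 and standard normal noise, and `simulate_ar2` and `fit_ar` are illustrative helpers (statsmodels has dedicated AR/ARIMA models for real work).

```python
import numpy as np


def simulate_ar2(c, a1, a2, n, seed=0):
    """Simulate y_t = c + a1*y_{t-1} + a2*y_{t-2} + eps_t with standard normal noise."""
    rng = np.random.default_rng(seed)
    y = np.zeros(n)
    for t in range(2, n):
        y[t] = c + a1 * y[t - 1] + a2 * y[t - 2] + rng.standard_normal()
    return y


def fit_ar(y, p):
    """Estimate [c, a1, ..., ap] by least squares on the lagged values."""
    n = len(y)
    # row for time t (t = p..n-1): [1, y_{t-1}, ..., y_{t-p}]
    lags = [y[p - k:n - k] for k in range(1, p + 1)]
    X = np.column_stack([np.ones(n - p)] + lags)
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    return coef


y = simulate_ar2(c=0.5, a1=0.6, a2=-0.2, n=5000)
print(fit_ar(y, p=2))  # should be close to [0.5, 0.6, -0.2]
```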

Measuring autocorrelation

- ACF is the (complete) autocorrelation function: it gives the autocorrelation of a series with its lagged values.

- PACF is the partial autocorrelation function: the correlation at a given lag after removing the effect of the shorter lags.

Make Stationary - remove seasonality and trend https://machinelearningmastery.com/feature-selection-time-series-forecasting-python/

    from matplotlib import pyplot
    from pandas import read_csv
    from statsmodels.graphics.tsaplots import plot_acf, plot_pacf

    series = read_csv('seasonally_adjusted.csv', header=None)
    # lag values along the x-axis, correlation (between -1 and 1) on the y-axis
    plot_acf(series, lags=150)
    # hard to grasp at first; in short, the same thing, but correlations at shorter lags do not interfere
    plot_pacf(series)
    pyplot.show()
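What `plot_acf` draws can be computed by hand, a sketch with NumPy of the usual sample ACF formula r_k = sum((y_t - m)(y_{t+k} - m)) / sum((y_t - m)^2), where lag 0 is always 1; `acf` here is a hypothetical helper, and its values are expected to correspond to statsmodels' `acf` with default settings.

```python
import numpy as np


def acf(y, max_lag):
    """Sample autocorrelation for lags 0..max_lag, normalized by the lag-0 sum of squares."""
    y = np.asarray(y, dtype=float)
    dev = y - y.mean()
    denom = np.sum(dev ** 2)
    return np.array([np.sum(dev[: len(y) - k] * dev[k:]) / denom for k in range(max_lag + 1)])


print(acf([1, 2, 3, 4, 5], max_lag=2))
```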

6.4. 2019 pro https://habr.com/ru/company/JetBrains-education/blog/438058/

https://compscicenter.ru/courses/data-mining-python/2018-spring/classes/

- mathematical statistics uses heads/tails outcomes to determine whether a coin is symmetric (fair)

- probability theory assumes heads and tails have the same probability and describes the random outcomes

Regression analysis:

- linear (ordinary)

- logistic

| covariance cov | correlation corr |
|---|---|
| measures the linear dependence of two random variables | covariance computed for standardized data |
| not invariant to a change of scale | invariant to a change of scale |
| dot(de_mean(x), de_mean(y)) / (n-1), where de_mean is the deviation from the mean | cov(X,Y) / (σx * σy), where σ is the standard deviation |
| lies between -∞ and +∞ | lies between -1 and +1 |

Both measure only linear relationships between two variables: when the correlation coefficient is zero, the covariance is also zero.
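The table's formulas in code, a sketch on made-up numbers; `de_mean`, `covariance` and `correlation` are illustrative helpers checked against `np.cov` and `np.corrcoef`. Rescaling x by 100 multiplies the covariance by 100 and leaves the correlation unchanged.

```python
import numpy as np


def de_mean(v):
    """Deviations from the mean."""
    v = np.asarray(v, dtype=float)
    return v - v.mean()


def covariance(x, y):
    return np.dot(de_mean(x), de_mean(y)) / (len(x) - 1)


def correlation(x, y):
    return covariance(x, y) / (np.std(x, ddof=1) * np.std(y, ddof=1))


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
print(covariance(x, y), np.cov(x, y)[0, 1])
print(correlation(x, y), np.corrcoef(x, y)[0, 1])

# scale dependence: covariance changes, correlation does not
x_scaled = np.multiply(x, 100)
print(covariance(x_scaled, y), correlation(x_scaled, y))
```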

6.4.1. Part 1

- 1 Histogram

- Synonyms: row, object, observation

- Synonyms: column, variable, characteristic of an object, feature

Columns can be on:

- a quantitative scale: kilograms, seconds, dollars

- an ordinal scale: runners' race results, 1st place, 2nd, 10th

- a nominal scale: codes or indices of something

Variational series (ordered sample[1]): the sequence obtained by arranging the original sequence of independent identically distributed random variables in non-decreasing order. The variational series and its members are order statistics.

Order statistics: a sample of independent identically distributed random variables sorted in non-decreasing order, together with its elements, each of which occupies a strictly defined place in the ranked set.

Quantile: the value that a given random variable does not exceed with a fixed probability. Expressed in percent, it is called a percentile. "The 90th percentile of body weight of newborn boys is 4 kg" means that 90% of boys are born weighing 4 kg or less.

- First quartile: 1/4, 25%. With 10 elements: 10×(1/4) = 2.5, round up to 3, so take the 3rd element in ascending order

- Second quartile: 2/4, 50% (the median)

A quartile is a quantile expressed not in percent but in quarters: 1/4 = 25%, 2/4 = 50%, 3/4 = 75%.
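A sketch of the nearest-rank rule from the quartile example, compared with NumPy's default `np.percentile`, which interpolates linearly between sorted values and therefore gives 3.25 instead of 3 for the first quartile of 1..10; `nearest_rank_quantile` is an illustrative name.

```python
import math

import numpy as np


def nearest_rank_quantile(values, q):
    """Nearest-rank method: sort, then take the ceil(q * n)-th smallest value (1-based)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered)))
    return ordered[rank - 1]


data = list(range(1, 11))  # 10 elements: 1..10
print(nearest_rank_quantile(data, 0.25))  # 10 * 1/4 = 2.5 -> rank 3
print(nearest_rank_quantile(data, 0.50))
# NumPy interpolates linearly between order statistics by default
print(np.percentile(data, [25, 50, 75]))
```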

Histogram: the number of values falling into each interval

- n_p: the number of values that fell into the interval

- n_p / (n * interval_width): the total area equals 1, which normalizes several histograms so they can be compared; as the number of trials grows, it approaches the probability density, which lets you compute probabilities

Kernel density estimation (KDE): estimates the distribution density by smoothing the data; the bandwidth rule can be 'scott' or 'silverman'
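The normalization n_p / (n * interval_width) checked in code on a simulated normal sample: it matches `np.histogram(..., density=True)` and the total area of the bars is 1. The KDE part assumes SciPy's `gaussian_kde`, whose `bw_method` accepts 'scott' (the default) or 'silverman'.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
sample = rng.normal(size=1_000)

counts, edges = np.histogram(sample, bins=20)
widths = np.diff(edges)
density = counts / (len(sample) * widths)  # n_p / (n * interval_width)
print(np.sum(density * widths))            # total area of the bars

density_np, _ = np.histogram(sample, bins=20, density=True)
print(np.allclose(density, density_np))    # same as NumPy's density=True

kde = stats.gaussian_kde(sample, bw_method="silverman")
print(kde.integrate_box_1d(-10, 10))       # the smoothed density also integrates to about 1
```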

- 2

Box plots (box-and-whisker plots): min, Q1, Q3, max, with the median shown as the thick red line. A box plot is a simplified histogram.

- drawback: it hides the humps (modes) of the histogram

- it does not show how many observations each sample contains

A typical city, receipt, or day on a server:

- remove the days that are outliers

- if the mean exceeds Q3 (75%), that is not very natural

- so the arithmetic mean is very sensitive to outliers, while the median is robust

Log-normal distribution: a distribution that becomes normal after taking the logarithm

Median: the middle value of the sample once it is sorted

Trimmed mean: sort the sample, drop 5% or 25% of the values at each end, and compute the arithmetic mean of the rest
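The following small illustration shows why the median and the trimmed mean resist outliers while the arithmetic mean does not. The numbers are made up. `scipy.stats.trim_mean` is given a 10% cut here, because with only 10 values a 5% cut would remove nothing.

```python
import numpy as np
from scipy import stats

# Nine typical server days plus one outlier day (made-up numbers)
load = np.array([10, 11, 9, 10, 12, 11, 10, 9, 11, 500])

mean = load.mean()                    # pulled far up by the single outlier
median = np.median(load)              # middle of the sorted sample
trimmed = stats.trim_mean(load, 0.1)  # drop 10% at each end, then average
```

Here the mean is 59.3, while the median and the 10% trimmed mean are both 10.5.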

Measuring the spread of data:

- sample variance; in practice the standard deviation (std) is used, i.e. the square root of the variance, because the root gives back the same units as the original data

- interquartile range

Confidence intervals: in which interval will the forecast fall with probability ~0.95?

- the interval width is based on the standard deviation: the larger the std, the wider the interval

Scatter plots

feature: new data that helps solve the task

Pie charts vs bar charts:

- lengths are perceived best

- angles are acceptable

- areas are perceived worst

- Clustering and hierarchical cluster analysis

Clustering is also known as:

- unsupervised pattern recognition

- stratification

- taxonomy

- automatic classification

Tools:

- hierarchical cluster analysis

- k-means: works well for large datasets

- Kohonen self-organizing maps (SOM)

- mixture of (normal) distributions

Examples:

- split users into groups

- identify market segments

Classification has two meanings:

- recognition: with known classes

- clustering: with unknown classes

Which method is better? The one whose result you managed to interpret and verify.

Cluster types:

- dense spherical

- diffuse (vapor-like) spherical

- ribbon-like (elongated)

- spiral

- one inside another

- Hierarchical cluster analysis

- Reduce the task to a geometric one: each object is a point

- Define a similarity measure (a distance)

- Euclidean distance d = sqrt((x1-y1)^2 + (x2-y2)^2)

- drawback: a difference in a single coordinate can dominate the distance

- Squared Euclidean distance d = (x1-y1)^2 + (x2-y2)^2

- can be used to strengthen the effect of longer distances

- does not form a metric space, as it does not satisfy the triangle inequality.

- City-block (Manhattan) distance d = |x1-y1| + |x2-y2|

- advantage: it is harder for a single variable to outweigh the others

The choice depends on the answer to the question "what does it mean for objects to be similar?" For beginners: Ward's method, nearest neighbor, and unweighted average.
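This example computes the three distances above with `scipy.spatial.distance` on two illustrative points. The last lines use three points on a line to show why the squared Euclidean distance violates the triangle inequality.

```python
import numpy as np
from scipy.spatial import distance

x = np.array([0.0, 0.0])
y = np.array([3.0, 4.0])

d_euclid = distance.euclidean(x, y)   # sqrt(3^2 + 4^2)
d_sq = distance.sqeuclidean(x, y)     # 3^2 + 4^2
d_city = distance.cityblock(x, y)     # |3| + |4|

# Points 0, 1, 2 on a line: d(a, c) = 4 but d(a, b) + d(b, c) = 1 + 1
a, b, c = np.array([0.0]), np.array([1.0]), np.array([2.0])
violates = distance.sqeuclidean(a, c) > distance.sqeuclidean(a, b) + distance.sqeuclidean(b, c)
```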

- Distances between clusters (linkage): https://scikit-learn.org/stable/modules/clustering.html#hierarchical-clustering

- Average linkage clustering (unweighted average distance): for clusters of 3 and 4 points, all 12 pairwise distances are averaged

- dense, vapor-like clusters

- Centroid method: the distance between cluster centers; it does not reveal when one cluster lies inside another, and cluster size has no effect

- Complete linkage clustering (farthest neighbor): the two most distant points

- Single linkage clustering (nearest neighbor): the two closest points

- ribbon-like clusters

- Ward's method: works well together with k-means

- dense spherical clusters

- it tends to create small clusters

Custom distance formulas can also be used, e.g. a similarity measure between websites based on their visitors

- Start: every point is its own cluster

- Select the two closest clusters and merge them

- Repeat until 1 cluster remains

Dendrogram (where to stop): a tree, practical for 5-100 records

- numbered clusters are placed at equal spacing along a horizontal line

- vertical lines: the distance between clusters at the moment of merging

- horizontal line: the moment of merging

Scree plot ("rocky scree") / elbow: determines the number of clusters; stop at the bend

- vertical axis: distance

- horizontal axis: merge number, at equal spacing
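Here is a sketch of agglomerative clustering with SciPy, assuming two synthetic dense blobs:

- `linkage` builds the merge table. Its `method` argument selects single, complete, average, centroid, or Ward linkage.
- The third column of that table holds the merge distances, which a dendrogram or scree plot displays.
- `fcluster` cuts the tree into a chosen number of clusters.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

rng = np.random.default_rng(42)
# Two dense, well-separated blobs of 20 points each (synthetic data)
X = np.vstack([rng.normal(0.0, 0.3, size=(20, 2)),
               rng.normal(5.0, 0.3, size=(20, 2))])

# method can be 'single', 'complete', 'average', 'centroid' or 'ward'
Z = linkage(X, method='ward')                     # (n - 1) x 4 merge table
merge_heights = Z[:, 2]                           # cluster distance at each merge
labels = fcluster(Z, t=2, criterion='maxclust')   # cut the tree into 2 clusters
```

`scipy.cluster.hierarchy.dendrogram(Z)` draws the tree with matplotlib. A sharp jump in `merge_heights` is the elbow where merging would stop.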

Analyst involvement (how subjective the method is):

- choice of variables

- standardization method

- mostly two options: 0-1 or mean = 0, std = 1

- distance between clusters

- distance between objects

- If there are no clusters, the procedure will find them anyway

The problem of ribbon-like clusters

- solution: the nearest-neighbor method (single linkage)

Drawback of hierarchical analysis: the matrix of pairwise distances must be kept in RAM

- so it cannot handle huge datasets

- k-means method

Only the Euclidean metric is used; for other metrics, use k-medoids

- Set K, the number of clusters, and K initial cluster points
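This is a minimal k-means run with scikit-learn on synthetic data, which is an assumption. K is chosen by hand. `n_init` repeats the algorithm from several sets of initial centers and keeps the best result.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)
# Two synthetic groups of 50 points around (0, 0) and (6, 6)
X = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)),
               rng.normal(6.0, 0.5, size=(50, 2))])

# K is set by hand; n_init restarts from 10 different sets of initial centers
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
centers = km.cluster_centers_
inertia = km.inertia_   # within-cluster sum of squared Euclidean distances
```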

- TODO 9 Forecasting with linear regression

Forecasting

- Is there a trend?

- Is there seasonality?

- additive: the corrections do not depend on the level of the series, f = f + g(t)

- multiplicative: the size of the correction depends on the level; corrections act as multipliers, f = f * g(t)

- Does the series change its character?

- outliers: sharp deviations

- drop them

- replace them with reasonable values

Rules of thumb:

- If you have data for fewer than 3 seasonal periods.

- If you have data for more than 5 seasonal periods, the earliest data are most likely outdated.

Seasonal decomposition: ???

Example of an additive model: y_t = a + b·t + c·t^2 + g(t) + ε_t

- a + b·t + c·t^2: trend

- g(t): seasonal component

- ε_t: error at each point in time

- not suitable for multiplicative seasonality

The logarithm turns a product into a sum

- trick: take the logarithm of the data first, log(y_t) = b·x_i + c(x_i) + ε

- potentiate: take the exponent to get forecasts for the original series
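The log trick can be sketched on a synthetic series. The series has an exponential trend multiplied by a quarterly season, and all numbers are assumptions:

- In log space the model becomes additive, so ordinary least squares with quarter dummies fits it.
- Exponentiating the predictions brings them back to the original scale.
- Quarter 0 serves as the base. It is deliberately not the peak quarter.

```python
import numpy as np

# Synthetic series: exponential trend times a quarterly multiplicative season
t = np.arange(24)
season = np.array([1.0, 1.3, 0.8, 0.9])   # made-up factors; quarter 1 is the peak
y = 100 * 1.02 ** t * season[t % 4]


def design(times):
    # Columns: intercept, time, dummies for quarters 1..3 (quarter 0 is the base)
    q = times % 4
    return np.column_stack([np.ones(len(times)), times,
                            q == 1, q == 2, q == 3]).astype(float)


# In log space the model is additive: log y = a + b*t + g(quarter)
coef, *_ = np.linalg.lstsq(design(t), np.log(y), rcond=None)

t_future = np.arange(24, 28)
forecast = np.exp(design(t_future) @ coef)   # potentiate: back to the original scale
truth = 100 * 1.02 ** t_future * season[t_future % 4]
```

The series is noise-free, so the forecast matches the true continuation almost exactly. With real data, the residuals of the log model would be checked before trusting it.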

It is better not to use the peak month as the base season

- 10

Linear regression is a poor forecaster, but it can work:

- with about 3 seasonal patterns

- for short time series

- when the seasonal patterns do not change

y: nominal scale

- quantitative scale (meters, rubles): regression

- ordinal scale

Y: quantitative

- The safe way is to treat Y as nominal; the risky but economical way is to treat it as quantitative (regression)

Regression: weak learner

sklearn.tree.DecisionTreeClassifier: when Y is on a nominal scale

CART (Classification And Regression Tree): solves both recognition (classification) and regression tasks

- used in tree ensembles

- we can understand how the model is built and learn something from it

- fast

Impurity: equals 0 when a node contains only crosses or only noughts, and is largest for a 50/50 mix of crosses and noughts (1/2 for the Gini index and the classification error). Options:

- entropy H1 = -∑pj*log2(pj)

- Gini index H2 = 1-∑pj^2=∑pj*(1-pj)

- classification error H3 = 1 - max(pj), where pj is the probability of belonging to class j; in practice, the share of class-j objects in the node

For each column, try threshold values and choose the column (and threshold) that makes the child nodes purest

Impurity decrease after a split (how much better it got; the informativeness of the variable):

- ΔH = H_parent - ( (n_left / n_parent)*H_left + (n_right / n_parent)*H_right)

- n_left: number of observations in the left node

- n_parent: number of observations in the parent

- H_left: impurity in the left child

- H_parent: impurity in the parent node
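The impurity measures and the impurity decrease ΔH can be written directly from the formulas above. This is an illustrative sketch with hypothetical helper names. It is not how scikit-learn implements these internally.

```python
import numpy as np


def entropy(p):
    p = p[p > 0]                 # treat 0 * log2(0) as 0
    return -np.sum(p * np.log2(p))


def gini(p):
    return 1.0 - np.sum(p ** 2)


def class_error(p):
    return 1.0 - np.max(p)


def proportions(labels):
    # p_j: share of class-j objects in the node
    _, counts = np.unique(labels, return_counts=True)
    return counts / counts.sum()


def impurity_decrease(parent, left, right, h=gini):
    # Delta H = H_parent - (n_left/n_parent * H_left + n_right/n_parent * H_right)
    n = len(parent)
    return (h(proportions(parent))
            - len(left) / n * h(proportions(left))
            - len(right) / n * h(proportions(right)))


parent = np.array([0, 0, 0, 0, 1, 1, 1, 1])    # a 50/50 node
left, right = parent[:4], parent[4:]            # a perfect split
gain = impurity_decrease(parent, left, right)   # 0.5 - 0 - 0
```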

Accuracy of 90% on the training set and 72% on the test set: overfitting

- TODO 11 Random Forest, Feature selection

sklearn.tree.DecisionTreeRegressor: when Y is on a quantitative scale

- better than linear regression when the dependence is nonlinear (a curved line)

prune: cutting back (pruning) trees

Trees are good as building blocks

From weak to strong alg:

- stacking (5%): X -> [Y] -> Y, a model that predicts from the predictions of other models (predictors)

- bagging (bootstrap aggregation): average

- 6.19.5

Random forest: a ready end-to-end solution

- 2D array: N is the number of rows, M the number of columns

- randomly choose a subset of rows and columns; each tree is trained on its own subset, which solves the decorrelation problem

- can overfit; control this with the maximum depth

Parameters:

- number of trees: make many, then reduce!

Problems:

- decorrelation: if two samples turn out similar to each other, the trees produce the same output, while the model is outwardly complex

- imbalanced samples: classes in different proportions

Column informativeness (importance) using random forests:

- sum the informativeness over all trees

- compare the out-of-bag error: shuffle a column and pass the data through the tree again

Class imbalance: when there are fewer 1s than 0s

- solution: repeat (oversample) the 1s

- better solution: increase the cost of an error on class 1, e.g. class_weight = {0: .1, 1: .9}. If the class_weight values do not sum to 1, this basically changes the regularization parameter.
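This sketch combines the ideas above with scikit-learn's `RandomForestClassifier` on synthetic imbalanced data (assumed, about 10% positives):

- `max_depth` limits overfitting.
- `class_weight` raises the cost of errors on class 1.
- `feature_importances_` sums impurity decreases over the trees.
- `permutation_importance` measures the score drop after shuffling a column.

Note that `permutation_importance` uses a held-out set rather than the out-of-bag samples mentioned above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# Imbalanced synthetic data: roughly 10% of class 1
X, y = make_classification(n_samples=2000, n_features=6, n_informative=3,
                           n_redundant=0, weights=[0.9, 0.1], random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, stratify=y, random_state=0)

rf = RandomForestClassifier(
    n_estimators=300,
    max_depth=8,                     # limit depth to curb overfitting
    class_weight={0: 0.1, 1: 0.9},   # errors on the rare class cost more
    oob_score=True,                  # out-of-bag accuracy estimate
    random_state=0,
).fit(X_tr, y_tr)

impurity_importance = rf.feature_importances_   # summed over trees, normalized to 1
perm = permutation_importance(rf, X_te, y_te, n_repeats=5, random_state=0)
perm_importance = perm.importances_mean         # mean score drop after shuffling each column
```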

6.4.2. Part 2

- 4 Forecasting with NN

Sliding windows: 1 … 12 -> 13, 2 … 13 -> 14, 3 … 14 -> 15

After 8-12 steps ahead, the predictions are no longer reliable: the error accumulates

To counter this, train two networks that predict:

- one month ahead

- two months ahead

Always use the most recent observations as the test set!

- linear: regression

- logistic: 2 classes

- softmax: k classes

How to extract multiplicative seasonality? One option:

- split the series into seasonal windows

- moving average

- sum of seasonal corrections / number of observations in the window = the part present in every observation of the smoothed series

- original series - smoothed series = seasonal corrections
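This is a sketch of classical decomposition for multiplicative seasonality on a synthetic quarterly series, where the factors are assumptions. It follows the steps above with one difference for the multiplicative case. The seasonal part is the ratio original / smoothed. The difference original - smoothed belongs to the additive case.

```python
import numpy as np

period = 4
t = np.arange(40)
true_factors = np.array([1.2, 0.9, 0.8, 1.1])    # made-up multiplicative season, mean 1
y = (50 + 2 * t) * true_factors[t % period]

# 1) Smooth: centered 2x4 moving average, so each window covers one full season
weights = np.array([0.5, 1, 1, 1, 0.5]) / period
smoothed = np.full(len(y), np.nan)
smoothed[2:-2] = np.convolve(y, weights, mode='valid')

# 2) Multiplicative case: original / smoothed (additive case: original - smoothed)
ratios = y / smoothed

# 3) Average the ratios for each position in the season, then rescale to mean 1
factors = np.array([np.nanmean(ratios[t % period == k]) for k in range(period)])
factors /= factors.mean()
```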

- 8 Factor analysis

Factor analysis was reincarnated as SVD decomposition and became useful for recommender systems

Tasks:

- Reducing the number of variables

- replacing the input variables with new artificial ones: factors

- Measuring the unmeasurable. Building new aggregate indicators.

- it may turn out that the factors measure the characteristic under study

- the original variables were chosen so as to measure an unmeasurable quantity indirectly

- Visualizing multidimensional observations (data projection)

- Describing the structure of relationships between variables, in particular identifying groups of interdependent variables.

- Overcoming multicollinearity in regression analysis: the factors are all orthogonal (uncorrelated).

Collinearity: if variables are linearly dependent, regression analysis breaks down: the inverse matrix cannot be found, or it is ill-conditioned, meaning that small changes in the matrix being inverted lead to large changes in its inverse, which is bad.

The correlation coefficient is close to 1

- 7 XGBoost

Tianqi Chen

Extreme Gradient Boosting

- 9

Identifying the dependency structure in data:

- correlation pleiades method: outdated

- factor analysis: models the structure of dependencies between variables (the correlation matrix)

- Principal component analysis (PCA) (in practice computed via SVD)

- Factor analysis proper, invented later: tries to reproduce the correlation matrix with a smaller number of factors

Factor analysis fits into a broader approach: searching for the best projections

Projection methods:

- Projection pursuit

- Multidimensional scaling

- Sammon maps

Correlation matrix (rows): [1, 0.8, 0.001], [0.8, 1, 0.001], [0.01, 0.01, 1]

Approaches:

- If the projection of the target variable is bimodal, that is good

- In the multidimensional space, lay an axis in the direction of the maximum spread of the data; this reduces the dimensionality of the data

Principal component analysis

- Let X1, X2, X3, … be a random vector

- Task 1: find Y = a11*X1 + a12*X2 + … such that the variance D(Y) is maximal. Y is a factor.

- if all a1j are multiplied by a constant c, the variance is multiplied by c^2, so an additional constraint is introduced:

- a1 * a1ᵀ = 1, i.e. a11^2 + a12^2 + a13^2 + … = 1

- the next Y is found the same way, with the additional condition corr(Y1, Y2) = 0

R is the covariance (correlation) matrix of the random vector X. The task reduces to:

- R*a = λ*a

- D(Yi) = λi

Stopping criteria (how many components to keep):

- ∑ λ / number of original columns (the share of total variance explained, for standardized data)

- drop components whose λ (variance) is less than 1, or less than 0.8

- scree plot / elbow
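This is a sketch of the eigenvalue formulation R·a = λ·a on synthetic data. The data are assumed: three columns driven by one hidden factor, plus one independent column. The code standardizes first, so the covariance matrix becomes the correlation matrix. It then checks that scikit-learn's `PCA` gives the same variance shares λ_i / ∑λ.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
f = rng.normal(size=500)                       # hidden common factor
X = np.column_stack([f + 0.3 * rng.normal(size=500),
                     f + 0.3 * rng.normal(size=500),
                     f + 0.3 * rng.normal(size=500),
                     rng.normal(size=500)])    # last column is independent

Xs = StandardScaler().fit_transform(X)         # standardize: covariance -> correlation
R = np.corrcoef(Xs, rowvar=False)              # correlation matrix
eigvals, eigvecs = np.linalg.eigh(R)           # solves R a = lambda a, ascending order
eigvals = eigvals[::-1]                        # largest first: D(Y_i) = lambda_i

explained_share = np.cumsum(eigvals) / X.shape[1]   # cumulative sum of lambda / number of columns
n_kaiser = int(np.sum(eigvals > 1))                 # components with lambda > 1

pca = PCA().fit(Xs)
```

`explained_share` gives the cumulative share of variance for the first criterion above. `n_kaiser` applies the λ > 1 rule.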

Factor analysis proper

- X1, X2 …: observed variables

- F1, F2 …: factors (common factors); there are fewer of them than X

- Xi = ai1*F1 + ai2*F2 + …

- X = A*F + U, U = (U1, U2, …): the part the factors fail to explain

- the smaller the variance of U, the better

```python
from pandas.plotting import scatter_matrix

scatter_matrix(df)  # df: the DataFrame under analysis
```

Factor analysis works well when many variables are correlated

By default the covariance matrix is used, so do not forget to standardize first.

```python
import pandas as pd
from sklearn import preprocessing

scaled = preprocessing.StandardScaler().fit_transform(df)
df_scaled = pd.DataFrame(scaled, columns=df.columns)
```

sklearn.decomposition.PCA - Linear dimensionality reduction using Singular Value Decomposition of the data to project it to a lower dimensional space. The input data is centered but not scaled for each feature before applying the SVD.

```python
from sklearn.decomposition import PCA

pca = PCA(n_components=3)
pca.fit(df_scaled)
# ... analyze the fitted pca here
res = pca.transform(df_scaled)
```

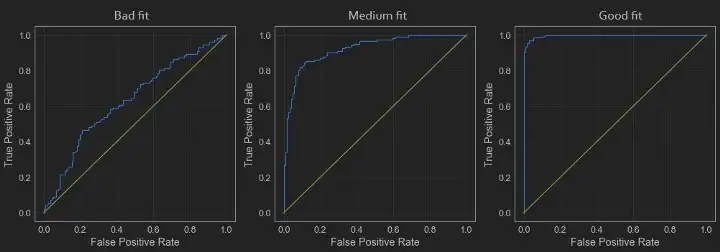

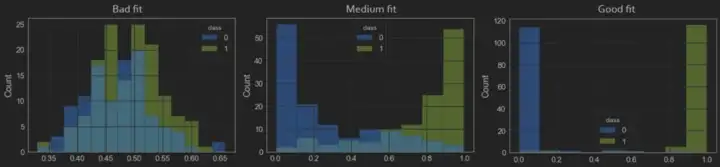

- 11 Classifier calibration

A classifier's output is not a probability but a ranking; the actual probability of the class behind each score is unknown

Calibration finds the probability corresponding to a ranking score; it is best done on a validation set

calibration plot https://changhsinlee.com/python-calibration-plot/

- Split the predictions into bins

- x - bins, y - proportion of true outcomes

The more volatile the curve, the more doubts about the model's quality

To remove the volatility:

- isotonic regression

- Platt's method: among logistic curves, find the one that approximates the calibration curve

Classification with several classes is reduced to two-class problems: the first class vs all others, the second vs all others, and so on
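This is a sketch of a calibration plot and Platt scaling with scikit-learn. It assumes a Gaussian naive Bayes model on synthetic data. `calibration_curve` returns, for each bin, the share of true outcomes and the mean predicted probability. `CalibratedClassifierCV` with `method='sigmoid'` is Platt's method, and `method='isotonic'` uses isotonic regression instead.

```python
from sklearn.calibration import CalibratedClassifierCV, calibration_curve
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = make_classification(n_samples=3000, n_features=10, random_state=0)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.5, random_state=0)

models = {
    'raw': GaussianNB().fit(X_tr, y_tr),
    # Platt scaling: fit a logistic curve to the scores, with internal 5-fold CV
    'platt': CalibratedClassifierCV(GaussianNB(), method='sigmoid', cv=5).fit(X_tr, y_tr),
}

curves = {}
for name, model in models.items():
    prob = model.predict_proba(X_val)[:, 1]
    # y: share of positives in each bin, x: mean predicted probability in that bin
    frac_pos, mean_pred = calibration_curve(y_val, prob, n_bins=10)
    curves[name] = (mean_pred, frac_pos)
```

To draw the calibration plot, plot each `(mean_pred, frac_pos)` pair against the diagonal y = x. A well-calibrated model stays close to that diagonal.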

- 12 Logistic regression (logit regression, binary regression)

A logistic function of a linear combination is the same thing as a neuron; a network is a set of jointly trained logistic regressions with nonlinear activation functions.

For recognition tasks (y ∈ {0, 1})

At present it can be better only in:

y = a0 + ∑ ai*Xi, where y is a probability

Competitors differ in the activation used instead of 1/(1+e^-x):

- linear

- probit regression

- logit regression

- Poisson regression

- other

Recognition (classification) tools:

- naive Bayes classifier

- discriminant analysis

- classification trees

- k-nearest neighbors

- feedforward neural network

- SVM

- Random forests

- Gradient boosting machine

https://www.youtube.com/watch?v=VRAn1f6cUJ8

Scree plot / elbow

- code

```python
# 1. First look at the data
import pandas as pd
import matplotlib.pyplot as plt
from numpy import linspace
from scipy.stats import gaussian_kde

AH = pd.read_csv('a.csv', header=0, index_col=False)
print(AH.head())                   # first rows
print(AH.columns)                  # column names
print(AH.shape)                    # (rows, columns)
print(AH.dtypes)                   # column types
print(AH.describe(include='all'))  # per column: unique, mean, std, min, quantiles

# Look for anomalies!
AH['SalePrice'].hist(bins=60, density=True)
my_density = gaussian_kde(AH['SalePrice'])
x = linspace(AH['SalePrice'].min(), AH['SalePrice'].max(), 200)
plt.plot(x, my_density(x), 'g')  # green line: kernel density estimate
# Look at the areas: small detached peaks reveal outliers;
# the distribution may turn out to be normal

# 2. Several histograms on one plot are MISLEADING unless normalized
AH.groupby('MS Zoning')['SalePrice'].plot.hist(alpha=0.6, density=True)
plt.legend()
# Still not satisfying, so use a box plot instead
ax = AH.boxplot(column='SalePrice', by='MS Zoning')
print(AH['MS Zoning'].value_counts())  # number of observations in each group

# 3. Scatter matrix: smoothed histograms on the diagonal; the classes are Colone and Coltwo
# Identify the variables that differ most between the classes
from pandas.plotting import scatter_matrix

df = pd.read_csv(...)
colors = {'Colone': 'green', 'Coltwo': 'red'}
scatter_matrix(df,
               figsize=(6, 6),                  # figure size
               diagonal='kde',                  # density instead of histogram on the diagonal
               c=df['Status'].replace(colors),  # class colors
               alpha=0.2)                       # point transparency
# Two histograms for one column (Diagonal), one per class
df.groupby('Status')['Diagonal'].plot.hist(alpha=0.6, bins=10, range=[0, 500000])
plt.legend()
# Scatter plot of two columns, colored by class
df.plot.scatter(x='Top', y='Bottom', c=df['Status'].replace(colors))
```

6.5. EXAMPLES OF ANALYSIS

6.5.1. dobrinin links

https://habr.com/ru/post/204500/

It simply compares 4 different classifiers on 280 thousand records split 2/3 and 1/3. All of them show very poor results.

https://ai-news.ru/2018/08/pishem_skoringovuu_model_na_python.html https://sfeducation.ru/blog/quants/skoring_na_python

Ordinary preprocessing, a random forest classifier, cross-validation by AUC, and a Bagging ensemble over the forest.

https://www.youtube.com/watch?v=q9I2ozvHOmQ

An ad for mlbootcamp.ru, a Kaggle clone. The prize is a watch and a T-shirt. There is almost nothing useful on the site.

A copy of the first link: https://habr.com/en/post/270201/

A very interesting article that uses feature engineering and tree boosting in Microsoft Azure Machine Learning Studio. Standard pandas tools were still needed.

6.5.2. https://github.com/firmai/industry-machine-learning

Consumer Finance

- Loan Acceptance - Classification and time-series analysis for loan acceptance. (Classical statistical analysis to identify a company's critical indicators: an SVM binary classifier for bankruptcy and ARIMA to forecast quotes; the forecasts are combined to estimate growth or decline. A random forest binary classifier is used to find the most important indicators.)

- Predict Loan Repayment - Predict whether a loan will be repaid using automated feature engineering. (An ad for the Featuretools library for automatic feature engineering.)

- Loan Eligibility Ranking - System to help the banks check if a customer is eligible for a given loan. (Distinguishes repaid loans from unpaid ones. Preprocessing replaces missing values with means. Perceptron, random forest, and decision tree for classification. The results are not validated and are possibly overfitted.)

- Home Credit Default (FirmAI) - Predict home credit default. (Fancy pandas tricks, a LightGBM classifier with the AUC metric, StratifiedKFold cross-validation. The result is the mean feature_importance across folds.)

- Mortgage Analytics - Extensive mortgage loan analytics. (Time-series analysis of mortgage loans: testing the null hypothesis that the quantity is a random walk; autocorrelation. Statistics: sums; probability plots; importance via ExtraTreeClassifier; scatter plots; correlation matrix; dimensionality reduction with principal component analysis. Predictions of the interest rate and the number of loans with ARIMA, Linear Regression, Logistic Regression, SVM, SVR, Decision Tree, RF, k-NN. The best are k-NN and RandomForest.)

- Credit Approval - A system for credit card approval. (Logistic regression, a lot of analysis, 690 records, 2/3 for training and 1/3 for testing. Accuracy: 0.84, Gini: 0.814, which is fairly low.)

- Loan Risk - Predictive model to help to reduce charge-offs and losses of loans. (Apache Spark and H2O, www.h2o.ai, a platform for distributed ML on Hadoop or Spark with an AutoML implementation.)

- Amortisation Schedule (FirmAI) - Simple amortisation schedule in python for personal use. (Computes a repayment schedule. Line and bar charts.)

6.6. EDA Exploratory analysis

according to CRISP: distribution of key attributes, looking for errors in the data, relationships between pairs or small numbers of attributes, results of simple aggregations, properties of significant subpopulations, and simple statistical analyses

- time period

- boxplot

- histogram

- missing values

- Bivariate Exploration - impact on target: sns.violinplot

TODO https://www.kaggle.com/pavansanagapati/a-simple-tutorial-on-exploratory-data-analysis

6.6.1. types of comparison

- goodness of fit - whether an observed frequency distribution differs from a theoretical distribution.

- homogeneity - compares the distribution of counts for two or more groups using the same categorical variable

- independence - whether two categorical variables, expressed in a contingency table, are independent.

degrees of freedom (df): 1) the number of values in the final calculation of a statistic that are free to vary; 2) the number of values that are free to vary as you estimate parameters. It is the number of "free" quantities needed to fully determine a vector, and it can be not only a natural number but any real number.

- For Two Samples: df = (N1 + N2) - 2

Example: [2, 10, 11]. Estimating the mean uses up one degree of freedom, so two remain:

- mean = (2 + 10 + 11)/3 = 23/3 ≈ 7.67

- once the mean and any two values are known, the third is fixed: 11 = 3·(23/3) - 10 - 2

6.6.2. skewness and kurtosis

```python
import numpy as np
import matplotlib.pyplot as plt
from scipy.stats import kurtosis, skew

# -- toy normal distribution
mu, sigma = 0, 1  # mean and standard deviation
x = np.random.normal(mu, sigma, 1000)

# -- calc skewness and kurtosis
print('excess kurtosis of normal distribution (should be 0): {}'.format(kurtosis(x)))
print('skewness of normal distribution (should be 0): {}'.format(skew(x)))

# -- plot
plt.hist(x, density=True, bins=40)  # density=False would make counts
plt.ylabel('Probability')
plt.xlabel('Data')
plt.show()
```

excess kurtosis of normal distribution (should be 0): -0.05048549574403838

skewness of normal distribution (should be 0): 0.2162053890291638

6.6.3. TODO normal distribution test

https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.normaltest.html

D'Agostino and Pearson's test: a statistic close to 0 (and a high p-value) is consistent with a normal distribution

scipy.stats.normaltest(df['trip_duration_log'])

- statistic - s^2 + k^2, where s is the z-score returned by skewtest and k is the z-score returned by kurtosistest.

- pvalue: a 2-sided chi-squared probability for the hypothesis test. If it is low, a statistic this large would be unlikely for normally distributed data, so normality is rejected.

- The inverse is not true: a high p-value is not evidence for the null hypothesis.

normal distribution: a symmetrical bell curve, described by the Gaussian function (Gaussian distribution)

- $f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}$

- σ: standard deviation, μ: mean

Null Hypothesis: the observed difference is due to chance alone.

null distribution: the distribution of the test statistic when the null hypothesis is true. Here it is not a normal distribution: for a large number of samples it equals the chi-squared distribution with two degrees of freedom.

```python
import numpy as np
from scipy.stats import normaltest

# -- toy normal distribution
mu, sigma = 0, 1  # mean and standard deviation
x = np.random.normal(mu, sigma, 100)

# -- D'Agostino-Pearson test; null hypothesis: the sample comes from a normal distribution
print('Test whether a sample differs from a normal distribution: {}'.format(normaltest(x)))
```

Test whether a sample differs from a normal distribution: NormaltestResult(statistic=4.104513172099168, pvalue=0.12844472972455415)

6.6.4. Analysis for regression model:

- Linearity: assumes that the relationship between predictors and target variable is linear

- No noise: eg. that there are no outliers in the data

- No collinearity: if you have highly correlated predictors, it’s most likely your model will overfit

- Normal distribution: more reliable predictions are made if the predictors and the target variable are normally distributed

- Scale: for distance-based algorithms, predictors should be scaled, e.g. with a standard scaler

6.6.5. quartile, quantile, percentile

- Percentiles: range from 0 to 100.

- Quartiles: Range from 0 to 4.

- Quantiles: Range from any value to any other value.

percentiles and quartiles are simply types of quantiles

- 4-quantiles are called quartiles.

- 5-quantiles are called quintiles.

- 8-quantiles are called octiles.

- 10-quantiles are called deciles.

- 100-quantiles are called percentiles.

6.7. gradient boostings vs NN

- NN are very efficient for dealing with high dimensional raw data

- GBM can handle missing values

- GBM do not need GPU

- NN: with big data, "the more the merrier"; GBM: more data can mean a bigger error

6.8. theory

- numerical - almost all values are unique

- binary - only 2 values [red, blue, red, blue]

- categorical - has frequent values [red, red, blue, yellow, black]

ordinal or nominal

6.8.1. terms

proportion: a mathematical statement expressing the equality of two ratios, a/b = c/d

6.8.2. 1 column describe

- count - total count in each category of the categorical variables

- average: mean, median

- mode: multimodality indicates that the dataset does not follow a normal distribution.

- for categorical data: counts (e.g. 6, 2, 6, 6, 8, 9, 9, 9, 0; the modes are 6 and 9).

- for numerical data: the peaks of the histogram

- .groupby(['Outlet_Type']).agg(lambda x: x.value_counts().index[0])

- .mode()

- Measures of Dispersion

- Range - max - min

- Quartiles and Interquartile (IQR) - difference between the 3rd and the 1st quartile

- Standard Deviation - tells us how much all data points deviate from the mean value

- .std()

- Skewness

- skew(): measures how asymmetric the data distribution is compared with the symmetric Gaussian.

6.8.3. categories of analysis

- Descriptive analysis - What happened.

- It does this by ordering, manipulating, and interpreting raw data from various sources to turn it into valuable insights to your business.

- present our data in a meaningful way.

- Exploratory analysis - How to explore data relationships.

- to find connections and generate hypotheses and solutions for specific problems

- Diagnostic analysis - Why it happened.

- Predictive analysis - What will happen.

- Prescriptive analysis - How will it happen.

6.8.4. methods

- cluster analysis - grouping a set of data elements so that elements in the same group are more similar to each other than to elements in other groups

- Cohort analysis - behavioral analytics that breaks the data in a data set into related groups before analysis

- to "see patterns clearly across the life-cycle of a customer (or user), rather than slicing across all customers blindly without accounting for the natural cycle that a customer undergoes."

- Regression analysis - how a dependent variable's value is affected when one (linear regression) or more independent variables (multiple regression) change or stay the same

- you can anticipate possible outcomes and make better business decisions in the future

- Factor analysis - dimension reduction

- Funnel analysis - analyzing a series of events that lead towards a defined goal (a funnel)

6.8.5. correlation

any statistical relationship between two random variables

- Pearson's product-moment coefficient

sensitive only to a linear relationship between two variables

$\mathrm{corr}(X,Y) = \frac{\mathrm{cov}(X,Y)}{\sigma_X \sigma_Y} = \frac{E[(X - \mu_X)(Y - \mu_Y)]}{\sigma_X \sigma_Y}$, if $\sigma_X \sigma_Y > 0$, where E is the expected value operator.

- Spearman's rank correlation

have been developed to be more robust than Pearson's, that is, more sensitive to nonlinear relationships
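Here is a quick check of the difference on a monotonic but nonlinear relationship, using synthetic data. Spearman's coefficient works on ranks, so it reaches 1. Pearson's stays below 1, because the relationship is not linear.

```python
import numpy as np
from scipy.stats import pearsonr, spearmanr

x = np.linspace(0, 5, 50)
y = np.exp(x)                       # monotonic but strongly nonlinear

r_pearson, _ = pearsonr(x, y)       # only captures the linear part
rho_spearman, _ = spearmanr(x, y)   # ranks of x and y coincide
```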

6.9. Feature Preparation

Ideally the data are i.i.d. (independent and identically distributed), which simplifies computations.

- get information from string columns

- encoding

- scaling.

- StandardScaler if there is no skew.

- If there is skew: clipping, log scaling, or normalization.

- If you do not know whether there is skew: MinMaxScaler.

- it is very sensitive to outliers, so they must be clipped

- for categorical values get

6.9.1. terms

- nominal features are categoricals with values that have no order

- binary symmetric and asymmetric attributes - man and woman, positive results in medical is more significant than a negative

- EDA - exploratory data analysis

- OHE - one-hot-encoding

transformations - preserve rank of the values along each feature

- the log of the data or any other transformation of the data that preserves the order, because what matters is which ones have the smallest distance.

- normalization - process of converting a variable's actual range of values into: -1 to +1, 0 to 1, the normal distribution

scaling - shifts the range of a label and/or feature value.

- linear scaling - combination of subtraction and division to replace the original value with a number between -1 and +1 or between 0 and 1.

- logarithmic scaling

- Z-score normalization or standard scaling

6.9.2. Выбросы Outliers

- quantile

sklearn's scalers differ in how sensitive they are to outliers

```python
# Keep only the rows strictly between the 1% and 99% quantiles of "col"
q_low = df["col"].quantile(0.01)
q_hi = df["col"].quantile(0.99)
df_filtered = df[(df["col"] < q_hi) & (df["col"] > q_low)]
```

```python
import pandas as pd


def outliers(p):
    """Drop rows whose value in any (numeric) column lies outside the 0.1%-99.9% quantile range."""
    df: pd.DataFrame = pd.read_pickle(p)
    # print(df.describe().to_string())
    for c in df.columns:
        q_low = df[c].quantile(0.001)
        q_hi = df[c].quantile(0.999)
        df_filtered = df[(df[c] > q_hi) | (df[c] < q_low)]  # outlier rows for this column
        df.drop(df_filtered.index, inplace=True)
    # print(df.describe().to_string())
    p = 'without_outliers.pickle'
    pd.to_pickle(df, p)
    print("ok")
    return p
```

- TODO

6.9.3. IDs encoding with embaddings

6.9.4. Categorical encode

- Replacing values

- Encoding labels - to numbers 0 … n_categories-1 (pandas: pd.factorize(data) or data.astype('category').cat.codes)

- One-Hot encoding - each category value into a new column and assign a 1 or 0 (pandas: pd.get_dummies(data, drop_first=True))

- Binary encoding

- Backward difference encoding

- Miscellaneous features

- MeanEncoding: replace each category with the mean of a binary target [1, 0] within that category, e.g. A, B -> 0.7, 0.3

Pros of MeanEncoding:

- Capture information within the label, therefore rendering more predictive features

- Creates a monotonic relationship between the variable and the target

Cons of MeanEncoding:

- It may cause over-fitting in the model.
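This is a minimal mean-encoding sketch with pandas on made-up data. To limit the overfitting mentioned above, compute the means on the training data only, or out of fold. Then map them onto the validation and test rows.

```python
import pandas as pd

# Toy data (made up): category A has 7/10 positives, B has 3/10
df = pd.DataFrame({
    'cat': ['A'] * 10 + ['B'] * 10,
    'target': [1] * 7 + [0] * 3 + [1] * 3 + [0] * 7,
})

# Mean (target) encoding: replace each category with the target mean of its group
means = df.groupby('cat')['target'].mean()   # A -> 0.7, B -> 0.3
df['cat_mean_enc'] = df['cat'].map(means)
```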

6.9.5. отбор признаков feature filtrating

Remove:

- variables that correlate with the target: only manually

- constant values

- unimportant features: the model takes noise for signal and overfits; they also add computational cost

- low-variance features tend to be worse than high-variance ones: drop features whose variance is below a certain threshold

- if features are clearly useless in a simple model, do not drag them into a more complex one.

- Exhaustive Feature Selector

In my experience, for a specific model it is best to remove:

- features with low importance, and, among mutually correlated features, those with low importance.

6.9.6. imbalanced classes and sampling

- very infrequent features are hard to learn

6.9.7. Skewed numerical feature

- Linear Scaling x'=(x - x_min)/(x_max - x_min) - When the feature is more-or-less uniformly distributed across a fixed range.

- Clipping if x > max, then x' = max. if x < min, then x' = min - When the feature contains some extreme outliers.

- Log Scaling x' = log(x) - When the feature conforms to the power law.

- Z-Score or standard scaling - When the feature distribution does not contain extreme outliers (according to Google).
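The four transformations above are applied here with NumPy to a made-up feature that has one extreme value. The clipping bound of 10 is an arbitrary assumption.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0, 100.0])     # one extreme value (made up)

linear = (x - x.min()) / (x.max() - x.min())   # linear scaling into [0, 1]
clipped = np.clip(x, None, 10.0)               # clipping at an assumed max of 10
logged = np.log(x)                             # log scaling, requires x > 0
zscore = (x - x.mean()) / x.std()              # Z-score: mean 0, std 1
```

The outlier squeezes the four ordinary values of `linear` into [0, 0.031]. This is why linear scaling suits roughly uniform features better.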

6.9.8. missing values: NaN, None

pandas: data.info() shows the number of non-null values in each column

- missing flag

```python
import numpy as np

# Add a 0/1 "is missing" column for every column that contains NaNs
for feature in list(df.columns):
    if df[feature].hasnans:
        df["is_" + feature + "_missing"] = np.isnull(df[feature]) * 1
```

- The problem of choosing a typical value

- replace NaN with a new value if the missing entries form a separate group: .fillna(0)

- A good practice for handling missing data is to generate binary features. They take the value 0 or 1, indicating whether the feature value is present in the record or missing.

- trimmed mean: sort and drop values at both ends

- median - data['Age'] = data.Age.fillna(data.Age.median())

- q3-q1

- sd ?

- prediction: the best method

- mode: the most frequent value

Other common practices:

- Deleting records with missing values. This is usually done when the number of missing values is very small compared with the whole sample and the missingness itself is random. The drawback is that errors arise when the test data contain the same kind of gaps.

- Substituting the mean, median, or most frequent value of the feature.

- Using various predictive models to predict the missing value from the rest of the dataset.

- scikit-learn: IterativeImputer

- autoimpute
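This sketch shows two scikit-learn options on a tiny made-up array:

- `SimpleImputer` with `add_indicator=True` fills in the median and appends the binary missing-value columns described above.
- `IterativeImputer` predicts each missing value from the other columns. It is still experimental, so it needs the extra enabling import.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401, enables IterativeImputer
from sklearn.impute import IterativeImputer, SimpleImputer

X = np.array([[1.0, 2.0],
              [2.0, 4.0],
              [3.0, np.nan],
              [np.nan, 8.0],
              [5.0, 10.0]])

# Median imputation plus one 0/1 "was missing" column per feature with gaps
simple = SimpleImputer(strategy='median', add_indicator=True).fit_transform(X)

# Model-based imputation: each column is regressed on the others, iteratively
iterative = IterativeImputer(random_state=0).fit_transform(X)
```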

6.9.9. numerical data to bins

there might be fluctuations in those numbers that don't reflect patterns in the data, which might be noise

A new column with 4 age bins [0, 1, 2, 3]:

```python
data['CatAge'] = pd.qcut(data.Age, q=4, labels=False)
data = data.drop(['Age', 'Fare'], axis=1)  # drop the original columns
```

simple map

df['KIDSDRIV'] = df['KIDSDRIV'].map({0:0,1:1,2:2,3:2,4:2})

Split into bins:

df['HOMEKIDS']= pd.cut(df['HOMEKIDS'], bins=[0,1,2,3,4,10], labels=[0,1,2,3,4], include_lowest=True, right=True).astype(float)

6.9.10. Sparse Classes

Sparse classes (in categorical features) are those that have very few total observations.

- they can lead to model overfitting

If there is one big class and a thousand tiny ones, merge the small ones into bigger classes or simply into "Others".
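A minimal sketch of merging rare categories into "Others" by a frequency threshold. The helper `group_rare` and the threshold `min_count=2` are hypothetical choices for illustration:

```python
import pandas as pd

def group_rare(s: pd.Series, min_count: int, other: str = "Others") -> pd.Series:
    """Replace categories seen fewer than min_count times with `other` (hypothetical helper)."""
    counts = s.value_counts()
    rare = counts[counts < min_count].index
    # keep the value where it is not rare, otherwise substitute `other`
    return s.where(~s.isin(rare), other)

cities = pd.Series(["Moscow"] * 5 + ["Kazan"] * 3 + ["Tver", "Omsk", "Sochi"])
grouped = group_rare(cities, min_count=2)
```

The counts should be computed on the training data; categories that appear only in the test data can then be mapped to the same "Others" bucket.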

6.9.11. Feature engineering

Strongly depends on the model: different models can synthesize different operations by themselves.

- linear models: sums of columns create multicollinearity, which gets in the way

- neural networks easily synthesize +, -, *, counts, diff, power, rational polynomials (bad at ratio and …)

Clustering as a source of new features
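A minimal sketch of turning a clustering into features, assuming k-means on synthetic data: the cluster index becomes a categorical feature and the distances to the cluster centers become numeric ones. The number of clusters and the data are assumptions:

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)
# Two well-separated synthetic blobs.
X = np.vstack([rng.normal(0, 0.5, (50, 2)), rng.normal(5, 0.5, (50, 2))])

kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
cluster_id = kmeans.labels_            # categorical feature: cluster index
dist_to_centers = kmeans.transform(X)  # numeric features: distance to each center
X_new = np.column_stack([X, cluster_id, dist_to_centers])
```

As with any learned transformation, the clustering should be fitted on the training data and applied to new data with `predict` / `transform`.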

- Why?

For example, two kinds of points viewed in polar vs. Cartesian (rectangular) coordinates

- if the class boundary is a circle, it is harder for the model (in Cartesian coordinates)

When the boundary follows an operation that the model finds hard to synthesize

- https://arxiv.org/pdf/1701.07852.pdf

- Counts ?

- Differences (diff) = x1-x2

- Logarithms (log) = log(x)

- Polynomials (poly) = 1 + 5x + 8x^2

- Powers (pow) = x^2

- Ratios (ratio) = x1/x2

- Rational Differences (ratio_diff) = (x1-x2)/(x3-x4)

- Rational Polynomials = 1/(5x + 8x^2)

- Root Distance ?

- Square roots (sqrt) = sqrt(x)

- Quadratic equation (quad) = |(-b + sqrt(b^2-4ac))/(2a) - (-b - sqrt(b^2-4ac))/(2a)|

- Heaton https://towardsdatascience.com/importance-of-feature-engineering-methods-73e4c41ae5a3
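A small sketch of the polar-coordinates example from the "Why?" notes, on synthetic data: two classes on concentric rings cannot be split by one straight line in (x, y), but the engineered radius r = sqrt(x^2 + y^2) separates them with a single threshold. The ring radii and the 1.5 threshold are assumptions:

```python
import numpy as np

rng = np.random.default_rng(42)
n = 200
# Class 0: inner disc (radius < 1); class 1: outer ring (radius in [2, 3)).
radius = np.concatenate([rng.uniform(0, 1, n), rng.uniform(2, 3, n)])
angle = rng.uniform(0, 2 * np.pi, 2 * n)
x, y = radius * np.cos(angle), radius * np.sin(angle)
label = np.array([0] * n + [1] * n)

# In (x, y) the class boundary is a circle; the engineered feature r turns it
# into a single threshold that even a linear model or a one-split tree can learn.
r = np.sqrt(x ** 2 + y ** 2)
pred = (r > 1.5).astype(int)
```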

Neural networks fail at synthesizing:

- ratio_diff

- ratio

- quad - ?

- log - ?

Random Forest

- ratio_diff

- quad

- count

BDT (Gradient Boosted Decision Trees)

- ratio_diff

- ratio

- counts

- quad

- Time Series

- https://machinelearningmastery.com/basic-feature-engineering-time-series-data-python/

- https://www.analyticsvidhya.com/blog/2019/12/6-powerful-feature-engineering-techniques-time-series/

- parts of date

- quarter, type of year

- logical indicator - first/last day of …

- Lag features: the target value at t-1 becomes lag_1, i.e. the series shifted by one step: lag_1 = NaN, 1, 2, 3, 8, …

- Rolling window: a statistic over past values with a fixed window size

- Expanding window feature: takes all past values into account

- external dataset - holidays, weather
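A minimal pandas sketch of the date-part, lag, rolling and expanding features above, on a hypothetical daily series whose values reproduce the lag_1 example. The `shift(1)` before rolling/expanding keeps the current value out of its own feature (to avoid target leakage); the window size 3 is an assumption:

```python
import pandas as pd

# Hypothetical daily series.
ts = pd.DataFrame(
    {"Count": [1, 2, 3, 8, 5, 6]},
    index=pd.date_range("2021-03-29", periods=6, freq="D"),
)

# Parts of the date and a logical indicator
ts["month"] = ts.index.month
ts["quarter"] = ts.index.quarter
ts["dayofweek"] = ts.index.dayofweek
ts["is_month_end"] = ts.index.is_month_end.astype(int)

# Lag feature: the value at t-1
ts["lag_1"] = ts["Count"].shift(1)

# Rolling window: mean of the previous 3 values
ts["rolling_mean_3"] = ts["Count"].shift(1).rolling(window=3).mean()

# Expanding window: mean of all past values
ts["expanding_mean"] = ts["Count"].shift(1).expanding().mean()
```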

lag correlations:

from statsmodels.graphics.tsaplots import plot_acf, plot_pacf

plot_acf(data['Count'], lags=10)

plot_pacf(data['Count'], lags=10)
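A quick numeric check of lag correlation without plotting, using pandas `Series.autocorr`, which is the Pearson correlation between the series and its lagged copy. This differs slightly from the statsmodels ACF estimate (which uses the full-series mean and variance), so treat it as an approximation. The toy series with period 2 is an assumption:

```python
import pandas as pd

# Toy series with a period of 2
s = pd.Series([1, 5, 1, 5, 1, 5, 1, 5], dtype=float)

lag1 = s.autocorr(lag=1)  # values one step apart move in opposite directions
lag2 = s.autocorr(lag=2)  # values one full period apart are identical
```

A strong peak at lag k suggests adding lag_k as a feature.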

- tools

- featuretools

- Jupyter notebook https://github.com/brynmwangy/Beginner-Guide-to-Automated-Feature-Engineering-With-Deep-Feature-Synthesis./blob/master/Automated_Feature_Engineering.ipynb

- article https://medium.com/@rrfd/simple-automatic-feature-engineering-using-featuretools-in-python-for-classification-b1308040e183

- https://www.kaggle.com/willkoehrsen/automated-feature-engineering-basics/notebook

- doc https://docs.featuretools.com/en/stable/generated/featuretools.dfs.html#featuretools.dfs

- TODO Informationsfabrik

- TODO TPOT

- tsfresh - time series

- ATgfe

- featuretools

- on featuretools

- by hand

- ratio

- (A*c)/B = (A/B)*c

- (A +/- c)/B = A/B +/- c/B: the added term c/B depends on B, so the larger c is, the more weight B has in the ratio

- if both A and B have positive and negative values, the sign of A/B separates rows where A and B have the same sign from rows where their signs differ

- if A has positive and negative values but B has only one sign, the ratio is clearly separated by the sign of A

- if A has positive and negative values but B has only one sign, you cannot use (-A)/B
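A small NumPy check of the ratio properties listed above; the arrays and the constant c are arbitrary assumptions chosen to cover all sign combinations:

```python
import numpy as np

A = np.array([3.0, -3.0, 2.0, -2.0])
B = np.array([1.5, 1.5, -4.0, -4.0])
c = 10.0

# (A*c)/B = (A/B)*c: scaling the numerator only rescales the ratio.
scale_ok = np.allclose((A * c) / B, (A / B) * c)
# (A + c)/B = A/B + c/B: the extra term is driven by B.
shift_ok = np.allclose((A + c) / B, A / B + c / B)

# Mixed signs in A and B: A/B > 0 exactly where A and B share a sign.
same_sign = np.sign(A) == np.sign(B)
ratio_positive = (A / B) > 0

# B with a single sign: the sign of the ratio simply follows the sign of A.
B_pos = np.array([1.5, 2.0, 4.0, 0.5])
ratio_sign = np.sign(A / B_pos)
```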

6.9.12. Standardization, Rescale, Normalization

- https://scikit-learn.org/0.22/modules/preprocessing.html

- https://scikit-learn.org/0.22/auto_examples/preprocessing/plot_all_scaling.html

- https://en.wikipedia.org/wiki/Feature_scaling

- comparison https://scikit-learn.org/stable/auto_examples/preprocessing/plot_all_scaling.html#sphx-glr-auto-examples-preprocessing-plot-all-scaling-py

- terms

- Scale

- generally means to change the range of the values

- Standardize

- generally means changing the values so that the distribution’s standard deviation equals one. Scaling is often implied.

- Normalize (Google)

- working with skew: scaling to a range, clipping, log scaling, z-score

- Bucketing

- reduce rare categorical values

- Out of Vocab (OOV)

- a new category that agglomerates rare categories

- StandardScaler - Standardize features

Centering and scaling.

- (x-mean(x))/std(x), where x is a column

If a feature has a variance that is orders of magnitude larger than others, it might dominate the objective function and make the estimator unable to learn from other features correctly as expected.

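Very sensitive to the presence of outliers.

Dividing by std changes the relative weight of features (e.g. their contribution to a sum a + b = v) but does not change the shape of each feature's distribution; subtracting the mean does not change the shape of the distribution either. Important for PCA.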

Standardization and Its Effects on K-Means Clustering Algorithm https://www.semanticscholar.org/paper/Standardization-and-Its-Effects-on-K-Means-Mohamad-Usman/1d352dd5f030589ecfe8910ab1cc0dd320bf600d?p2df

- required by:

- estimators that expect features to look like standard normally distributed data (Gaussian with 0 mean and unit variance)

- objective functions of learning algorithms (such as the RBF kernel of Support Vector Machines or the L1 and L2 regularizers of linear models)

- Deep learning algorithms often call for zero mean and unit variance.

- Regression-type algorithms also benefit from normally distributed data with small sample sizes.
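A minimal sketch showing that StandardScaler computes (x - mean(x))/std(x) per column, with the population std (ddof=0); the toy matrix is an assumption:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

X = np.array([[1.0, 100.0], [2.0, 200.0], [3.0, 300.0], [4.0, 1000.0]])

scaler = StandardScaler().fit(X)  # learns mean_ and scale_ for each column
X_std = scaler.transform(X)

# Same thing by hand; NumPy's std defaults to ddof=0, as StandardScaler does.
X_manual = (X - X.mean(axis=0)) / X.std(axis=0)
```

Without scaling, the second column (variance in the tens of thousands) would dominate distance-based or regularized models; after scaling both columns have unit variance.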

- MinMaxScaler

- range [0, 1]

transformation:

- X_std = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

- X_scaled = X_std * (max - min) + min, where (min, max) is the target range (feature_range)

very sensitive to the presence of outliers.
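A minimal sketch checking the two MinMaxScaler formulas above against scikit-learn, using a target range of (-1, 1) as an assumed example of `feature_range`:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

X = np.array([[1.0, -5.0], [2.0, 0.0], [5.0, 15.0]])
lo, hi = -1.0, 1.0  # target range (min, max)

X_scaled = MinMaxScaler(feature_range=(lo, hi)).fit_transform(X)

# The two formulas written out
X_std = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))
X_manual = X_std * (hi - lo) + lo
```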

- MaxAbsScaler

If only positive values are present, the range is [0, 1]. If only negative values are present, the range is [-1, 0]. If both negative and positive values are present, the range is [-1, 1]

also suffers from the presence of large outliers.
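A minimal sketch of MaxAbsScaler: each column is divided by its maximum absolute value, with no shifting, so zeros stay zeros (which also preserves sparsity). The toy matrix is an assumption; its first column is all-positive and so lands in [0, 1]:

```python
import numpy as np
from sklearn.preprocessing import MaxAbsScaler

X = np.array([[1.0, -4.0, 2.0], [3.0, 2.0, -8.0], [6.0, 1.0, 4.0]])

X_scaled = MaxAbsScaler().fit_transform(X)
X_manual = X / np.abs(X).max(axis=0)  # divide each column by its max |x|
```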

- RobustScaler

- the bulk of the values ends up roughly in [-1, 1], while outliers keep large values

transforms the feature vector by subtracting the median and then dividing by the interquartile range (75th percentile minus 25th percentile).

centering and scaling statistics are based on percentiles and are therefore not influenced by a small number of very large marginal outliers.
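A minimal sketch of RobustScaler on a column with one huge outlier (toy data, assumed): the result matches (x - median)/(q3 - q1), the ordinary values stay in [-1, 1] and the outlier stays far outside instead of squashing the rest:

```python
import numpy as np
from sklearn.preprocessing import RobustScaler

x = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [1000.0]])  # one huge outlier

x_robust = RobustScaler().fit_transform(x)  # default quantile_range=(25.0, 75.0)

q1, median, q3 = np.percentile(x, [25, 50, 75])
x_manual = (x - median) / (q3 - q1)
```

Here q1 = 2.25, median = 3.5 and q3 = 4.75, so the values 1 to 5 map to -1.0 ... 0.6, while 1000 maps to about 398.6.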

- TODO PowerTransformer, QuantileTransformer (uniform output)

- Normalization

norm: a distance (length) function

- Mean normalization (mean removal): values in (-1, 1)

- data = (np.array(data) - np.mean(data)) / (max(data) - min(data))
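A small check of the mean normalization formula on toy data (assumed): the result has mean 0 and spans a range of width exactly 1, which is why it always fits inside (-1, 1):

```python
import numpy as np

data = np.array([2.0, 4.0, 6.0, 10.0])

# Center on the mean, divide by the range (max - min)
normed = (data - data.mean()) / (data.max() - data.min())
```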

- Normalization l1 l2 (sklearn)

works on the rows, not the columns!

By default, L2 normalization is applied to each observation so that the values in a row have a unit norm. Unit norm with L2 means that if each element were squared and summed, the total would equal 1.

sklearn.preprocessing.normalize()

- l1: each element is divided by ∑|x| of its row, so the absolute values in a row sum to 1

- used with - latent semantic analysis (LSA)
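A minimal sketch of row-wise L1 and L2 normalization with `sklearn.preprocessing.normalize` on a toy matrix (assumed):

```python
import numpy as np
from sklearn.preprocessing import normalize

X = np.array([[3.0, 4.0], [1.0, -1.0]])

X_l2 = normalize(X, norm="l2")  # each row divided by sqrt(sum(x^2)); [3, 4] -> [0.6, 0.8]
X_l1 = normalize(X, norm="l1")  # each row divided by sum(|x|); [1, -1] -> [0.5, -0.5]
```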


- Standardization (Z-score Normalization): mean removal and variance scaling (mean 0, std 1)

Transform the data to center it and scale it by dividing non-constant features by their standard deviation, to get zero expectation (mean) and unit variance (np.std).

- mean = 0: check with print(np.nanmean(data, axis=0))

- std = 1: check with print(np.nanstd(data, axis=0))

- for a 1-D array XNormed = (X - X.mean())/(X.std())

- for table XNormed = (X - X.mean(axis=0))/(X.std(axis=0))

- for table rest = (data - np.nanmean(data, axis=0))/ np.nanstd(data, axis=0)

- maintains useful information about outliers and is less sensitive to them than min-max scaling

- subtracting the mean first or dividing first gives the same result, as long as the mean is recomputed after dividing

- returns a numpy array; NaNs are ignored when fitting and kept in the output

from sklearn import preprocessing

df = preprocessing.StandardScaler().fit_transform(df)

- keeps the DataFrame (with float columns):

df /= np.nanstd(df, axis=0)

df -= np.nanmean(df, axis=0)

print(df); print(df.describe()); print(df.dtypes); print(df.isna().sum().sum())
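A minimal NumPy sketch of NaN-aware z-scoring in both orders (toy data, assumed): subtracting first and dividing first give the same result when the mean is recomputed on the divided data, and the NaNs stay in place:

```python
import numpy as np

data = np.array([[1.0, 10.0], [2.0, np.nan], [3.0, 30.0], [np.nan, 50.0]])

# Subtract the mean first, then divide by std (NaNs are ignored in the statistics)
z1 = (data - np.nanmean(data, axis=0)) / np.nanstd(data, axis=0)

# Divide first, then subtract the mean recomputed on the divided data
z2 = data / np.nanstd(data, axis=0)
z2 = z2 - np.nanmean(z2, axis=0)
```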

If a feature does not have a normal (or roughly normal) distribution, the z-score may not be the most suitable method.

- Scaling features to a range or min-max scaling or min-max normalization

- x_norm = (x - x_min)/(x_max - x_min)

6.9.13. feature selection (correlation)

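Multicollinearity: one predictor variable in a multiple regression model can be linearly predicted from the others with a substantial degree of accuracy (exactly, in the case of perfect multicollinearity).

Feature selection is a technique for structural risk minimization that removes redundant or irrelevant data from the input.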

- detection

detecting multicollinearity:

- The analysis exhibits the signs of multicollinearity, such as coefficient estimates that vary excessively from model to model.

- The t-tests for each of the individual slopes are non-significant (P > 0.05), but the overall F-test of the hypothesis that all of the slopes are simultaneously 0 is significant (P < 0.05).

- The correlations among pairs of predictor variables are large.

It is possible that the pairwise correlations are small, and yet a linear dependence exists among three or even more variables.
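A NumPy-only sketch of the variance inflation factor (VIF), a standard way to catch the multi-variable dependence described above: VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing feature j on all the others. The helper `vif` and the synthetic data are hypothetical; a common rule of thumb treats VIF above 5 or 10 as a warning sign:

```python
import numpy as np

def vif(X: np.ndarray) -> np.ndarray:
    """VIF of each column: 1 / (1 - R^2) of an OLS fit on the other columns (hypothetical helper)."""
    n, k = X.shape
    out = np.empty(k)
    for j in range(k):
        y = X[:, j]
        # other columns plus an intercept column
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ coef
        r2 = 1.0 - resid.var() / y.var()
        out[j] = 1.0 / (1.0 - r2)
    return out

rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = rng.normal(size=200)
x3 = x1 + x2 + rng.normal(scale=0.05, size=200)  # almost a linear combination of x1 and x2

vifs = vif(np.column_stack([x1, x2, x3]))
pair_corr = np.corrcoef(x1, x3)[0, 1]  # only moderate (about 0.7), yet the VIFs are huge
```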

| Feature \ Response | Continuous | Categorical |
|---|---|---|
| Continuous | Pearson's correlation | LDA |
| Categorical | ANOVA | Chi-Square |

- Pearson's correlation (feature selection) is very popular for determining the relevance of all independent variables, relative to the target variable (dependent variable).

- LDA: Linear discriminant analysis is used to find a linear combination of features that characterizes or separates two or more classes (or levels) of a categorical variable.

- ANOVA: ANOVA stands for Analysis of variance. It is similar to LDA except for the fact that it is operated using one or more categorical independent features and one continuous dependent feature. It provides a statistical test of whether the means of several groups are equal or not.

- Chi-Square: a statistical test applied to groups of categorical features to evaluate the likelihood of correlation or association between them using their frequency distribution.
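A minimal sketch of scoring features against a categorical target with scikit-learn, on toy data (assumed). `chi2` needs non-negative inputs such as counts or one-hot 0/1 flags; `f_classif` runs a one-way ANOVA F-test of each numeric feature grouped by the classes of y (the continuous-feature / categorical-response cell of the table). Higher scores mean a stronger association:

```python
import numpy as np
from sklearn.feature_selection import chi2, f_classif

y = np.array([0, 0, 1, 1, 0, 1])

# One-hot categorical flags: column 0 matches "y == 0", column 1 is unrelated
X_cat = np.array([[1, 1], [1, 0], [0, 1], [0, 0], [1, 1], [0, 0]])
chi2_stats, chi2_p = chi2(X_cat, y)

# Numeric features: column 0 separates the classes, column 1 has equal class means
X_num = np.array([[0.10, 5.0], [0.20, 3.0], [0.90, 4.0],
                  [1.00, 5.0], [0.15, 4.0], [0.95, 3.0]])
f_stats, f_p = f_classif(X_num, y)
```

For column 0 of `X_cat` the observed class counts are (3, 0) against an expected (1.5, 1.5), giving a chi-square statistic of 3.0; column 1 scores only about 0.33.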

- questionable cause / causal fallacy / false cause

non causa pro causa ("non-cause for cause" in Latin)