Table of Contents

- 1. most common structures

- 2. tools 2022 pypi

- 2.1. web frameworks

- 2.2. additional libraries

- 2.3. machine learning frameworks

- 2.4. cloud platforms do you use?

- 2.5. ORM(s) do you use together with Python, if any?

- 2.6. Big Data tool(s) do you use, if any?

- 2.7. Continuous Integration (CI) system(s) do you regularly use?

- 2.8. configuration management tools do you use, if any?

- 2.9. documentation tool do you use?

- 2.10. IDE features

- 2.11. isolate Python environments between projects?

- 2.12. tools related to Python packaging do you use directly?

- 2.13. application dependency management?

- 2.14. automated services to update the versions of application dependencies?

- 2.15. installing packages?

- 2.16. tool(s) do you use to develop Python applications?

- 2.17. job role(s)?

- 3. install

- 4. Python theory

- 5. scripting

- 6. Data model

- 7. typed variables or type hints

- 8. Strings

- 9. Classes

- 10. modules and packages

- 11. folders/files USECASES

- 12. functions

- 13. asterisk(*)

- 14. with

- 15. Operators and control structures

- 16. Traverse or iteration over containers

- 17. The Language Reference

- 17.1. yield and generator expression

- 17.2. yield from

- 17.3. ex

- 17.4. function decorator

- 17.5. class decorator

- 17.6. lines

- 17.7. Indentation

- 17.8. identifier [aɪˈdentɪfaɪər] or names

- 17.9. Keywords Exactly as written here:

- 17.10. Numeric literals

- 17.11. Docstring and comments

- 17.12. Simple statements

- 17.13. open external

- 18. The Python Standard Library

- 19. exceptions handling

- 20. Logging

- 21. Collections

- 22. Conventions

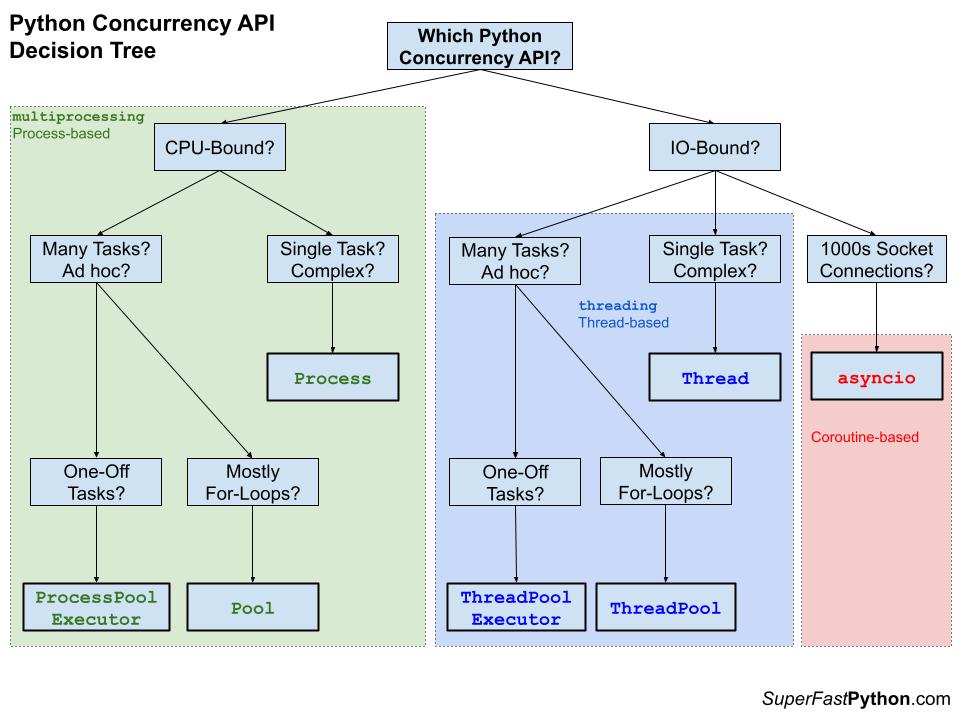

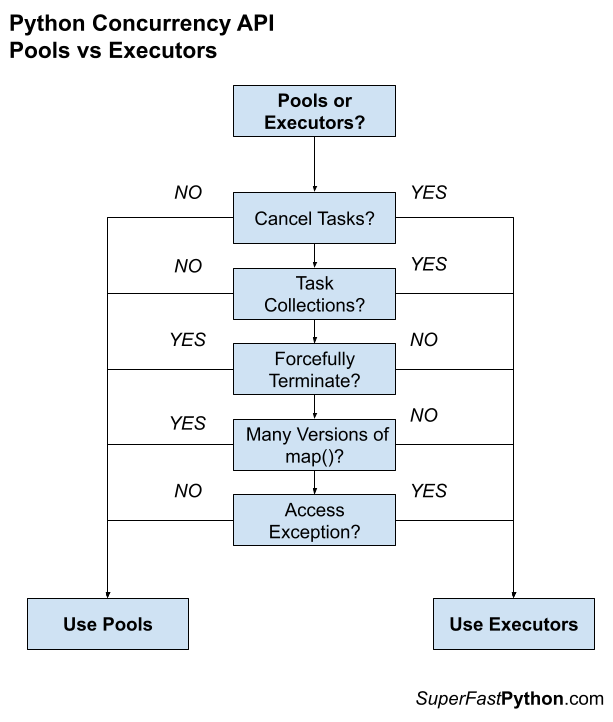

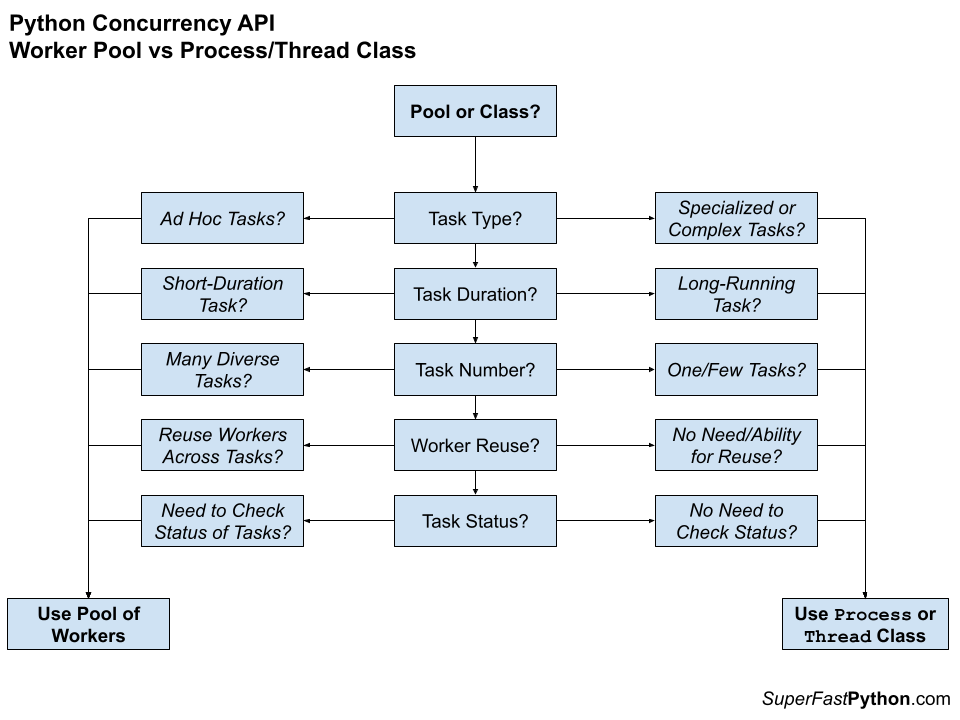

- 23. Concurrency

- 24. Monkey patch (modification at runtime)

- 25. Performance Tips

- 26. decorators

- 27. Assert

- 28. Debugging and Profiling

- 29. inject

- 30. BUILD and PACKAGING

- 30.1. build tools:

- 30.2. toml format for pyproject.toml

- 30.3. pyproject.toml

- 30.4. build

- 30.5. distutils (old)

- 30.6. terms

- 30.7. recommended

- 30.8. Upload to the package distribution service

- 30.9. editable installs PEP660

- 30.10. PyPi project name, name normalization and other specifications

- 30.11. TODO src layout vs flat layout

- 30.12. links

- 31. setuptools - build system

- 32. pip (package manager)

- 33. urllib3 and requests library

- 34. pdf 2 png

- 35. statsmodels

- 36. XGBoost

- 37. Natasha & Yargy

- 38. Stanford NER - Java

- 39. DeepPavlov

- 40. AllenNLP

- 41. spaCy

- 42. fastText

- 43. TODO rusvectores

- 44. Natural Language Toolkit (NLTK)

- 45. pymorphy2

- 46. linux NLP

- 47. fuzzysearch

- 48. Audio - librosa

- 49. Audio

- 50. Whisper

- 50.1. Byte-Pair Encoding (BPE)

- 50.2. model.transcribe(filepath or numpy)

- 50.3. model.decode(mel, options)

- 50.4. no_speech_prob and avg_logprob

- 50.5. decode from whisper_word_level 844

- 50.6. main_loop

- 50.7. words timestamps https://github.com/jianfch/stable-ts

- 50.8. confidence score

- 50.9. TODO main/notebooks

- 50.10. links

- 51. NER USE CASES

- 52. Flax and Jax

- 53. hyperparameter optimization library test-tube

- 54. Keras

- 54.1. install

- 54.2. API types

- 54.3. Sequential model

- 54.4. functional API

- 54.5. Layers

- 54.6. Models

- 54.7. Accuracy:

- 54.8. input shape & text prepare

- 54.9. ValueError: Error when checking input: expected input_1 to have 3 dimensions, but got array with shape

- 54.10. merge inputs

- 54.11. convolution

- 54.12. character CNN

- 54.13. Early stopping

- 54.14. plot history

- 54.15. ImageDataGenerator class

- 54.16. CNN Rotate

- 54.17. LSTM

- 55. Tesseract - Optical Character Recognition

- 56. FEATURE ENGINEERING

- 57. support libraries

- 58. Microsoft nni AutoML framework (stupid shut)

- 59. transformers - provides pretrained models

- 60. help

- 61. IDE

- 61.1. EPL

- 61.2. PyDev is a Python IDE for Eclipse

- 61.3. Emacs

- 61.4. PyCharm

- 61.5. ipython

- 61.6. geany

- 61.7. BlueFish

- 61.8. Eric

- 61.9. Google Colab

- 61.9.1. TODO todo

- 61.9.2. initial config

- 61.9.3. keys (checked):

- 61.9.4. keys in Internet (emacs IPython console)

- 61.9.5. Google Colab Magics

- 61.9.6. install libraries and system commands

- 61.9.7. execute code from google drive

- 61.9.8. shell

- 61.9.9. gcloud

- 61.9.10. gcloud ssh (require billing)

- 61.9.11. api

- 61.9.12. upload and download files

- 61.9.13. connect ssh (restricted)

- 61.9.14. connect ssh (unrestricted)

- 61.9.15. Restrictions

- 61.9.16. cons

- 62. Jupyter Notebook

- 63. USE CASES

- 63.1. NET

- 63.2. LISTS

- 63.2.1. all has one value

- 63.2.2. 2D list to 1D dict or list

- 63.2.3. list to string

- 63.2.4. replace one with two

- 63.2.5. remove elements

- 63.2.6. average

- 63.2.7. [1, -2, 3, -4, 5]

- 63.2.8. ZIP of arrays with different lengths

- 63.2.9. Shuffle two lists

- 63.2.10. list of dictionaries

- 63.2.11. closest in list

- 63.2.12. TIME SEQUENCE

- 63.2.13. split list in chunks

- 63.3. FILES

- 63.4. STRINGS

- 63.5. DICT

- 63.6. argparse: command line arguments

- 63.7. way to terminate

- 63.8. JSON

- 63.9. NN EQUAL QUANTITY FROM SAMPLES

- 63.10. most common element

- 63.11. print numbers

- 63.12. SCALE

- 63.13. smooth

- 63.14. one-hot encoding

- 63.15. binary encoding

- 63.16. map encoding

- 63.17. Accuracy

- 63.18. garbage collect

- 63.19. Class loop for member variables

- 63.20. filter special characters

- 63.21. measure time

- 63.22. primes in interval

- 63.23. unicode characters in interval

- 64. Flask

- 64.1. terms

- 64.2. components

- 64.3. static files and debugging console

- 64.4. start, run

- 64.5. Quart

- 64.6. GET

- 64.7. app.route

- 64.8. gentoo dependencies

- 64.9. blueprints

- 64.10. Hello world

- 64.11. curl

- 64.12. response object

- 64.13. request object

- 64.14. Jinja templates

- 64.15. security

- 64.16. my projects

- 64.17. Flask-2.2.2 hashes

- 64.18. flask-restful

- 64.19. example

- 64.20. swagger

- 64.21. werkzeug

- 64.22. debug

- 64.23. test

- 64.24. production

- 64.25. vulnerabilities

- 64.26. USECASES

- 64.27. async/await and ASGI

- 64.28. use HTTPS

- 64.29. links

- 65. FastAPI

- 66. Databases

- 67. Virtualenv

- 68. ldap

- 69. Containerized development

- 70. security

- 71. serialization

- 72. cython

- 73. headless browsers

- 74. selenium

- 75. plot in terminal

- 76. xml parsing

- 77. pytest

- 78. static analysis tools:

- 79. release as executable - Pyinstaller

- 80. troubleshooting

-- mode: Org; fill-column: 110; coding: utf-8; -- #+TITLE Python my notes

- built-in functions https://docs.python.org/3/library/functions.html

- pypi https://pypi.org/

- https://www.tutorialspoint.com/python3/python_modules.htm

- doc https://docs.python.org/3/contents.html

- https://docs.python.org/3/index.html

- software https://github.com/vinta/awesome-python

TODO: from os import environ as env; env.get('MYSQL_PASSWORD')

1. most common structures

1.1. sliced windows

    from itertools import islice

    def window(seq, n=2):
        """Return a sliding window (of width n) over data from the iterable.

        s -> (s0, s1, ..., s[n-1]), (s1, s2, ..., sn), ...
        """
        it = iter(seq)
        result = tuple(islice(it, n))
        if len(result) == n:
            yield result
        for elem in it:
            result = result[1:] + (elem,)
            yield result

    # or, for sequences that support slicing
    seq = [0, 1, 2, 3, 4, 5]
    window_size = 3
    for i in range(len(seq) - window_size + 1):
        print(seq[i: i + window_size])

1.2. compare row to itself

    import numpy as np

    a = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
    r = np.zeros((len(a), len(a)))
    for x in a:
        for y in a:
            if y < x:
                continue  # skip pairs below the diagonal
            r[x, y] = x + y
    print(r)

Output:

    [[ 0.  1.  2.  3.  4.  5.  6.  7.  8.  9.]
     [ 0.  2.  3.  4.  5.  6.  7.  8.  9. 10.]
     [ 0.  0.  4.  5.  6.  7.  8.  9. 10. 11.]
     [ 0.  0.  0.  6.  7.  8.  9. 10. 11. 12.]
     [ 0.  0.  0.  0.  8.  9. 10. 11. 12. 13.]
     [ 0.  0.  0.  0.  0. 10. 11. 12. 13. 14.]
     [ 0.  0.  0.  0.  0.  0. 12. 13. 14. 15.]
     [ 0.  0.  0.  0.  0.  0.  0. 14. 15. 16.]
     [ 0.  0.  0.  0.  0.  0.  0.  0. 16. 17.]
     [ 0.  0.  0.  0.  0.  0.  0.  0.  0. 18.]]

2. tools 2022 pypi

2.1. web frameworks

- Bottle

- CherryPy

- Django

- Falcon

- FastAPI

- Flask

- Hug

- Pyramid

- Tornado

- web2py

2.2. additional libraries

- aiohttp

- Asyncio

- httpx

- Pillow

- Pygame

- PyGTK

- PyQT

- Requests

- Six

- Tkinter

- Twisted

- Kivy

- wxPython

- Scrapy

2.3. machine learning frameworks

- Gensim

- MXNet

- NLTK

- Theano

2.4. cloud platforms do you use?

- AWS

- Rackspace

- Linode

- OpenShift

- PythonAnywhere

- Heroku

- Microsoft Azure

- DigitalOcean

- Google Cloud Platform

- OpenStack

2.5. ORM(s) do you use together with Python, if any?

- No database development

- Tortoise ORM

- Dejavu

- Peewee

- SQLAlchemy

- Django ORM

- PonyORM

- Raw SQL

- SQLObject

2.6. Big Data tool(s) do you use, if any?

- None

- Apache Samza

- Apache Kafka

- Dask

- Apache Beam

- Apache Hive

- Apache Hadoop/MapReduce

- Apache Spark

- Apache Tez

- Apache Flink

- ClickHouse

2.7. Continuous Integration (CI) system(s) do you regularly use?

- CruiseControl

- Gitlab CI

- Travis CI

- TeamCity

- Bitbucket Pipelines

- AppVeyor

- GitHub Actions

- Jenkins / Hudson

- CircleCI

- Bamboo

2.8. configuration management tools do you use, if any?

- None

- Chef

- Puppet

- Custom solution

- Ansible

- Salt

2.9. documentation tool do you use?

- I don’t use any documentation tools

- Sphinx

- MKDocs

- Doxygen

2.10. IDE features

Answer options for each item: Often / From time to time / Never or almost never.

- use Version Control Systems

- use Issue Trackers

- use code coverage

- use code linting (programs that analyze code for potential errors)

- use Continuous Integration tools

- use optional type hinting

- use NoSQL databases

- use autocompletion in your editor

- run / debug or edit code on remote machines (remote hosts, VMs, etc.)

- use SQL databases

- use a Python profiler

- use Python virtual environments for your projects

- use a debugger

- write tests for your code

- refactor your code

2.11. isolate Python environments between projects?

- virtualenv

- venv

- virtualenvwrapper

- hatch

- Poetry

- pipenv

- Conda

2.12. tools related to Python packaging do you use directly?

- pip

- Conda

- pipenv

- Poetry

- venv (standard library)

- virtualenv

- flit

- tox

- PDM

- twine

- Containers (eg: via Docker)

- Virtual machines

- Workplace specific proprietary solution

2.13. application dependency management?

- None

- pipenv

- poetry

- pip-tools

2.14. automated services to update the versions of application dependencies?

- None

- Dependabot

- PyUp

- Custom tools, e.g. a cron job or scheduled CI task

- No, my application dependencies are updated manually

2.15. installing packages?

- None

- pip

- easy_install

- Conda

- Poetry

- pip-sync

- pipx

2.16. tool(s) do you use to develop Python applications?

- None / I'm not sure

- Setuptools

- build

- Wheel

- Enscons

- pex

- Flit

- Poetry

- conda-build

- maturin

- PDM-PEP517

2.17. job role(s)?

- Architect

- QA engineer

- Business analyst

- DBA

- CIO / CEO / CTO

- Technical support

- Technical writer

- Team lead

- Systems analyst

- Data analyst

- Product manager

- Developer / Programmer

3. install

pip3 install --upgrade pip --user

3.1. change Python version Ubuntu & Debian

    update-alternatives --install /usr/bin/python python /usr/bin/python3.8 1
    echo 1 | update-alternatives --config python

4. Python theory

4.1. Python [ˈpʌɪθ(ə)n]

- interpreted

- code readability

- indentation instead of curly braces

- designed to be highly extensible

- garbage collector

- functions are first class citizens

- multiple inheritance

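- all parameters (arguments) are passed by object reference (call by sharing): rebinding a parameter inside a function does not affect the caller, but mutating a mutable argument does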

- nothing in Python makes it possible to enforce data hiding

- all classes inherit from object
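A minimal sketch of how arguments are passed, assuming two hypothetical helper functions (rebind and mutate) that are not part of these notes. The function receives a reference to the same object as the caller, so mutation is visible outside while rebinding the local name is not.

```python
def rebind(items):
    # Rebinding the local name creates a new local binding only;
    # the caller's list is untouched.
    items = [0]
    return items


def mutate(items):
    # Mutating the shared object is visible to the caller.
    items.append(4)


numbers = [1, 2, 3]
rebind(numbers)
after_rebind = list(numbers)   # snapshot after rebind()
mutate(numbers)
after_mutate = list(numbers)   # snapshot after mutate()
print(after_rebind, after_mutate)
```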

Multi-paradigm:

- imperative

- procedural

- object-oriented

- functional (in the Lisp tradition) - (itertools and functools) - borrowed from Haskell and Standard ML

- reflective

- aspect-oriented programming by metaprogramming and metaobjects (magic methods)

- dynamic name resolution (late binding)

Typing discipline:

- Duck

- dynamic

- gradual (since 3.5) - names may be annotated with a type (checked statically by external tools) or left unannotated (dynamic).

- strong

Python and CPython are managed by the non-profit Python Software Foundation.

The Python Standard Library 3.6

- string processing (regular expressions, Unicode, calculating differences between files)

- Internet protocols (HTTP, FTP, SMTP, XML-RPC, POP, IMAP, CGI programming)

- software engineering (unit testing, logging, profiling, parsing Python code)

- operating system interfaces (system calls, filesystems, TCP/IP sockets)

4.2. philosophy

document Zen of Python (PEP 20)

- Beautiful is better than ugly

- Explicit is better than implicit

- Simple is better than complex

- Complex is better than complicated

- Readability counts

- Errors should never pass silently. Unless explicitly silenced.

- There should be one-- and preferably only one --obvious way to do it.

- If the implementation is hard to explain, it's a bad idea. If the implementation is easy to explain, it may be a good idea.

- Namespaces are one honking great idea -- let's do more of those!

Other

- goal - keeping it fun to use ( spam and eggs instead of the standard foo and bar)

- pythonic - related to style (code is pythonic )

- Pythonists, Pythonistas, and Pythoneers - names for Python enthusiasts

4.3. History

Most revisions of Python bring performance improvements over the previous version.

- 1989 - Guido van Rossum starts implementing Python

- 2000 - Python 2.0 - cycle-detecting garbage collector and support for Unicode

- 2008 - Python 3.0 - not completely backward-compatible - include the 2to3 utility, which automates (at least partially) the translation of Python 2 code to Python 3.

- 2009 - Python 3.1 - ordered dictionaries (collections.OrderedDict)

- 2015 - Python 3.5 - type hints (typing module, PEP 484)

- 2016 - Python 3.6 - asyncio declared stable (it was added provisionally in 3.4), formatted string literals (f-strings), syntax for variable annotations.

- PEP523 API to make frame evaluation pluggable at the C level.

3.7

- built-in breakpoint() function that calls pdb; before it was: import pdb; pdb.set_trace()

- @dataclass (from dataclasses import dataclass) - syntactic sugar over class annotations; comes with basic functionality already implemented: instantiate, print, and compare data class instances

- contextvars module - mechanism for managing context-local variables, similar to thread-local storage (TLS) but also aware of asyncio tasks, PEP 567
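A short sketch of what @dataclass generates, using a hypothetical Point class chosen only for illustration. The decorator adds __init__, __repr__ and __eq__ based on the annotated fields.

```python
from dataclasses import dataclass


@dataclass
class Point:
    x: int
    y: int = 0  # field with a default value


p = Point(1, 2)          # generated __init__
q = Point(1, 2)
point_repr = repr(p)     # generated __repr__ lists the fields
points_equal = p == q    # generated __eq__ compares field by field
print(point_repr, points_equal)
```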

3.8

- Positional-Only Parameter: pow(x, y, z=None, /)

- Assignment Expressions: if (match := pattern.search(data)) is not None: - This feature allows developers to assign values to variables within an expression.

- f"{a=}", f"Square has area of {(area := length**2)} perimeter of {(perimeter := length*4)}"

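- new SyntaxWarnings: when is / is not is used to compare with a literal (where == was intended), and when a comma is probably missing between items such as tuples or lists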
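The 3.8 syntax additions above can be sketched together as follows. The clamp function and the sample data are hypothetical, made up for this illustration; the code needs Python 3.8 or newer.

```python
import re


def clamp(value, low, high, /):
    # Parameters before "/" are positional-only.
    return max(low, min(value, high))


pattern = re.compile(r"\d+")
data = "order 66"

# Assignment expression: bind and test in one expression.
if (match := pattern.search(data)) is not None:
    found = match.group()
else:
    found = None

length = 3
debug_text = f"{length=}"  # self-documenting f-string
print(clamp(15, 0, 10), found, debug_text)
```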

3.9

- Merge (|) and update (|=) operators added to dict to complement the dict.update() method and {**d1, **d2}.

- Added str.removeprefix(prefix) and str.removesuffix(suffix) to easily remove unneeded sections of a string.

- More Flexible Decorators: Traditionally, a decorator has had to be a named, callable object, usually a function or a class. PEP 614 allows decorators to be any callable expression.

- before: decorator: '@' dotted_name [ '(' [arglist] ')' ] NEWLINE

- after: decorator: '@' namedexpr_test NEWLINE

- typehints: list[int] do not require import typing;

- Annotated[int, ctype("char")] - integer that should be considered as a char type in C.

- Better time zone handling: the zoneinfo module (PEP 615).

- The new parser based on PEG was introduced, making it easier to add new syntax to the language.
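A brief sketch of the 3.9 additions listed above, with made-up dictionaries and a hypothetical total function; it needs Python 3.9 or newer.

```python
d1 = {"a": 1, "b": 2}
d2 = {"b": 20, "c": 3}

merged = d1 | d2      # new dict; on key conflicts the right operand wins
d1 |= {"z": 0}        # in-place update, like d1.update(...)

# Remove a known prefix and suffix without slicing arithmetic.
name = "test_module.py".removeprefix("test_").removesuffix(".py")


def total(values: list[int]) -> int:
    # Built-in generics: no "from typing import List" needed.
    return sum(values)


print(merged, d1, name, total([1, 2, 3]))
```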

3.10

- Structural pattern matching (PEP 634) was added, providing a way to match against and destructure data structures.

- example:

    match command.split():
        case [action, obj]:
            ...  # interpret action, obj

- Improved error messages and error recovery were added to the parser, making it easier to debug syntax errors.

- Parenthesized context managers: the with statement accepts several context managers enclosed in parentheses, so they can be split across lines: with (open("test_file1.txt", "w") as test, open("test_file2.txt", "w") as test2):

3.11

- The bundled pip package installer (installed through ensurepip) was updated, bringing package-management improvements.

- Improved error messages: tracebacks point to the exact expression that caused the error (PEP 657), making runtime errors easier to understand.

- Some of the built-in modules were updated and improved, including asyncio (asyncio.TaskGroup) and typing (typing.Self).

- Hash randomization (enabled by default since Python 3.3, so not new in 3.11) improves security by making it more difficult for attackers to exploit hash-based algorithms that are used for operations such as dictionary lookups.

- package has been deprecated

3.12

- distutils removed

- support for the Linux perf profiler; new low-overhead API for profilers and debuggers, sys.monitoring (PEP 669)

- buffer protocol - access to the raw region of memory, now usable from Python code (PEP 688)

- type hints:

- TypedDict can be used as a source of types for typing **kwargs (PEP 692)

- no need to import TypeVar: func[T] syntax indicates generic type references (PEP 695)

- @override decorator can be used to flag methods that override methods in a parent class (PEP 698)

preparing for concurrency:

- Immortal objects - to implement other optimizations (like avoiding copy-on-write)

- subinterpreters - the ability to have multiple instances of an interpreter, each with its own GIL; there is no end-user interface to subinterpreters yet.

- asyncio is larger and faster

- sqlite3 module: a command-line interface has been added (python -m sqlite3)

- unittest: a --durations command line option was added, showing the N slowest test cases

4.3.1. 3.0

- Old feature removal: old-style classes, string exceptions, and implicit relative imports are no longer supported.

- exceptions now need the as keyword: except Exception as var

- with is now built in and no longer needs to be imported from __future__.

- range: xrange() from Python 2 has been replaced by range(). The original range() behavior is no longer available.

- print changed

- input

- all text content such as strings are Unicode by default

- / on integers returns a float (true division); in Python 2 it was integer division. // is floor division.

- Python 2.7 code cannot always be translated to Python 3 automatically.

4.4. Implementations

CPython, the reference implementation of Python

- interpreter and a compiler as it compiles Python code into bytecode before interpreting it

- (GIL) problem - only one thread may be processing Python bytecode at any one time

- One thread may be waiting for a client to reply, and another may be waiting for a database query to execute, while the third thread is actually processing Python code.

- Parallel execution of Python bytecode (CPU-bound work) can only be achieved with separate CPython interpreter processes managed by a multitasking operating system

implementations that are known to be compatible with a given version of the language are IronPython, Jython and PyPy.

- IronPython - written in C#, uses JIT, targets the .NET Framework and Mono. Scripts created for it may not work under CPython

- PyPy - just-in-time compiler, written in RPython (a restricted subset of Python).

- Jython - Python in Java for the Java platform

CPython based:

- Cython - translates a Python script into C and makes direct C-level API calls into the Python interpreter

Stackless Python - a significant fork of CPython that implements microthreads; it does not use the C memory stack, thus allowing massively concurrent programs.

Numba - NumPy-aware optimizing runtime compiler for Python

MicroPython - Python for microcontrollers (runs on the pyboard and the BBC Microbit)

Jython and IronPython - do not have a GIL, so multithreaded execution of a CPU-bound Python application works. These platforms are always playing catch-up with new language features or library features.

Pythran, a static Python-to-C++ extension compiler for a subset of the language, mostly targeted at numerical computation. Pythran can be (and is probably best) used as an additional backend for NumPy code in Cython.

mypyc, a static Python-to-C extension compiler, based on the mypy static Python analyser. Like Cython's pure Python mode, mypyc can make use of PEP-484 type annotations to optimise code for static types. Cons: no support for low-level optimisations and typing, opinionated Python type interpretation, reduced Python compatibility and introspection after compilation

Nuitka, a static Python-to-C extension compiler.

- Pros: highly language compliant, reasonable performance gains, support for static application linking (similar to cython_freeze but with the ability to bundle library dependencies into a self-contained executable)

- Cons: no support for low-level optimisations and typing

Brython is an implementation of Python 3 for client-side web programming (in JavaScript). It provides a subset of Python 3 standard library combined with access to DOM objects. It is packaged in Gentoo as dev-python/brython.

4.5. Bytecode:

- Java is compiled into bytecode and then executed by the JVM.

- C language is compiled into object code, and then becomes the executable file after the linker

- Python is first converted to bytecode and then executed via ceval.c. The interpreter directly executes the translated instruction set.

Bytecode is a set of instructions for a virtual machine called the Python Virtual Machine (PVM).

The PVM is an interpreter that runs the bytecode.

The bytecode is platform-independent, but the PVM is specific to the target machine. Bytecode is stored in .pyc files.

The bytecode files are stored in a folder named __pycache__. This folder is created automatically when you import another file that you created.

To create it manually: python -m compileall file_1.py … file_n.py
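To look at the bytecode directly, the standard dis module can disassemble a function. This is a minimal sketch with a hypothetical add function; the exact instruction names differ between CPython versions, so only the general shape should be relied on.

```python
import dis


def add(a, b):
    return a + b


code = add.__code__          # code object produced by the compiler
raw = code.co_code           # raw bytecode as a bytes object
opnames = [ins.opname for ins in dis.get_instructions(add)]

dis.dis(add)                 # human-readable listing, version dependent
```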

4.6. terms

binding the name to the object - x = 2 - (generic) name x receives a reference to a separate, dynamically allocated object of numeric (int) type of value 2

4.7. Indentation and blank lines

The number of spaces used for indentation does not matter, as long as it is consistent within a block.

    if True:                # header line, ends with ":"
        print("Answer")     # both prints form the suite of the if
        print("True")
    else:
        print("Answer")
        print("False")

Blank Lines - ignored

semicolon ( ; ) allows multiple statements on a single line

Internally:

- INDENT - token that marks the beginning of a new block

- DEDENT - token that marks the end of a block.

4.8. mathematic

- arbitrary-precision integer arithmetic: the size of integers is limited only by the amount of available memory

- Extensive mathematics library, and the third-party library NumPy that further extends the native capabilities

- chained comparisons are supported: a < b < c
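Both points can be checked in a few lines. This is only an illustrative sketch with made-up values.

```python
# Integers grow as needed; there is no fixed-width overflow.
big = 2 ** 200
digits = len(str(big))

# A chained comparison is evaluated as (1 < x) and (x < 10),
# with x evaluated only once.
x = 5
in_range = 1 < x < 10
out_of_range = 1 < 50 < 10
print(digits, in_range, out_of_range)
```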

4.9. WSGI (Web Server Gateway Interface)(whiskey)

- calling convention for web servers to forward requests to web applications or frameworks written in the Python programming language.

- like Java's "servlet" API.

- WSGI middleware components, which implement both sides of the API, typically in Python code.
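The calling convention can be sketched with the standard library only. The names application and call_app are hypothetical; call_app plays the role of the server side and uses wsgiref.util.setup_testing_defaults to build a fake request environment.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    # A WSGI application is a callable taking the request environment
    # and a start_response callback; it returns an iterable of bytes.
    body = b"Hello, WSGI\n"
    headers = [("Content-Type", "text/plain"),
               ("Content-Length", str(len(body)))]
    start_response("200 OK", headers)
    return [body]


def call_app(app):
    # Minimal stand-in for a web server, for illustration only.
    environ = {}
    setup_testing_defaults(environ)
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers

    chunks = app(environ, start_response)
    return captured["status"], b"".join(chunks)


status, payload = call_app(application)
print(status, payload)
```

A real server (for example wsgiref.simple_server from the standard library) would call application in the same way for every request.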

5. scripting

5.1. top-level script environment

- https://docs.python.org/3.9/library/inspect.html

- https://docs.python.org/3/library/functions.html?highlight=__file__

- https://docs.python.org/3/reference/import.html

- https://geek-university.com/python/display-module-content/

__name__ - equal to '__main__' when run as a script, with "python -m", or from an interactive prompt. '__main__' is the name of the scope in which top-level code executes.

if __name__ == "__main__": - the guarded code is not executed when the module is imported

__file__ - full path to module file
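A minimal sketch of the usual guard, with a hypothetical main function: the body runs when the file is executed directly and is skipped when the file is imported as a module.

```python
def main() -> int:
    # __name__ is "__main__" for the top-level script,
    # otherwise it is the module's import name.
    print(f"running as {__name__}")
    return 0


if __name__ == "__main__":
    main()
```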

5.2. command line arguments parsing

    import sys

    print('Number of arguments:', len(sys.argv), 'arguments.')
    print('Argument List:', str(sys.argv))

The getopt or argparse module makes parsing more convenient.

5.3. python executable

- -c cmd : program passed in as string (terminates option list)

- -m mod : run library module as a script (terminates option list)

- -O : remove assert and __debug__-dependent statements; add .opt-1 before .pyc extension; also PYTHONOPTIMIZE=x

- -OO : do -O changes and also discard docstrings; add .opt-2 before .pyc extension

- -s : don't add user site directory to sys.path; also PYTHONNOUSERSITE. Disables /home/u2/.local/lib/python3.8/site-packages

- -S : don't imply 'import site' on initialization

- /usr/lib/python38.zip

- /usr/lib/python3.8

- /usr/lib/python3.8/lib-dynload

5.4. current dir

script_dir = os.path.dirname(os.path.abspath(__file__))

5.5. unix logger

    import logging
    import sys

    def init_logger(level, logfile_path: str = None):
        """
        stderr: WARNING, ERROR and CRITICAL
        stdout: < WARNING
        :param logfile_path: optional path of a log file
        :param level: level for stdout
        :return: configured logger
        """
        formatter = logging.Formatter('mkbsftp [%(asctime)s] %(levelname)-6s %(message)s')
        logger = logging.getLogger(__name__)
        logger.setLevel(level)  # debug - lowest
        # log file
        if logfile_path is not None:
            h0 = logging.FileHandler(logfile_path)
            h0.setLevel(level)
            h0.setFormatter(formatter)
            logger.addHandler(h0)
        # stdout -- python3 script.py 2>/dev/null | xargs
        h1 = logging.StreamHandler(sys.stdout)
        h1.setLevel(level)  # level may be changed
        h1.addFilter(lambda record: record.levelno < logging.WARNING)
        h1.setFormatter(formatter)
        # stderr -- python3 script.py 2>&1 >/dev/null | xargs
        h2 = logging.StreamHandler(sys.stderr)
        h2.setLevel(logging.WARNING)  # fixed level
        h2.setFormatter(formatter)
        logger.addHandler(h1)
        logger.addHandler(h2)
        return logger

5.6. How does python find packages?

sys.path - Initialized from the environment variable PYTHONPATH, plus an installation-dependent default.

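find module (the imp module is deprecated and was removed in Python 3.12; importlib.util.find_spec is the replacement):

- import imp

- imp.find_module('numpy')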
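On current Python versions the same lookup can be sketched with importlib.util.find_spec. The module name json is used here only because it is always available; the second name is a deliberately non-existent, made-up module.

```python
import importlib.util

# Locate a module on sys.path without importing it.
spec = importlib.util.find_spec("json")
origin = spec.origin if spec is not None else None

# A top-level module that cannot be found yields None.
missing = importlib.util.find_spec("surely_not_installed_module_xyz")
print(origin, missing)
```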

5.7. dist-packages and site-packages?

- dist-packages is a Debian-specific convention that is also present in its derivatives, like Ubuntu. Modules are installed to dist-packages when they come from the Debian package manager. This is to reduce conflict between the system Python, and any from-source Python build you might install manually.

5.8. file size and modification date

    os.stat(pf).st_size   # file size in bytes
    os.stat(pf).st_mtime  # modification time, seconds since the epoch

5.9. environment

os.environ - dictionary

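try ... except KeyError: - handles a variable that is missing from the dictionary

os.environ.get('FLASK_SOME_STAFF') - None if no key

If a variable is exported without a value (export BBB), it is not placed in the environment, so os.environ['BBB'] raises KeyError.

DEBUG = os.environ.get('DEBUG', False) # the string value of DEBUG if set (any non-empty string, even "False", is truthy), otherwise False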
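Environment values are always strings, so a flag needs explicit parsing. The helper env_flag and the variable names below are hypothetical, written only to illustrate the pitfall.

```python
import os


def env_flag(name, default=False):
    # Interpret common textual spellings of "true"; anything else is False.
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


os.environ["DEMO_DEBUG"] = "False"

# Naive check: the non-empty string "False" is truthy.
naive = bool(os.environ.get("DEMO_DEBUG", False))
# Parsed check: the text is interpreted.
parsed = env_flag("DEMO_DEBUG")
print(naive, parsed)
```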

5.10. -m mod - run library module as a script

https://peps.python.org/pep-0338/

- __name__ is always '__main__'

5.10.1. e.g. mymodule/__main__.py:

    import argparse

    def main():
        parser = argparse.ArgumentParser()
        parser.add_argument("-p", "--port", action="store", default="8080")
        parser.add_argument("--host", action="store", default="0.0.0.0")
        args = parser.parse_args()
        port = int(args.port)
        host = str(args.host)
        # app is assumed to be the application object defined elsewhere in the package
        app.run(host=host, port=port, debug=False)
        return 0

    if __name__ == "__main__":
        main()

6. Data model

Standard data types:

- Numbers

- String

- List :list - []

- Tuple :tuple - ()

- Dictionary :dict - {}

- Callable :callable

- :object

6.1. special types

https://docs.python.org/3/reference/datamodel.html

- None - a single value

- NotImplemented - Numeric methods and rich comparison methods should return this value if they do not implement the operation for the operands provided.

- Ellipsis - accessed through the literal ... or the built-in name Ellipsis.

- numbers.Number

- Sequences - represent finite ordered sets indexed by non-negative numbers (len() for sequence)

- mutable: lists, Byte Arrays

- immutable: str, tuple, bytes

- Set types -

- Sets - mutable

- Frozen sets - frozenset()

- Mappings - indexed by key (a[k]); support del a[k] and len()

- Callable

- Instance methods

- Generator functions - function or method which uses the yield statement

- when called, always returns an iterator object

- Coroutine functions - async def - when called, returns a coroutine object

- Asynchronous generator functions

- Built-in functions

- Built-in methods

- Classes - factories for new instances of themselves

- Class Instances - can be made callable by defining a __call__() method in their class.

- Modules - __name__: the module's name; __doc__; __file__: the pathname of the file from which the module was loaded; __annotations__; __dict__: the module's namespace as a dictionary object.

- Custom classes -

- Class instances
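Several of the callable kinds listed above can be told apart at runtime. This is a sketch with hypothetical names (countdown, fetch, Multiplier) that are not part of the notes.

```python
import inspect


def countdown(n):
    # Generator function: calling it returns an iterator object.
    while n > 0:
        yield n
        n -= 1


async def fetch():
    # Coroutine function: calling it returns a coroutine object.
    return 42


class Multiplier:
    # Instances become callable by defining __call__().
    def __init__(self, factor):
        self.factor = factor

    def __call__(self, value):
        return value * self.factor


values = list(countdown(3))
double = Multiplier(2)
coro = fetch()
is_coro = inspect.iscoroutine(coro)
coro.close()  # not awaited here; close it to avoid a RuntimeWarning
print(values, double(21), is_coro)
```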

6.2. theory

- everything is an object, even classes. (Von Neumann’s model of a “stored program computer”)

- object has identity, a type and a value

- identity - never changes once the object has been created (in CPython it is the address in memory)

- id(object) = identity

- x is y - compare identities x is not y

- type or class

- type()

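- the value of some objects can change (mutable), the value of others cannot (immutable); an immutable container is still considered immutable even if it refers to a mutable object whose value changes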

- numbers, strings and tuples are immutable

- dictionaries and lists are mutable
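Identity, type and value can be inspected with id(), type() and ==. A small sketch with made-up variables:

```python
a = [1, 2]
b = a            # second name bound to the same object
c = [1, 2]       # equal value, different object

same_identity = a is b
equal_not_same = (a == c) and (a is not c)

id_before = id(a)
a.append(3)                        # the value changes...
id_unchanged = id(a) == id_before  # ...the identity does not

# A tuple is immutable, but it may refer to a mutable object.
t = (1, [2, 3])
t[1].append(4)
print(same_identity, equal_not_same, id_unchanged, type(a), t)
```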

6.3. Types build-in

- None - name to access single object - to signify the absence of a value = false.

- NotImplemented - name to access single object - Numeric methods and rich comparison methods should return this value if they do not implement the operation for the operands provided. = true.

- Ellipsis - single object with name to access - … or Ellipsis = true

- numbers.Number - immutable

- numbers.Integral

- Integers (int) - unlimited range

- Booleans (bool) - 0 and 1, in most contextes "False" or "True"

- numbers.Real (float) - underlying machine architecture определеяет accepted range and handling of overflow

- numbers.Complex (complex) - z.real and z.imag - pair of machine-level double precision floating point numbers


- Sequences - finite ordered sets len() - index a[i]: 0 to n-1; min(s), max(s) ; s * n - n copies of s ;

s + t concatenation; x in s - True if an item of s is equal to x

- Immutable sequences - list.index(obj)

- str - immutable sequence of Unicode code points - s[0] is a string of length 1 (one code point). ord(c) - code point to int in range 0 - 0x10FFFF; chr(i) - int to one-character string; str.encode() -> bytes; bytes.decode() -> str

- Tuple - immutable: (), (1,), (1, '23'); items of any type.

- range()

- Bytes - items are 8-bit bytes, integers 0-255 - literal b'ab'; bytes() creates one

- Mutable sequences - unhashable - del list[0] removes the first item

- List - mutable: [1, '3']; items of any type.

- Byte Array - bytearray - bytearray()

- memoryview


- Set types - unordered, finite sets of unique, immutable (hashable) objects - compared with ==; support len()

- set - mutable - items must be hashable; supports x in s and for x in s - {'h', 'o', 'l', 'e'}

- frozenset - immutable and hashable - it can be used again as an element of another set

- Mappings - finite sets of objects indexed by keys; functions: del a[k], len()

- Dictionary - mutable - keys are unique within a dictionary - indexed by nearly arbitrary values - keys must be hashable (in practice, immutable) - {2: 'Zara', 'Age': 7, 'Class': 'First'}; d[3] = "my" # add new entry

- Callable types - objects to which the call operation can be applied, i.e. code that can be called

- User-defined functions

- Instance methods: read-only attributes:

- Generator functions - function which returns a generator iterator. It looks like a normal function except that it contains yield expressions

- Coroutine functions - async def - returns a coroutine object

- Asynchronous generator functions

- Built-in functions - len() and math.sin() (math is a standard built-in module)

- Built-in methods alist.append()

- Classes - act as factories for new instances of themselves. arguments of the call are passed to __new__()

- Class Instances - may be callable by defining a __call__() method

- Modules

- Custom classes

6.4. Truth Value Testing

false:

- None and False.

- zero of any numeric type: 0, 0.0, 0j, Decimal(0), Fraction(0, 1)

- empty sequences and collections: '', (), [], {}, set(), range(0)
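A quick way to confirm the list above is to pass each value through bool(). The Basket class is a hypothetical example that relies on the documented rule that an object without __bool__() is false when its __len__() returns 0.

```python
from decimal import Decimal
from fractions import Fraction

falsy = [None, False, 0, 0.0, 0j, Decimal(0), Fraction(0, 1),
         "", (), [], {}, set(), range(0)]
truthy = [1, -1, "0", " ", [0], (None,), {"k": None}, range(1)]

all_falsy = not any(bool(v) for v in falsy)
all_truthy = all(bool(v) for v in truthy)


class Basket:
    """Hypothetical container: truthiness follows __len__()."""

    def __init__(self, items):
        self.items = list(items)

    def __len__(self):
        return len(self.items)


empty_basket_is_false = not Basket([])
full_basket_is_true = bool(Basket(["apple"]))
```

Note that "0" and [0] are true: only emptiness matters for strings and containers, not what they contain.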

6.5. Shallow and deep copy operations

- import copy

- copy.copy(x) Return a shallow copy of x.

- copy.deepcopy(x[, memo]) Return a deep copy of x.

- a class can define its own copy behavior: __copy__() and __deepcopy__()
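The difference between the two copy functions shows up only with nested mutable objects. A minimal sketch, using a made-up dictionary:

```python
import copy

original = {"name": "config", "ports": [80, 443]}

shallow = copy.copy(original)      # new dict, but the inner list is shared
deep = copy.deepcopy(original)     # new dict and a new inner list

original["ports"].append(8080)     # mutate the nested list in place
original["name"] = "changed"       # rebind a top-level key

shares_inner_list = shallow["ports"] is original["ports"]
```

The shallow copy sees the appended port because it shares the list, but not the renamed key, because the top-level dictionary itself was copied. The deep copy sees neither change.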

6.6. links

- https://docs.python.org/3/reference/datamodel.html

- https://docs.python.org/3/library/stdtypes.html

- objects by name or by reference: mutable and immutable, 2019 https://realpython.com/pointers-in-python/

7. typed variables or type hints

- https://docs.python.org/3/library/typing.html

- from typing import Dict, Tuple, Sequence, Any, Union, Callable, TypeVar, Generic

variable_name: type

7.1. typing.Annotated and PEP-593

data models, validation, serialization, UI

v: Annotated[T, *x]

- v: a “name” (variable, function parameter, . . . )

- T: a valid type

- x: at least one metadata (or annotation), passed in a variadic way. The metadata can be used for either static analysis or at runtime.

Ignorable: When a tool or a library does not support annotations or encounters an unknown annotation it should just ignore it and treat annotated type as the underlying type.

stored in obj.__annotations__

7.1.1. from typing import get_type_hints

    @dataclass
    class Point:
        x: int
        y: Annotated[int, Label("ordinate")]

{'x': <class 'int'>, 'y': typing.Annotated[int, Label('ordinate')]}
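A self-contained sketch of reading Annotated metadata at runtime. Label is a hypothetical marker class defined here for illustration; it is not part of typing. By default get_type_hints() strips the metadata, and include_extras=True keeps it.

```python
from dataclasses import dataclass
from typing import Annotated, get_type_hints


@dataclass(frozen=True)
class Label:
    """Hypothetical metadata marker, not part of the typing module."""
    text: str


@dataclass
class Point:
    x: int
    y: Annotated[int, Label("ordinate")]


plain = get_type_hints(Point)                        # metadata stripped
extras = get_type_hints(Point, include_extras=True)  # metadata kept
y_metadata = extras["y"].__metadata__                # tuple of annotations
```

A library that understands Label can look it up in y_metadata; one that does not can keep treating y as a plain int.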

7.1.2. Use case: A calendar Event model, using pydantic https://github.com/pydantic/pydantic

    from dataclasses import dataclass
    from datetime import datetime, timezone
    from typing import Annotated, Any

    from pydantic import BaseModel

    class Event(BaseModel):
        summary: str
        description: str | None = None
        start_at: datetime | None = None
        end_at: datetime | None = None

    # -- Validation on datetime fields (using Pydantic)
    from pydantic import AfterValidator

    def tz_aware(d: datetime) -> datetime:  # defined before the class that uses it
        if d.tzinfo is None or d.tzinfo.utcoffset(d) is None:
            raise ValueError("expecting a TZ-aware datetime")
        return d

    class Event(BaseModel):
        summary: str
        description: str | None = None
        start_at: Annotated[datetime | None, AfterValidator(tz_aware)] = None
        end_at: Annotated[datetime | None, AfterValidator(tz_aware)] = None

    # -- iCalendar serialization support
    TZDatetime = Annotated[datetime, AfterValidator(tz_aware)]

    from . import ical

    class Event(BaseModel):
        summary: Annotated[str, ical.Serializer(label="summary")]
        description: Annotated[str | None, ical.Serializer(label="description")] = None
        start_at: Annotated[TZDatetime | None, ical.Serializer(label="dtstart")] = None
        end_at: Annotated[TZDatetime | None, ical.Serializer(label="dtend")] = None

    # module: ical
    @dataclass
    class Serializer:
        label: str

        def serialize(self, value: Any) -> str:
            if isinstance(value, datetime):
                value = value.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
            return f"{self.label.upper()}:{value}"

    def serialize_event(obj: Event) -> str:
        lines = []
        # get_annotations(obj, Serializer) is a helper that is not shown in these notes
        for name, a, _ in get_annotations(obj, Serializer):
            if (value := getattr(obj, name, None)) is not None:
                lines.append(a.serialize(value))
        return "\n".join(["BEGIN:VEVENT"] + lines + ["END:VEVENT"])

    # console rendering
    # >>> evt = Event(
    # ...     summary="FOSDEM",
    # ...     start_at=datetime(2024, 2, 3, 9, 00, 0, tzinfo=ZoneInfo("Europe/Brussels")),
    # ...     end_at=datetime(2024, 2, 4, 17, 00, 0, tzinfo=ZoneInfo("Europe/Brussels")),
    # ... )
    # >>> print(ical.serialize_event(evt))
    # BEGIN:VEVENT
    # SUMMARY:FOSDEM
    # DTSTART:20240203T080000Z
    # DTEND:20240204T160000Z
    # END:VEVENT

7.2. function annotation

def function_name(parameter1: type) -> return_type:

    from typing import Dict

    def get_first_name(full_name: str) -> str:
        return full_name.split(" ")[0]

    fallback_name: Dict[str, str] = {
        "first_name": "UserFirstName",
        "last_name": "UserLastName"
    }

    raw_name: str = input("Please enter your name: ")
    first_name: str = get_first_name(raw_name)

    # If the user didn't type anything in, use the fallback name
    if not first_name:
        # get_first_name(fallback_name) would pass a Dict where a str is expected
        first_name = fallback_name["first_name"]

    print(f"Hi, {first_name}!")

8. Strings

Quotation [kwəʊˈteɪʃn] for strings: single ('), double (") and triple (''' or """) quotes denote string literals

8.1. basics

S = 'str'; S = "str"; S = '''str'''; para_str = """this is a long string that is made up of several lines and non-printable characters such as TAB ( \t ) and they will show up that way when displayed. NEWLINEs within the string, whether explicitly given like this within the brackets [ \n ], or just a NEWLINE within the variable assignment will also show up."""

8.1.1. multiline

- s = """My Name is Pankajin Developers community."""

- s = ('asd' 'asd') # 'asdasd' - adjacent string literals are concatenated

- backslash

s = "My Name is Pankaj. " \

"website in Developers community."

- s = ' '.join(("My Name is Pankaj. I am the owner of", "JournalDev.com and"))

8.2. A formatted string literal or f-string

equivalent to format()

- '!s' calls str() on the expression

- '!r' calls repr() on the expression

- '!a' calls ascii() on the expression.

>>> name = "Fred"

>>> f"He said his name is {name!r}." # repr() is equivalent to !r

"He said his name is 'Fred'."

Width and precision (number of significant digits):

>>> width = 10

>>> precision = 4

>>> value = decimal.Decimal("12.34567")

>>> f"result: {value:{width}.{precision}}" # nested fields

'result:      12.35'

Date formatting:

>>> today = datetime(year=2017, month=1, day=27)

>>> f"{today:%B %d, %Y}" # using date format specifier

'January 27, 2017'

>>> number = 1024

>>> f"{number:#0x}" # using integer format specifier

'0x400'

format:

>>> '{:,}'.format(1234567890)

'1,234,567,890'

>>> 'Correct answers: {:.2%}'.format(19/22)

'Correct answers: 86.36%'

8.3. String Formatting Operator

- print ("My name is %s and weight is %d kg!" % ('Zara', 21))

8.4. string literal prefixes

str or strings - immutable sequences of Unicode code points.

- r' R' raw strings

- Raw strings do not treat the backslash as a special character at all. print (r'C:\\nowhere')

- b' B' bytes (NOT str)

- may only contain ASCII characters


8.5. raw strings, Unicode, formatted

- r'string' - treat backslashes as literal characters

- f'string' or F'string' - f"He said his name is {name!r}." - formatted

8.6. Efficient String Concatenation

- concatenation at runtime

    # Fastest: collect the pieces, then join once
    s = ''.join([str(num) for num in range(loop_count)])

    def g():
        sb = []
        for i in range(30):
            sb.append("abcdefg"[i % 7])
        return ''.join(sb)

    print(g())  # abcdefgabcdefgabcdefgabcdefgab

8.7. byte string

b''

- byte string to unicode: bytes.decode()

- unicode to byte string: str.encode()

In Python 2: your string is already encoded with some encoding. Before encoding it to ascii, you must decode it first. Python implicitly tries to decode it (that's why you get a UnicodeDecodeError, not a UnicodeEncodeError).
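In Python 3 the two directions are explicit, which makes the two error types easy to tell apart. A minimal sketch with an illustrative string (written with escapes so the source stays ASCII):

```python
text = "na\u00efve caf\u00e9"          # str: a sequence of Unicode code points
data = text.encode("utf-8")            # str -> bytes
round_trip = data.decode("utf-8")      # bytes -> str

try:
    text.encode("ascii")               # non-ASCII characters cannot be encoded
except UnicodeEncodeError as exc:
    encode_error = type(exc).__name__

try:
    data.decode("ascii")               # bytes above 0x7F cannot be decoded
except UnicodeDecodeError as exc:
    decode_error = type(exc).__name__

lossy = text.encode("ascii", errors="replace")   # unencodable characters become b'?'
```

The encoded form is two bytes longer than the text, because each of the two accented characters takes two bytes in UTF-8.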

9. Classes

- Class object - support two kinds of operations: attribute references and instantiation.

- Instance object - attribute references - data and methods

- data attributes correspond to “instance variables” in Smalltalk, and to “data members” in C++

- class (static) variable - shared by all instances

- instance variables may be reassigned

- instance methods may be reassigned to any method or function; the attribute name is just an alias

object - parent for all classes

- __class__ - class of instance

- __init__

- __new__

- __init_subclass__

- '__delattr__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__le__', '__lt__', '__ne__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__'

9.1. basic

    class MyClass:
        a = None

    c = MyClass()
    c.a = 3              # instance attribute

    class MyClass:
        """MyClass.i and MyClass.f are valid attribute references"""
        i = 12345        # class attribute

        def __init__(self, a):
            self.i = a   # instance attribute that shadows the class attribute

        def f(self):
            print("f")

    x = MyClass(2)       # instance; MyClass() without the argument raises TypeError
    x.a = 3              # data attribute
    print(x.a)
    print(x.i)
    print(MyClass.i)
    print(x.f)           # x.f is a bound method object
    print(MyClass.f)     # MyClass.f is a plain function object

Output:

    3
    2
    12345
    <bound method MyClass.f of <__main__.MyClass object at 0x7f37165d4790>>
    <function MyClass.f at 0x7f37165c5440>

    class Dog:
        kind = 'canine'   # class variable shared by all instances
        tricks = []       # static! one list shared by all instances

        def __init__(self, name):
            self.name = name   # instance variable unique to each instance

    # -------------- class method
    class C:
        @classmethod
        def f(cls, arg1, arg2, ...): ...

    # May be called on the class, C.f(), or on an instance, C().f().
    # For a derived class, the derived class object is passed as the implied first argument.

9.2. Special Attributes

- instance.__class__ - The class to which a class instance belongs.

- class.__mro__ or mro() - This attribute is a tuple of classes that are considered when looking for base classes during method resolution.

- class.__subclasses__() - Each class keeps a list of weak references to its immediate subclasses.

Class:

- __name__ The class name.

- __module__ The name of the module in which the class was defined.

- __dict__ The dictionary containing the class’s namespace.

- __bases__ A tuple containing the base classes, in the order of their occurrence in the base class list.

- __doc__ The class’s documentation string, or None if undefined.

- __annotations__ A dictionary containing variable annotations collected during class body execution. For best practices on working with annotations, please see Annotations Best Practices.

- __new__(cls,…) - static method - special-cased so you need not declare it as such. The return value of

__new__() should be the new object instance (usually an instance of cls).

- typically: super().__new__(cls[, …]) with appropriate arguments and then modifying the newly-created instance as necessary before returning it.

- then the new instance’s __init__() method will be invoked

- __call__(self,…)

Class instances

- super() - Return a proxy object that delegates method calls to a parent or sibling class of type

9.3. inheritance

9.3.1. Constructor

- classes whose base class is object should not call super().__init__()

- class inherited from object by default

- you should never write a class that inherits from object and doesn't have an init method

designed for cooperative inheritance:

    class CoopFoo:
        def __init__(self, *args, **kwargs):
            super().__init__(*args, **kwargs)  # forwards all unused arguments

super(type, object-or-type)

- type - get parent or sibling of type

- object-or-type.mro() determines the method resolution order to be searched

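super() == super(__class__, self), where __class__ is the class in which the method is defined. Do not write super(self.__class__, self): in a subclass self.__class__ is the subclass, which leads to infinite recursion.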

9.3.2. Subclassing:

- direct - a - b

- indirect - a - b - c

- virtual - abstract base class

class SubClassName (ParentClass1[, ParentClass2, ...]): 'Optional class documentation string' class_suite

9.3.3. built-in functions that work with inheritance:

- isinstance(obj, int) - True only if obj.__class__ is int or some class derived from int

- issubclass(bool, int) - True since bool is a subclass of int

- type(ins) == a.__class__

- type(ins) is Class_name

- isinstance(ins, Class_name)

- issubclass(ins.__class__, Class_name)

- class.mro() - get class.__mro__ attribute
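The checks listed above differ in whether they follow inheritance. A small sketch with two hypothetical classes:

```python
class Animal:
    pass


class Dog(Animal):
    pass


rex = Dog()

exact_type = type(rex) is Dog                        # exact class match
exact_is_parent = type(rex) is Animal                # type() ignores inheritance
via_isinstance = isinstance(rex, Animal)             # follows inheritance
via_issubclass = issubclass(rex.__class__, Animal)   # same check on the class
bool_is_int = issubclass(bool, int) and isinstance(True, int)
mro_names = [cls.__name__ for cls in Dog.mro()]
```

isinstance() is usually the better choice when subclasses should be accepted; type(x) is C is the stricter test.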

9.3.4. example

    class aa():
        def __init__(self, aaa, vv):
            self.aaa = aaa
            self.vv = vv

        def get(self):
            print(self.aaa + self.vv)

    class bb(aa):
        def __init__(self, aaa, *args, **kwargs):
            super().__init__(aaa, *args, **kwargs)
            self.aaa = aaa + 'asd'

    s = bb('aa', 'vv')
    s.get()
    >> aaasdvv

9.3.5. Multiple inheritance - left-to-right

- Method Resolution Order (MRO) (which parent's method gets called) changes dynamically to support cooperative calls to super() (class.__mro__) (obj.__class__.__mro__)

__spam is textually replaced with _classname__spam (name mangling), so an attribute of the parent class is not accidentally overridden in subclasses
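Both ideas, left-to-right method resolution and name mangling, can be seen in one diamond-shaped hierarchy. The class names here are made up for the sketch.

```python
class Base:
    def __init__(self):
        self.__token = "base"        # stored as _Base__token

    def who(self):
        return ["Base"]

    def base_token(self):
        return self.__token          # reads _Base__token


class Left(Base):
    def who(self):
        return ["Left"] + super().who()


class Right(Base):
    def who(self):
        return ["Right"] + super().who()


class Child(Left, Right):
    def __init__(self):
        super().__init__()
        self.__token = "child"       # stored as _Child__token, no clash

    def who(self):
        return ["Child"] + super().who()


c = Child()
order = [cls.__name__ for cls in Child.__mro__]
chain = c.who()                      # each super() call follows the MRO
stored_names = sorted(vars(c))
```

Note that super() inside Left calls Right, not Base: the next class is taken from the MRO of the instance's class, which is why sibling classes can cooperate.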

9.3.6. Abstract class (ABC - abstract base class)

- https://www.python.org/dev/peps/pep-3119/

- Numbers https://www.python.org/dev/peps/pep-3141/

- abc https://docs.python.org/3/library/abc.html

Notes:

- Dynamically adding abstract methods to a class, or attempting to modify the abstraction status of a method or class once it is created, are not supported.

    from abc import ABCMeta, abstractmethod

    class MyABC(metaclass=ABCMeta):
        @abstractmethod
        def foo(self):
            pass

    # or
    from abc import ABC, abstractmethod

    class MyABC(ABC):
        @abstractmethod
        def foo(self):
            pass

    class B(A):  # A is assumed to be an abstract base class defined elsewhere
        def __init__(self, first_name, last_name, salary):
            super().__init__(first_name, last_name)  # if A has __init__
            self.salary = salary

        def foo(self):
            return True

9.3.7. Virtual subclasses

Virtual subclass - a class registered with an ABC through its register() method, together with its descendants. The ABC overloads isinstance() and issubclass() so that they recognize registered classes.

    from abc import ABCMeta

    class MyABC(metaclass=ABCMeta):
        pass

    MyABC.register(tuple)
    assert issubclass(tuple, MyABC)  # tuple is now a virtual subclass of MyABC

9.3.8. calling parent class constructor

9.4. Getters and setters

- no private variables

@property - pythonic way

    class Celsius:
        def __init__(self, temperature=0):
            self.temperature = temperature   # goes through the setter

        def to_fahrenheit(self):
            return (self.temperature * 1.8) + 32

        def get_temperature(self):
            print("Getting value")
            return self._temperature

        def set_temperature(self, value):
            if value < -273:
                raise ValueError("Temperature below -273 is not possible")
            print("Setting value")
            self._temperature = value

        temperature = property(get_temperature, set_temperature)

    >>> c = Celsius()
    Setting value
    >>> c.temperature
    Getting value
    0
    >>> c.temperature = 37
    Setting value

    # ----------- OR ------
    class Celsius:
        def __init__(self, temperature=0):
            self.temperature = temperature

        def to_fahrenheit(self):
            return (self.temperature * 1.8) + 32

        @property
        def temperature(self):
            print("Getting value")
            return self._temperature

        @temperature.setter
        def temperature(self, value):
            if value < -273:
                raise ValueError("Temperature below -273 is not possible")
            print("Setting value")
            self._temperature = value

9.5. Polymorphism [pɔlɪˈmɔːfɪzm]

inheritance for shared behavior, not for polymorphism

    class Square(object):
        def draw(self, canvas):
            pass

    class Circle(object):
        def draw(self, canvas):
            pass

    shapes = [Square(), Circle()]
    for shape in shapes:
        shape.draw('canvas')

9.6. Protocols or emulation

This means overriding special (hidden) methods, which lets a class be used in the corresponding language constructs.

| Protocol | Methods | Supports syntax |
|---|---|---|
| Sequence | __getitem__ (with slice support) etc. | seq[1:2] |
| Iterators | __iter__, __next__ | for x in coll: |
| Comparison | __eq__, __gt__ etc. | x == y, x > y |
| Numeric | __add__, __sub__, __and__, etc. | x+y, x-y, x&y .. |
| String like | __str__, __repr__ (__unicode__ in Python 2) | print(x) |
| Attribute access | __getattr__, __setattr__ | obj.attr |
| Context managers | __enter__, __exit__ | with open('a.txt') as f: f.read() |

9.7. private and protected

- public - all

- Protected: _property (by convention only)

- Private: __property (name-mangled to _ClassName__property)

9.8. object

object() or object - base for all classes

dir(object())

['__class__', '__delattr__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__']

- __dict__ − Dictionary containing the class's namespace.

- __doc__ - docstring

- __init__ - constructor

- __str__ - toString() - Return a string version of object

- __name__ - Class name

- __module__ - Module name in which the class is defined. This attribute is "__main__" in interactive mode.

- __bases__ − A possibly empty tuple containing the base classes, in the order of their occurrence in the base class list.

- __hash__ - hashcode()

- __repr__ - string printable representation of an object

9.9. Singleton

- simple

- lazy (deferred)

- module-level singleton - all modules are singletons by default

9.9.1. example

    class Singleton(object):
        def __new__(cls):
            if not hasattr(cls, 'instance'):
                cls.instance = super(Singleton, cls).__new__(cls)
            return cls.instance

    # Lazy instantiation in Singleton
    class Singleton:
        __instance = None

        def __init__(self):
            if not Singleton.__instance:
                print(" __init__ method called..")
            else:
                print("Instance already created:", self.getInstance())

        @classmethod
        def getInstance(cls):
            if not cls.__instance:
                cls.__instance = Singleton()
            return cls.__instance

9.9.2. Monostate pattern

so that all instances share the same state

    class Borg:
        __shared_state = {"1": "2"}

        def __init__(self):
            self.x = 1
            self.__dict__ = self.__shared_state
            pass

    b = Borg()
    b1 = Borg()
    b.x = 4
    print("Borg Object 'b': ", b)    # b and b1 are distinct objects
    print("Borg Object 'b1': ", b1)
    print("Object State 'b':", b.__dict__)   # b and b1 share same state
    print("Object State 'b1':", b1.__dict__)

    >> Borg Object 'b':  <__main__.Borg object at 0x10baa5a70>
    >> Borg Object 'b1':  <__main__.Borg object at 0x10baa5638>
    >> Object State 'b': {'1': '2', 'x': 4}
    >> Object State 'b1': {'1': '2', 'x': 4}

9.10. anonymous class

9.10.1. 1

    class Bunch(dict):
        __getattr__, __setattr__ = dict.get, dict.__setitem__

dict(x=1,y=2) or {'x':1,'y':2}

Bunch(dict())

9.11. replace method

    class A():
        def cc(self):
            print("cc")

    c = A.cc             # keep the original function

    def ff(self):
        print("ff")
        c(self)

    A.cc = ff
    a = A()
    a.cc()

Output:

    ff
    cc

    class A():
        def cc(self):
            print("cc")

    a = A()
    c = a.cc             # keep the original bound method

    def ff(self):
        print("ff")
        c()

    A.cc = ff
    a = A()
    a.cc()

Output:

    ff
    cc

10. modules and packages

- module - file

- package - folder - must have __init__.py to be importable as a regular package.

- __main__.py - allow to execute folder: python -m folder

module can define

- functions

- classes

- variables

- runnable code.

When a module is imported (anyhow) into a script, the code in the top-level portion of a module is executed only once.

Import whole file - its contents are accessed through the module name -

import module1[, module2[,... moduleN]]

import support #just a file support.py

support.print_func("Zara")

Import specific thing from file to access without module

from modname import name1[, name2[, ... nameN]] from modname import *

__name__ - name of this module.

Locating Modules:

- current dir

- PYTHONPATH - shell variable - list of directories

- default path. On UNIX /usr/local/lib/python3

built-in functions

- dir(math) - list of strings containing the names defined by a module, or in the current scope when called without arguments

- locals() - within a function, it will return all the names that can be accessed locally from that function (dictionary)

- globals() - returns a dictionary of the names that can be accessed globally

- importlib.reload(module) - re-executes the top-level code of module (reload(module) in Python 2)

To make all of your functions available when you have imported the Phone package, put explicit imports in Phone/__init__.py:

    from .Pots import Pots
    from .Isdn import Isdn
    from .G3 import G3

Main

    def main(args):
        pass

    if __name__ == '__main__':  # name of the module namespace; '__main__' when run as: $ python a.py
        import sys
        main(sys.argv)
        quit()

10.1. module special attributes (Module level "dunders") [-ʌndə(ɹ)]

- __name__

- __doc__

- __dict__ - module’s namespace as a dictionary object

- __file__ - is the pathname of the file from which the module was loaded, if it was loaded from a file.

- __annotations__ - optional - dictionary containing variable annotations collected during module body execution

11. folders/files USECASES

- list files and directories, depth=1: os.listdir() -> list

- list only files, depth=1: os.listdir() AND os.path.isfile()
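The two use cases above might look like this. The helper names list_entries and list_files are made up for the sketch, and the demo runs in a temporary directory so it leaves nothing behind. Note that os.listdir() returns bare names, so they have to be joined with the directory before os.path.isfile() is called.

```python
import os
import tempfile


def list_entries(path):
    """Names of files and directories directly inside path (depth 1)."""
    return sorted(os.listdir(path))


def list_files(path):
    """Names of regular files directly inside path (depth 1)."""
    return sorted(
        name for name in os.listdir(path)
        if os.path.isfile(os.path.join(path, name))
    )


with tempfile.TemporaryDirectory() as root:
    os.mkdir(os.path.join(root, "sub"))
    for name in ("a.txt", "b.txt"):
        with open(os.path.join(root, name), "w") as fh:
            fh.write("demo")
    entries = list_entries(root)
    files = list_files(root)
```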

12. functions

- python does not support method overloading

- Functions can be defined inside other functions

- Functions see the scope where they are defined, not the scope where they are called.

- A function that does not return anything explicitly returns None

- A function can return several values: return a, b returns the tuple (a, b), which can be unpacked into several variables: a, b = c()

12.1. by value or by reference

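behaves like by value (arguments are always passed as object references, but an immutable object cannot be changed in place):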

- immutable:

- strings

- integers

- tuples

- others…

behaves like by reference (in-place changes are visible to the caller; rebinding the parameter is not):

- mutable:

- objects

- lists, sets, dicts
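The distinction above comes down to rebinding a name versus mutating an object. A minimal sketch with made-up function names:

```python
def rebind(n, items):
    n += 1                 # int is immutable: a new object is bound to the local name
    items = items + [99]   # builds a new list; the caller's list is untouched
    return n, items


def mutate(items):
    items.append(99)       # in-place change on the object shared with the caller


count = 1
data = [1, 2]

rebound = rebind(count, data)
after_rebind = (count, list(data))   # snapshot: the caller's objects are unchanged

mutate(data)                         # now the caller's list has changed
```

So even a list argument is safe from rebinding inside the function; only in-place operations such as append() leak out to the caller.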

12.2. Types of function arguments

- Positional arguments - in def f(first, second, third=None, fourth=None), (first, second) are positional and (third, fourth) are keyword arguments

- Keyword arguments - printinfo( age = 50, name = "miki" ) - order does not matter

- Default arguments - def printinfo( name, age = 35 ):

- Variable-length or Arbitrary Argument Lists positional arguments

    def printinfo(arg1, *vartuple):
        for var in vartuple:
            print(var)

    printinfo(1, 'asd', 'd31', 'cv')

- Variable-length or Arbitrary Argument Lists Keyword arguments

    def save_ranking(**kwargs):
        print(kwargs)

    save_ranking(first='ming', second='alice', fourth='wilson', third='tom', fifth='roy')
    >>> {'first': 'ming', 'second': 'alice', 'fourth': 'wilson', 'third': 'tom', 'fifth': 'roy'}

- both

    def save_ranking(*args, **kwargs): ...

    save_ranking('ming', 'alice', 'tom', fourth='wilson', fifth='roy')

12.3. example

    def functionname(parameters: type) -> return_type:
        "function_docstring"
        function_suite
        return [expression]

    def readit(file: str, fun: callable) -> list: ...

12.4. arguments, anonymous-lambda, global variables

Anonymous Functions: - one-line version of a function

    lambda [arg1 [,arg2,.....argn]]: expression

    (lambda x, y: x + y)(1, 2)

global variables can be read from all functions, including lambdas; assigning to one inside a function requires the global statement

    # global Money     # uncomment to make Money refer to the global variable
    Money = Money + 1  # without the global statement, Money is local here

12.5. attributes

User-defined function

- __doc__

- __name__

- __qualname__

- __module__

- __defaults__

- __code__

- __globals__

- __dict__

- __closure__

- __annotations__

- __kwdefaults__

Instance methods: read-only attributes:

- __self__ - class instance object

- __func__ - function object

- __module__ - name of the module the method was defined in

12.6. function decorators

- https://docs.python.org/3/glossary.html#term-decorator

- https://www.thecodeship.com/patterns/guide-to-python-function-decorators/

a function that takes one function and returns another function

- when you need to extend the functionality of functions that you don't want to modify

- @classmethod

Typically used to catch exceptions in wrapper

    def p_decorate(f):
        def inner(name):      # wrapper
            # do something here!
            return f(name)    # call the wrapped function
        return inner

    my_get_text = p_decorate(get_text)  # wrap get_text
    my_get_text("John")                 # the wrapper runs and calls the wrapped function

    # syntactic sugar
    @p_decorate
    def get_text(name):
        return "bla " + name

    # -------------
    get_text = div_decorate(p_decorate(strong_decorate(get_text)))
    # Equal to
    @div_decorate
    @p_decorate
    @strong_decorate
    def get_text(name): ...

    # -------------- Passing arguments to decorators ------
    def tags(tag_name):
        def tags_decorator(func):
            def func_wrapper(name):
                return "<{0}>{1}</{0}>".format(tag_name, func(name))
            return func_wrapper
        return tags_decorator

    @tags("p")
    def get_text(name):
        return "Hello " + name

12.7. built-in

https://docs.python.org/3/library/functions.html

- abs(x)

- absolute value

- all(iterable)

- all elements of the iterable are true or empty = true

- any(iterable)

- any element is true or empty = false

- ascii(object)

- printable representation of an object

- breakpoint(*args, **kws)

- drops you into the debugger at the call site. calls sys.breakpointhook(), which by default calls pdb.set_trace()

- callable(object)

- if the object - callable type - true. (classes are callable )

- @classmethod

- function decorator. May be called for class C.f() or for instance C().f() For derived class derived class object is passed as the implied first argument.

class C: @classmethod def f(cls, arg1, arg2, ...): ...

- compile(source, filename, mode, flags=0, dont_inherit=False, optimize=-1)

- into code or AST object - can be executed by exec() or eval(). Mode - 'exec' if source consists of a sequence of statements. 'eval' if it consists of a single expression

- delattr(object, name)

- like setattr() - delattr(x, 'foobar') is equivalent to del x.foobar.

- divmod(a, b)

- a, b - two (non-complex) numbers; returns the pair (quotient, remainder) of integer division

- enumerate(iterable, start=0)

- returns an iterator that yields tuples (0, item0), (1, item1), ..

- eval(expression, globals=None, locals=None)

- string is parsed and evaluated as a Python expression . The globals() and locals() functions returns the current global and local dictionary, respectively, which may be useful to pass around for use by eval() or exec().

- exec(object[, globals[, locals]])

- object must be either a string or a code object. Be aware that the return and yield statements may not be used outside of function definitions even within the context of code passed to the exec() function. The return value is None.

- filter(function, iterable)

- Construct an iterator from those elements of iterable for which function returns true.

- getattr(object, name[, default])

- Return the value of the named attribute of object. name must be a string. If the attribute does not exist, default is returned if given; otherwise AttributeError is raised

- setattr(object, name, value)

- assigns the value to the attribute, provided the object allows it

- globals()

- dictionary representing the current global symbol table (inside a function or method, this is the module where it is defined, not the module from which it is called)

- hasattr(object, string name)

- result is True if the string is the name of one of the object’s attributes, False if not

- hash(object)

- Hash values are integers. Object __hash__() method.

- id(object)

- “identity” of an object - integer. Unique and constant during life time. Two objects with non-overlapping lifetimes may have the same id() value.

- isinstance(object, classinfo)

- True if object is an instance of the classinfo argument.

- issubclass(class, classinfo)

- true if class is a subclass of classinfo. class is considered a subclass of itself

- iter(object[, sentinel])

- 1) without sentinel: object must support __iter__() or the sequence protocol (__getitem__()); returns an iterator object. 2) with sentinel: object must be callable; the iterator calls it on each __next__(), and StopIteration is raised when the returned value equals sentinel

- next(iterator[, default])

- __next__() If default is given, it is returned if the iterator is exhausted

- len(s)

- .

- map(function, iterable, …)

- Return an iterator that applies function to every item of iterable. May be applied to several iterables in parallel.

- max/min(iterable, *[, key, default])

- .

- max/min(arg1, arg2, *args[, key])

- largest item in an iterable or the largest of two or more arguments

- memoryview(obj)

- “memory view” object

- pow(x, y[, z])

- (x** y) % z

- repr(object)

- __repr__() method - printable representation of an object

- reversed(seq)

- __reversed__() method or support sequence protocol (the __len__() method and the __getitem__()

- round(number[, ndigits])

- number rounded to ndigits precision after the decimal point

- sorted(iterable, *, key=None, reverse=False)

- sorted list [] from the items in iterable

- @staticmethod

- transforms a method into a static method.

- sum(iterable[, start])

- returns the total

- super([type[, object-or-type]])

- Return a proxy object that delegates method calls to a parent/parents or sibling class of type

- vars([object])

- __dict__ attribute for a module, class, instance, or any other object

- zip(*iterables)

- Make an iterator of tuples that aggregates elements from each of the iterables.

- list(zip([1, 2, 3],[1, 2, 3])) = [(1, 1), (2, 2), (3, 3)]

- unzip: list(zip(*zip([1, 2, 3],[1, 2, 3]))) = [(1, 2, 3), (1, 2, 3)]

- __import__(name, globals=None, locals=None, fromlist=(), level=0)

- not needed in everyday Python programming

- class bool([x])

- standard truth testing procedure see 6.4

- class bytearray([source[, encoding[, errors]]])

- -mutable If it is a string, you must also give the encoding - it will use str.encode()

- class bytes([source[, encoding[, errors]]])

- -immutable

- class complex([real[, imag]])

- complex('1+2j'). - default - 0j

- class dict(**kwarg)

- dict(one=1, two=2, three=3) = {'one': 1, 'two': 2, 'three': 3}; dict([('two', 2), ('one', 1), ('three', 3)])

- class dict(mapping, **kwarg)

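- like dict(**kwarg), but starts from the items of mapping: dict({'one': 1}, two=2) = {'one': 1, 'two': 2}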

- class dict(iterable, **kwarg)

- dict(zip(['one', 'two', 'three'], [1, 2, 3]))

- class float([x])

- from a number or string x.

- class frozenset([iterable])

- see 6.3.

- class int([x])

- x.__int__() or x.__trunc__().

- class int(x, base=10)

- .

- class list([iterable])

- .

- class object

- Return a new featureless object.

- class property(fget=None, fset=None, fdel=None, doc=None)

- class range(stop)

- class range(start, stop[, step])

- immutable sequence type

- class set([iterable])

- .

- class slice(stop)

- .

- class str(object='')

- .

- class str(object=b'', encoding='utf-8', errors='strict')

- .

- tuple([iterable])

- .

- class type(object)

- object.__class__

- class type(name, bases, dict)

- .

- input([prompt])

- reads a line from stdin and returns it as a string (without the trailing newline).

- open(file, mode='r', buffering=-1, encoding=None, errors=None, newline=None, closefd=True, opener=None)

- Open file and return a corresponding file object.

- print(*objects, sep=' ', end='\n', file=sys.stdout, flush=False)

- to file or sys.stdout

- dir([object])

- list of valid attributes for that object. or list of names in the current local scope. __dir__() - method called - dir() - Is supplied primarily as a convenience for use at an interactive prompt

- help([object])

- built-in help system

- locals()

- the current local symbol table

- bin(x)

- bin(3) -> '0b11'

- chr(i)

- Return the string representing a character = i - Unicode code

- hex(x)

- hex(255) = '0xff'

- format(value[, format_spec])

- https://docs.python.org/3/library/string.html#formatspec

- oct(x)

- Convert an integer number to an octal string prefixed with “0o”.

- ord(c)

- c - string representing one Unicode character. Return integer.

12.8. Closure

def compose_greet_func(name): def get_message(): return "Hello there "+name+"!" return get_message greet = compose_greet_func("John") print(greet())

12.9. overloading

    from functools import singledispatch

    @singledispatch
    def func(arg1, arg2):
        print("default implementation of func - ", arg1, arg2)

    @func.register
    def func_impl_1(arg1: str, arg2):
        print("Implementation of func with first argument as string - ", arg1, arg2)

    @func.register
    def func_impl_2(arg1: int, arg2):
        print("Implementation of func with first argument as int - ", arg1, arg2)

    func(1, "hello")
    func("test", "hello")
    func(1.34, "hi")

Output:

    Implementation of func with first argument as int -  1 hello
    Implementation of func with first argument as string -  test hello
    default implementation of func -  1.34 hi

13. asterisk(*)

- For multiplication and power operations.

- 2*3 = 6

- 2**3 = 8

- For repeatedly extending the list-type containers.

- (0,) * 100

- For variadic arguments ("packing") - def save_ranking(*args, **kwargs):

- *args - tuple

- **kwargs - dict

- For unpacking containers, e.g. to pass a list to variadic arguments.

    import math

    def product(*numbers):
        return math.prod(numbers)

    product(*[2, 3, 5, 7, 11, 13])  # same as product(2, 3, 5, 7, 11, 13)

- For function parameters: everything before / is positional-only, everything between / and * is positional or keyword, everything after * is keyword-only.

def another_strange_function(a, b, /, c, *, d):
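A short sketch of how the `/` and `*` markers behave at call time. The function body is an assumption added for illustration; the notes give only the signature.

```python
def another_strange_function(a, b, /, c, *, d):
    """a, b: positional-only; c: positional or keyword; d: keyword-only."""
    return (a, b, c, d)

# Both calls are valid: c may be passed either way.
by_position = another_strange_function(1, 2, 3, d=4)
by_keyword = another_strange_function(1, 2, c=3, d=4)

# Passing a positional-only parameter by name raises TypeError.
try:
    another_strange_function(a=1, b=2, c=3, d=4)
    positional_only_enforced = False
except TypeError:
    positional_only_enforced = True

# Passing a keyword-only parameter by position also raises TypeError.
try:
    another_strange_function(1, 2, 3, 4)
    keyword_only_enforced = False
except TypeError:
    keyword_only_enforced = True
```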

14. with

with ContextManager() as c1, ContextManager() as c2:

14.1. Context manager class TEMPLATE

    class DatabaseConnection(object):
        def __enter__(self):
            # make a database connection and return it
            ...
            return self.dbconn

        def __exit__(self, exc_type, exc_val, exc_tb):
            # make sure the dbconnection gets closed
            self.dbconn.close()
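A runnable sketch of the same template, assuming a hypothetical `ManagedResource` class that only records its lifecycle in a list instead of opening a real connection. It shows that `__exit__` runs even when the body raises, and that several managers in one `with` are closed in reverse order.

```python
class ManagedResource:
    """Hypothetical resource that records its lifecycle in a shared list."""

    def __init__(self, name, log):
        self.name = name
        self.log = log

    def __enter__(self):
        self.log.append(f"open {self.name}")
        return self

    def __exit__(self, exc_type, exc_val, exc_tb):
        self.log.append(f"close {self.name}")
        return False  # a false value means: do not suppress the exception


events = []
try:
    with ManagedResource("c1", events) as c1, ManagedResource("c2", events) as c2:
        events.append("body")
        raise ValueError("boom")
except ValueError:
    events.append("handled")
```

Returning a true value from `__exit__` would swallow the exception, which is rarely what a cleanup-only manager wants.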

15. Operators and control structures

Ternary operation: a if condition else b

15.1. basic

Arithmetic

- + - *

- / - 9/2 = 4.5 - Division (always returns a float)

- % - 9%2 = 1 - Modulus - returns remainder

- ** - Exponent

- // - Floor Division - 9//2 = 4, -9//2 = -5 (rounds toward negative infinity)

- += -= *= /= %= **= //=
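The sign behaviour of `//` and `%` is easy to get wrong, so here is a small sketch with negative operands. The variable names are illustrative.

```python
# Floor division rounds toward negative infinity, not toward zero.
floor_pos = 9 // 2
floor_neg = -9 // 2
truncated = int(-9 / 2)    # int() truncates toward zero instead

# The remainder takes the sign of the divisor,
# so that (a // b) * b + (a % b) == a always holds.
rem_neg_dividend = -9 % 2
rem_neg_divisor = 9 % -2

# divmod() returns quotient and remainder in one call.
quotient, remainder = divmod(-9, 2)
```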

Comparison

== != > < >= <= (<> existed only in Python 2)

Bitwise

- &

- |

- ^ - XOR

- ~ - NOT (inversion): with a = 0011 1100 (60), ~a = 1100 0011 (-61 in two's complement)

- << - left shift: a<<2 = 1111 0000 (240)

- >>

Logical - and, or, not

Membership - in, not in

Identity operators (point to the same object) - is, is not
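A quick sketch of the difference between equality, identity and membership. The lists are made-up sample data.

```python
a = [1, 2, 3]
b = list(a)   # a new list with equal contents
c = a         # a second name for the same object

equal_but_distinct = (a == b) and (a is not b)
same_object = a is c

# Membership tests use == on the elements of the container.
has_two = 2 in a
lacks_nine = 9 not in a
```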

15.2. Operator Precedence [ˈpresədəns]

https://docs.python.org/3/reference/expressions.html#operator-precedence

- Binding or parenthesized expression, list display, dictionary display, set display

- (expressions…),

- [expressions…], {key: value…}, {expressions…}

- Subscription, slicing, call, attribute reference

- x[index], x[index:index], x(arguments…), x.attribute

- await x - Await expression

- ** - Exponentiation

- +x, -x, ~x - Positive, negative, bitwise NOT

- *, @, /, //, % - Multiplication, matrix multiplication, division, floor division, remainder

- +, - - Addition and subtraction

- <<, >> - Shifts

- & - Bitwise AND

- ^ - Bitwise XOR

- | - Bitwise OR

- in, not in, is, is not, <, <=, >, >=, !=, == - Comparisons, including membership tests and identity tests

- not x - Boolean NOT

- and - Boolean AND

- or - Boolean OR

- if – else - Conditional expression

- lambda - Lambda expression

- := - Assignment expression
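A few expressions whose results depend on the precedence order listed above (highest binding first). This is only a sketch; the comments show the implied grouping.

```python
# ** binds tighter than a unary minus on its left ...
neg_square = -2 ** 2                 # -(2 ** 2)
# ... but less tightly than a unary operator on its right.
inverse = 2 ** -1                    # 2 ** (-1)
# ** groups right to left.
tower = 2 ** 3 ** 2                  # 2 ** (3 ** 2)
# * binds tighter than +.
mixed = 1 + 2 * 3                    # 1 + (2 * 3)
# Bitwise | binds tighter than comparisons.
bits = 1 | 2 == 3                    # (1 | 2) == 3
# Comparisons bind tighter than not.
negated = not 1 == 2                 # not (1 == 2)
# and binds tighter than or.
logic = True or False and False      # True or (False and False)
```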

old:

- **

- ~ + - unary

- * / % //

- + -

- >> <<

- &

- ^ |

- <= < > >=

- <> == != - Equality operators (<> is Python 2 only)

- = %= /= //= -= += *= **= - Assignment operators

- is is not

- in not in

- not or and - Logical operators

15.3. value unpacking

    x = ("v1", "v2")
    a, b = x
    print(a, b)  # v1 v2

    T = (1,)
    b, = T  # b = 1
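Unpacking also accepts one starred target, which collects the leftover items into a list. A small sketch with made-up values:

```python
# One starred name absorbs whatever the other targets do not take.
first, *middle, last = range(5)

# Unpacking works through nested structures as well.
name, (x, y) = ("point", (3, 4))

# Swapping two names is tuple packing followed by unpacking.
left, right = "L", "R"
left, right = right, left
```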

15.4. if, loops

    if expression1:
        statement(s)
    elif expression2:
        statement(s)

    while expression:
        statement(s)

    while count < 5:
        print(count, " is less than 5")
        count = count + 1
    else:  # when the condition becomes false or at the end
        print(count, " is not less than 5")

    for iterating_var in sequence:
        statement(s)
    else:  # when no break encountered
        print(num, 'is a prime number')

    break     # terminates the loop
    continue  # skips the remainder of the current iteration
    pass      # null operation: an empty statement that does nothing

    # Compact loops (comprehensions), double loop
    [print(x, y) for x in range(1000) for y in range(x, len(range(1000)))]
    [g for g in [x['whole_word_timestamps'] for x in whisper_stable_result]]

    # list created every loop
    for item in array:
        array2.append(item)
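The `else` clause of a loop is the least familiar part of the template above, so here is a runnable sketch built on the prime-number hint in the notes. The function name `is_prime` is an assumption.

```python
def is_prime(num):
    """Return True if num is prime, using for/else."""
    if num < 2:
        return False
    for divisor in range(2, int(num ** 0.5) + 1):
        if num % divisor == 0:
            break          # a divisor was found: skip the else clause
    else:
        return True        # the loop finished without hitting break
    return False


primes = [n for n in range(20) if is_prime(n)]
```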

15.5. match 3.10

    command = input("What are you doing next? ")
    match command.split():
        case ["quit"]:  # must come before [action], which matches any single word
            print("Goodbye!")
            quit_game()
        case [action]:
            ...  # interpret single-verb action
        case [action, obj]:
            ...  # interpret action, obj

15.6. Slicing Sequence

- s[i:j] - slice from i up to, but not including, j

- s[i:j:k] - slice from i to j with step k

s = list(range(10)) - [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

- s[-2] - = 8

- s[1:] - [1, 2, 3, 4, 5, 6, 7, 8, 9]

- s[1::] - [1, 2, 3, 4, 5, 6, 7, 8, 9]

- s[:2] - [0, 1]

- s[:-2] - [0, 1, 2, 3, 4, 5, 6, 7]

- s[-2:] - [8, 9]

- s[::2] - [0, 2, 4, 6, 8]

- s[::-1] - [9, 8, 7, 6, 5, 4, 3, 2, 1, 0]

16. Traverse or iteration over containers

- see 17.1

16.1. iterator object

Behind the scenes, the for statement calls iter() on the container, which returns an iterator object.

- __next__() - returns the next item; when nothing is left, raises a StopIteration exception.

    # remove items inside a loop: iterate over a copy (ret[:])
    # https://docs.python.org/3/reference/compound_stmts.html#the-for-statement
    for f in ret[:]:
        ret.remove(f)

    for element in [1, 2, 3]:
        print(element)
    for element in (1, 2, 3):
        print(element)
    for key in {'one': 1, 'two': 2}:
        print(key)
    for char in "123":
        print(char)
    for line in open("myfile.txt"):
        print(line, end='')

    class Reverse:  # add iterator behavior to your classes
        """Iterator for looping over a sequence backwards."""
        def __init__(self, data):
            self.data = data
            self.index = len(data)

        def __iter__(self):
            return self

        def __next__(self):
            if self.index == 0:
                raise StopIteration
            self.index = self.index - 1
            return self.data[self.index]

    rev = Reverse('spam')
    for char in rev:
        print(char)

    # compact form
    >>> t = {x: x*x for x in range(0, 4)}
    >>> print(t)
    {0: 0, 1: 1, 2: 4, 3: 9}
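Roughly what a for loop does with iter() and next(), written out by hand. This is a sketch of the protocol, not the interpreter's actual implementation, and `manual_for` is a made-up name.

```python
def manual_for(iterable):
    """Collect items the way a for loop would, using iter() and next() directly."""
    collected = []
    iterator = iter(iterable)          # calls iterable.__iter__()
    while True:
        try:
            item = next(iterator)      # calls iterator.__next__()
        except StopIteration:          # signals that nothing is left
            break
        collected.append(item)
    return collected


letters = manual_for("abc")
keys = manual_for({"one": 1, "two": 2})   # iterating a dict yields its keys
```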

16.2. iterate dictionary

- for key in a_dict:

- for item in a_dict.items(): - tuple

- for key, value in a_dict.items():

- for key in a_dict.keys():

- for value in a_dict.values():

Dictionaries preserve insertion order (an implementation detail of CPython 3.6, guaranteed by the language since Python 3.7), so you can build a dictionary sorted by key using sorted() and a dictionary comprehension:

- sorted_income = {k: incomes[k] for k in sorted(incomes)}

- sorted(incomes) - returns the keys in sorted order
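A runnable sketch of sorting by key and by value. The contents of `incomes` are made-up sample data.

```python
incomes = {"orange": 3500.0, "apple": 5600.0, "banana": 5000.0}

# Sort by key: sorted(incomes) iterates over the keys.
by_key = {k: incomes[k] for k in sorted(incomes)}

# Sort by value: sort the (key, value) pairs on the second element.
by_value = dict(sorted(incomes.items(), key=lambda item: item[1]))

# Iterating key/value pairs of the result follows the new order.
pairs = [(k, v) for k, v in by_value.items()]
```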

17. The Language Reference

17.1. yield and generator expression

form of coroutine

- (expression comp_for) - (x*y for x in range(10) for y in range(x, x+10)) = <generator object>

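yield - used to create a generator; typically used to produce values for a loop.

- can be used only inside a function.

- like return, but the function is suspended after yielding the value and resumes from that point when the next value is requested (in a loop or otherwise)

- async def with yield - asynchronous generator - not iterable with a plain for - <async_generator object> (an async def without yield returns a coroutine object)

- an async generator does not implement the __iter__ and __next__ methods; it implements __aiter__ and __anext__ and is consumed with async for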
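A minimal sketch of a generator function and a generator expression. `countdown` is a made-up example name.

```python
def countdown(n):
    """Yield n, n-1, ..., 1, suspending after each value."""
    while n > 0:
        yield n        # execution pauses here until the next value is requested
        n -= 1


gen = countdown(3)
first = next(gen)      # runs the body up to the first yield
rest = list(gen)       # resumes and drains the remaining values
exhausted = list(gen)  # a generator can be consumed only once

# Generator expression: evaluated lazily, one item at a time.
total = sum(x * x for x in range(4))
```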

17.2. yield from

Allows a generator to delegate to another iterable or generator:

    def gen_list1(iterable):
        for i in list(iterable):
            yield i

    # equal to:
    def gen_list2(iterable):
        yield from list(iterable)
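Delegation is most useful with recursion. A sketch, assuming nesting is expressed with plain lists; `flatten` is a made-up helper name.

```python
def flatten(nested):
    """Yield the leaves of arbitrarily nested lists, left to right."""
    for item in nested:
        if isinstance(item, list):
            yield from flatten(item)   # delegate to the sub-generator
        else:
            yield item


flat = list(flatten([1, [2, [3, 4]], 5]))
```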

17.3. ex

    def agen():
        for n in range(1, 10):
            yield n
    # list(agen()) -> [1, 2, 3, 4, 5, 6, 7, 8, 9]

    def a():
        for n in range(1, 3):
            yield n

    def agen():
        for n in range(1, 7):
            yield from a()
    # list(agen()) -> [1, 2, 1, 2, 1, 2, 1, 2, 1, 2, 1, 2]

    #-------------------------
    import asyncio

    async def ticker(delay, to):
        """Yield numbers from 0 to *to* every *delay* seconds."""
        for i in range(to):
            yield i
            await asyncio.sleep(delay)

17.4. function decorator

#+name example_1

    def hello(func):
        def inner():
            print("Hello ")
            func()
        return inner

    @hello
    def name():
        print("Alice")

#+name example_2

    def star(n):
        def decorate(fn):
            def wrapper(*args, **kwargs):
                print(n*'*')
                result = fn(*args, **kwargs)
                print(result)
                print(n*'*')
                return result
            return wrapper
        return decorate

    @star(5)
    def add(a, b):
        return a + b

    add(10, 20)

17.5. class decorator

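- Without functools.wraps, a decorated function f reports the metadata of the wrapper: print(f.__name__) and print(f.__doc__) show the name and docstring of wrapper, not of f.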

#+name ex1

    from functools import wraps

    class Star:
        def __init__(self, n):
            self.n = n

        def __call__(self, fn):
            @wraps(fn)  # addition to fix f.__name__ and __doc__
            def wrapper(*args, **kwargs):
                print(self.n*'*')
                result = fn(*args, **kwargs)
                print(result)
                print(self.n*'*')
                return result
            return wrapper

    @Star(5)
    def add(a, b):
        return a + b

    # or add = Star(5)(add)
    add(10, 20)
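A side-by-side sketch of what functools.wraps changes. The decorators `plain` and `preserving` are made-up names that do nothing except wrap the call.

```python
from functools import wraps


def plain(fn):
    def wrapper(*args, **kwargs):
        return fn(*args, **kwargs)
    return wrapper


def preserving(fn):
    @wraps(fn)  # copies __name__, __doc__ and more from fn onto wrapper
    def wrapper(*args, **kwargs):
        return fn(*args, **kwargs)
    return wrapper


@plain
def add(a, b):
    """Add two numbers."""
    return a + b


@preserving
def mul(a, b):
    """Multiply two numbers."""
    return a * b
```

Both decorated functions still compute the right result; only the introspection data differs.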

17.6. lines

new line

- End of line - Unix LF, Windows CR LF, old Macintosh CR - all of these forms can be used equally, regardless of platform

- In Python - C conventions for newline characters - \n - ASCII LF

Comments

    # - line comment
    """ comment """ - multiline (a string literal used as a comment)

Explicit line joining (backslash) - a line ending in a backslash cannot carry a comment

    if 1900 < year < 2100 and 1 <= month <= 12 \
       and 1 <= day <= 31 and 0 <= hour < 24:  # Looks like a valid date
        pass

Implicit line joining

    month_names = ['Januari', 'Februari', 'Maart',      # you can
                   'Oktober', 'November', 'December']   # do it

Blank line - contains only spaces, tabs, form feeds (FF or \f) and possibly a comment

17.7. Indentation

- Leading whitespace (spaces and tabs)

- determine the grouping of statements

- TabError - if a source file mixes tabs and spaces in a way that makes the meaning dependent on the worth of a tab in spaces

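Tabs are replaced (from left to right) by one to eight spaces, so that the total number of characters up to and including the replacement is a multiple of eight.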

17.8. identifier [aɪˈdentɪfaɪər] or names

[A-Za-z_][A-Za-z0-9_]* - digits are allowed except as the first character; case sensitive

Reserved classes of identifiers

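- _* - not imported by from module import *

- __*__ - system-defined ("dunder") names

- __* - class-private names (subject to name mangling inside a class definition)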

17.9. Keywords Exactly as written here:

| False | await | else | import | pass |

| None | break | except | in | raise |

| True | class | finally | is | return |

| and | continue | for | lambda | try |

| as | def | from | nonlocal | while |

| assert | del | global | not | with |

| async | elif | if | or | yield |

17.10. Numeric literals

- integers

- floating point numbers - 3.14 10. .001 1e100 3.14e-10 0e0 3.14_15_93